Problem description

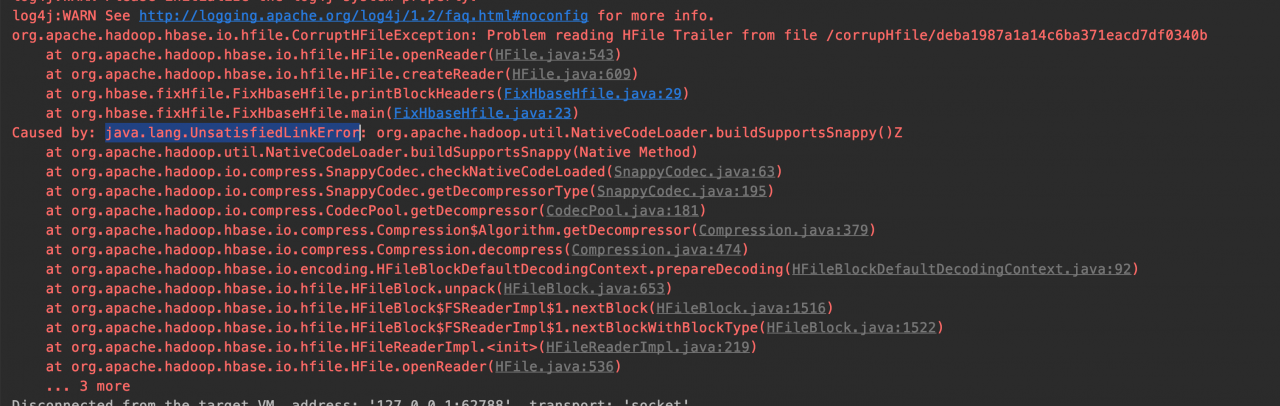

When using HBase to read an HFile compressed with Snappy, the following error is reported:

```
java.lang.UnsatisfiedLinkError: org.apache.hadoop.util.NativeCodeLoader.buildSupportsSnappy()Z
```

Further up in the log, there is also this warning:

```
Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
```

Analysis

To fix this, the Hadoop native library has to be compiled for macOS. However, when compiling several Hadoop versions, we ran into CMake problems:

- hadoop-yarn-server-nodemanager: make failed; this one can be solved with the YARN-8622 patch.
- hadoop-mapreduce-client-nativetask: make failed.

We tried Hadoop 2.8.5, 3.1.1, and 3.2.1, and some of these versions hit the same problems. Even after the first failure was fixed, the second one remained, so we gave up on compiling.

Solution

Fortunately, there is open source: searching GitHub for "hadoop native mac" turns up prebuilt macOS native libraries that others have shared. Use them to replace the contents of ${HADOOP_HOME}/lib/native, then add the following configuration:

```shell
$ vim .zshrc

export HADOOP_HOME=/Users/jiazz/devEnvs/hadoop-3.1.1
export HADOOP_PREFIX=/Users/jiazz/devEnvs/hadoop-3.1.1
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
export HADOOP_OPTS="-Djava.library.path=${HADOOP_HOME}/lib/native"
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:${HADOOP_HOME}/lib/native
export JAVA_LIBRARY_PATH=$JAVA_LIBRARY_PATH:${HADOOP_HOME}/lib/native

$ source .zshrc
```
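Before running `checknative`, it can help to confirm that the replaced directory actually contains the macOS build of the library. The helper below is a minimal sketch: the function name `check_native_dir` is hypothetical (not part of Hadoop), and it only looks for `libhadoop.dylib`, the file that `checknative` reports as loaded in the output further down.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Hadoop): checks that a Hadoop native
# directory exists and contains the macOS build of libhadoop.
# Defaults to $HADOOP_HOME/lib/native when no argument is given.
check_native_dir() {
  local dir="${1:-${HADOOP_HOME:-}/lib/native}"
  if [ ! -d "$dir" ]; then
    echo "not a directory: $dir"
    return 1
  fi
  # macOS shared libraries use the .dylib suffix; a Linux build ships libhadoop.so instead.
  if [ -f "$dir/libhadoop.dylib" ]; then
    echo "found: $dir/libhadoop.dylib"
    return 0
  fi
  echo "libhadoop.dylib not found in $dir"
  return 1
}

# Usage:
# check_native_dir                      # uses $HADOOP_HOME/lib/native
# check_native_dir /path/to/lib/native  # placeholder path
```

A missing `libhadoop.dylib` here usually means the copy into `lib/native` did not happen, or a Linux `.so` build is still in place.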

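Whether the replacement worked can be checked with `hadoop checknative -a` (the output before and after the replacement is shown below).

Note that macOS checks where the replaced files came from and may refuse to load them. If that happens, allow them under System Preferences → Security & Privacy → General.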

Before the replacement:

```
$ hadoop checknative -a
WARNING: HADOOP_PREFIX has been replaced by HADOOP_HOME. Using value of HADOOP_PREFIX.
2020-11-03 13:28:45,307 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Native library checking:
hadoop: false
zlib: false
zstd : false
snappy: false
lz4: false
bzip2: false
openssl: false
ISA-L: false
```

After the replacement:

```
$ hadoop checknative -a
WARNING: HADOOP_PREFIX has been replaced by HADOOP_HOME. Using value of HADOOP_PREFIX.
2020-11-03 13:28:59,672 WARN bzip2.Bzip2Factory: Failed to load/initialize native-bzip2 library system-native, will use pure-Java version
2020-11-03 13:28:59,677 INFO zlib.ZlibFactory: Successfully loaded & initialized native-zlib library
2020-11-03 13:28:59,967 WARN erasurecode.ErasureCodeNative: Loading ISA-L failed: Failed to load libisal.2.dylib (dlopen(libisal.2.dylib, 9): image not found)
2020-11-03 13:28:59,967 WARN erasurecode.ErasureCodeNative: ISA-L support is not available in your platform... using builtin-java codec where applicable
Native library checking:
hadoop: true /Users/jiazz/devEnvs/hadoop-3.1.1/lib/native/libhadoop.dylib
zlib: true /usr/lib/libz.1.dylib
zstd : true /usr/local/Cellar/zstd/1.4.5/lib/libzstd.1.4.5.dylib
snappy: true /usr/local/Cellar/snappy/1.1.8/lib/libsnappy.1.1.8.dylib
lz4: true revision:10301
bzip2: false
openssl: false EVP_CIPHER_CTX_reset
ISA-L: false Loading ISA-L failed: Failed to load libisal.2.dylib (dlopen(libisal.2.dylib, 9): image not found)
2020-11-03 13:28:59,995 INFO util.ExitUtil: Exiting with status 1: ExitException
```
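In the new output `snappy` is `true`, which is what reading Snappy-compressed HFiles needs. The command still ends with `Exiting with status 1`, because with `-a` it reports failure whenever one of the checked libraries is unavailable (here bzip2, openssl, and ISA-L). A script that only cares about one codec should therefore look at the output rather than the exit code. A minimal sketch, assuming the output format shown above; the function name `native_lib_loaded` is hypothetical, and the pattern tolerates the extra space in lines like `zstd : true`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `hadoop checknative` output from stdin and
# succeeds only if the line for the given library reports "true".
# Optional spaces around the colon are allowed (e.g. "zstd : true").
native_lib_loaded() {
  local name="$1"
  grep -Eq "^${name}[[:space:]]*:[[:space:]]*true"
}

# Usage: checknative -a may exit with status 1 even when snappy loaded,
# so inspect its output instead of its exit code. 2>&1 merges the log
# lines in case they go to stderr; they start with a timestamp, so they
# never match the pattern.
# hadoop checknative -a 2>&1 | native_lib_loaded snappy && echo "snappy OK"
```

If the calling script enables `set -o pipefail`, the pipeline would again pick up the non-zero status of `checknative`, so capture the output into a variable first in that case.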

Running the program in IDEA again, the problem is solved. We also verified that when the program is started with `java -jar` on a server, the parameter `-Djava.library.path=$HADOOP_HOME/lib/native` must be added as well.
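For the server case, the system property has to appear before `-jar`: anything after the jar file name is passed to the program's `main` method rather than to the JVM. A minimal sketch of a wrapper that does this; the function name `run_with_native` and the jar name in the usage line are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: launches a jar with java.library.path pointing at
# the Hadoop native directory. JVM options must precede -jar; everything
# after the jar path is forwarded to the application's main method.
run_with_native() {
  local jar="$1"
  shift
  java -Djava.library.path="${HADOOP_HOME}/lib/native" -jar "$jar" "$@"
}

# Usage (placeholder jar name and argument):
# run_with_native hfile-reader.jar /path/to/hfile
```

Keeping the flag in one function avoids the common mistake of appending it after the jar name, where the JVM silently ignores it.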

Similar Posts:

- Hadoop Start Error: ssh: Could not resolve hostname xxx: Name or service not known

- HDFS Operate hadoop Error: Command not Found [How to Solve]

- [Solved] /bin/bash: /us/rbin/jdk1.8.0/bin/java: No such file or directory

- [Solved] HDFS Filed to Start namenode Error: Premature EOF from inputStream;Failed to load FSImage file, see error(s) above for more info

- [Solved] idea connect to remote Hadoop always error: org.apache.hadoop.io.nativeio.NativeIO$Windows.createDirectoryWithMode0(Ljava/lang/String;I)

- Initialization of react native Android [How to Solve]

- [Solved] hadoop:hdfs.DFSClient: Exception in createBlockOutputStream

- eclipse scala failed to load the jni shared library

- Hadoop “Unable to load native-hadoop library for y

- [Solved] ava.io.IOException: HADOOP_HOME or hadoop.home.dir are not set