I have spent the last two days learning Kafka. I deployed the demo from the official website to my own virtual machine, and it ran normally.

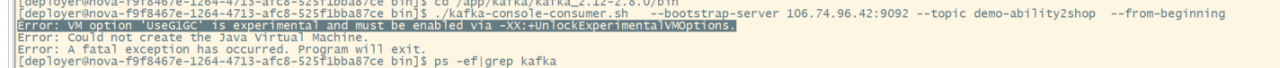

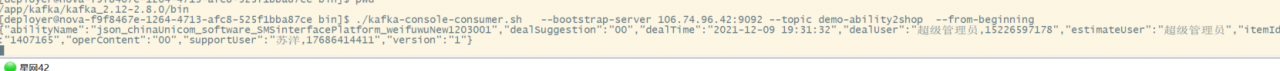

I then deployed it to a host on the company's R&D line and found that the producer could not send messages at all.

Some of the error logs are as follows:

[2014-11-13 09:58:09,660] WARN Error while fetching metadata [{TopicMetadata for topic mor ->

No partition metadata for topic mor due to kafka.common.LeaderNotAvailableException}] for topic [mor]: class kafka.common.LeaderNotAvailableException (kafka.producer.BrokerPartitionInfo)

[2014-11-13 09:58:09,660] ERROR Failed to send requests for topics mor with correlation ids in [17,24] (kafka.producer.async.DefaultEventHandler)

[2014-11-13 09:58:09,660] ERROR Error in handling batch of 17 events (kafka.producer.async.ProducerSendThread)

kafka.common.FailedToSendMessageException: Failed to send messages after 3 tries.

at kafka.producer.async.DefaultEventHandler.handle(DefaultEventHandler.scala:90)

at kafka.producer.async.ProducerSendThread.tryToHandle(ProducerSendThread.scala:104)

at kafka.producer.async.ProducerSendThread$$anonfun$processEvents$3.apply(ProducerSendThread.scala:87)

at kafka.producer.async.ProducerSendThread$$anonfun$processEvents$3.apply(ProducerSendThread.scala:67)

at scala.collection.immutable.Stream.foreach(Stream.scala:526)

at kafka.producer.async.ProducerSendThread.processEvents(ProducerSendThread.scala:66)

at kafka.producer.async.ProducerSendThread.run(ProducerSendThread.scala:44)

The procedure was exactly the same on both machines. The only change on the R&D host was ZooKeeper's listening port, and I passed that port in every command, so wrong parameters were not the cause.

Comparing the configuration on the R&D host with the one on the virtual machine showed no difference other than this port.

I then enabled the following line in the server.properties file (it is commented out by default, so I removed the leading #):

#host.name=localhost

After restarting, the problem was solved.

(In the virtual machine, however, everything works fine without this change.

I suspect the difference comes from distribution-specific system configuration:

Virtual machine:

Distributor ID: Ubuntu

Description: Ubuntu 14.04.1 LTS

Release: 14.04

Codename: trusty

R&D Line:

LSB Version: :

Distributor ID: RedHatEnterpriseServer

Description: Red Hat Enterprise Linux Server release 5.4 (Tikanga)

Release: 5.4

Codename: Tikanga

)
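As a rough illustration of why the leading `#` matters, the sketch below parses two made-up fragments of server.properties with `java.util.Properties`, which treats a line starting with `#` as a comment. The class name `HostNameCheck` and the sample file contents are hypothetical, and the sketch only shows whether the key is set. It does not reproduce how the broker chooses its host name when the key is missing.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

public class HostNameCheck {

    // Returns the effective host.name value, or null when the key is absent
    // or only appears on a commented-out line.
    public static String effectiveHostName(String serverPropertiesText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(serverPropertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props.getProperty("host.name");
    }

    public static void main(String[] args) {
        // Hypothetical file contents, for illustration only.
        String commented = "broker.id=0\nport=9092\n#host.name=localhost\n";
        String enabled = "broker.id=0\nport=9092\nhost.name=localhost\n";

        System.out.println("commented: " + effectiveHostName(commented)); // commented: null
        System.out.println("enabled: " + effectiveHostName(enabled));     // enabled: localhost
    }
}
```

A check like this could be run against the real file on both machines to confirm that the setting is actually in effect on each.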

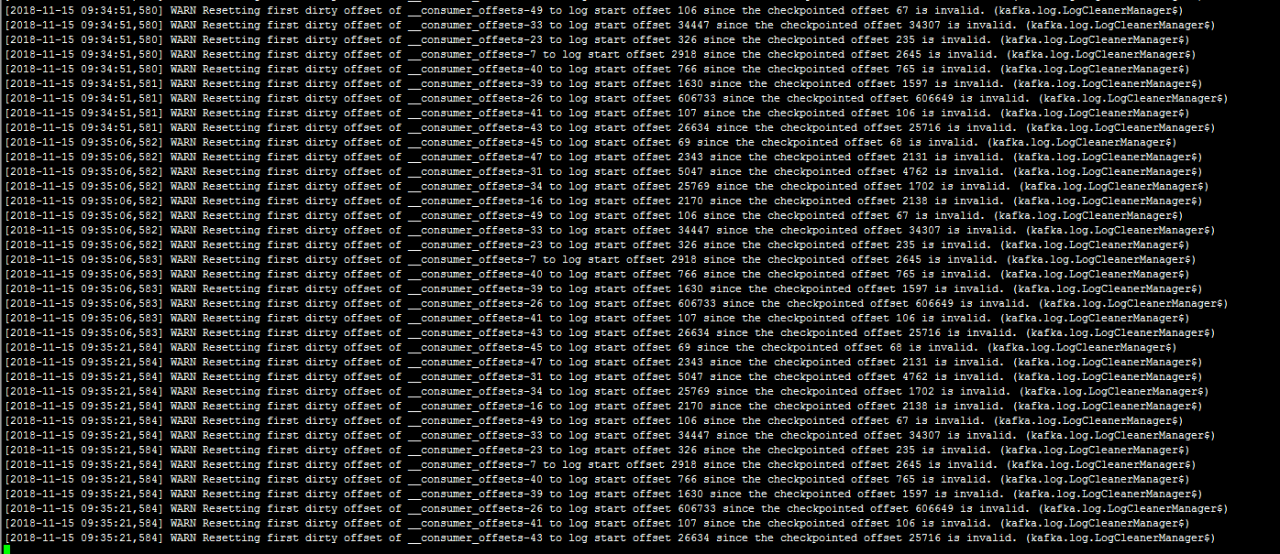

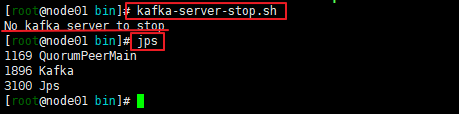

While solving this problem I also ran into another issue, although so far it does not seem to affect operation.

Once ZooKeeper and the broker are running, ZooKeeper reports exceptions whenever I create a producer, a topic, or a consumer. Part of the log is as follows:

[2014-11-13 09:12:13,486] INFO Got user-level KeeperException when processing sessionid:0x149a6b3e36c0001 type:setData cxid:0x3 zxid:0xfffffffffffffffe txntype:unknown reqpath:n/a Error Path:/config/topics/morning Error:KeeperErrorCode = NoNode for /config/topics/morning (org.apache.zookeeper.server.PrepRequestProcessor)

[2014-11-13 09:12:13,506] INFO Got user-level KeeperException when processing sessionid:0x149a6b3e36c0001 type:create cxid:0x4 zxid:0xfffffffffffffffe txntype:unknown reqpath:n/a Error Path:/config/topics Error:KeeperErrorCode = NodeExists for /config/topics (org.apache.zookeeper.server.PrepRequestProcessor)

[2014-11-13 09:12:13,535] INFO Processed session termination for sessionid: 0x149a6b3e36c0001 (org.apache.zookeeper.server.PrepRequestProcessor)

[2014-11-13 09:19:50,958] INFO Got user-level KeeperException when processing sessionid:0x149a6bbeaf50000 type:create cxid:0x4 zxid:0xfffffffffffffffe txntype:unknown reqpath:n/a Error Path:/brokers Error:KeeperErrorCode = NoNode for /brokers (org.apache.zookeeper.server.PrepRequestProcessor)

[2014-11-13 09:19:50,982] INFO Got user-level KeeperException when processing sessionid:0x149a6bbeaf50000 type:create cxid:0xa zxid:0xfffffffffffffffe txntype:unknown reqpath:n/a Error Path:/config Error:KeeperErrorCode = NoNode for /config (org.apache.zookeeper.server.PrepRequestProcessor)

[2014-11-13 09:19:50,998] INFO Got user-level KeeperException when processing sessionid:0x149a6bbeaf50000 type:create cxid:0x10 zxid:0xfffffffffffffffe txntype:unknown reqpath:n/a Error Path:/admin Error:KeeperErrorCode = NoNode for /admin (org.apache.zookeeper.server.PrepRequestProcessor)

[2014-11-13 09:19:51,295] INFO Got user-level KeeperException when processing sessionid:0x149a6bbeaf50000 type:setData cxid:0x19 zxid:0xfffffffffffffffe txntype:unknown reqpath:n/a Error Path:/controller_epoch Error:KeeperErrorCode = NoNode for /controller_epoch (org.apache.zookeeper.server.PrepRequestProcessor)

[2014-11-13 09:19:51,374] INFO Got user-level KeeperException when processing sessionid:0x149a6bbeaf50000 type:delete cxid:0x27 zxid:0xfffffffffffffffe txntype:unknown reqpath:n/a Error Path:/admin/preferred_replica_election Error:KeeperErrorCode = NoNode for /admin/preferred_replica_election (org.apache.zookeeper.server.PrepRequestProcessor)

[2014-11-13 10:31:50,651] INFO Got user-level KeeperException when processing sessionid:0x149a6bbeaf5001a type:setData cxid:0x19 zxid:0xfffffffffffffffe txntype:unknown reqpath:n/a Error Path:/consumers/test-consumer-group/offsets/mor/0 Error:KeeperErrorCode = NoNode for /consumers/test-consumer-group/offsets/mor/0 (org.apache.zookeeper.server.PrepRequestProcessor)

[2014-11-13 10:31:50,661] INFO Got user-level KeeperException when processing sessionid:0x149a6bbeaf5001a type:create cxid:0x1a zxid:0xfffffffffffffffe txntype:unknown reqpath:n/a Error Path:/consumers/test-consumer-group/offsets Error:KeeperErrorCode = NoNode for /consumers/test-consumer-group/offsets (org.apache.zookeeper.server.PrepRequestProcessor)

At first, when the producer could not send messages, I thought these exceptions were the cause. However, the same exceptions also appear in the virtual machine, where the producer sends messages without any problem.

After searching online, I found that some people attribute them to shutting down ZooKeeper and the Kafka server incorrectly, while others attribute them to not deleting the ZooKeeper and Kafka logs under /tmp.

I tried both remedies, but the exceptions were still reported.

If anyone knows what causes these exceptions, please let me know. Thank you very much.

In addition, when a consumer written in Java ran on my local machine and connected to the R&D host, the connection was closed almost immediately and the messages sent by the producer were not received.

The cause was that the configured timeout was too short: the consumer dropped the connection before ZooKeeper had finished reading its data. Part of the log is as follows:

[2014-11-13 10:28:47,989] INFO Accepted socket connection from /192.168.50.33:2676 (org.apache.zookeeper.server.NIOServerCnxn)

[2014-11-13 10:28:47,989] WARN EndOfStreamException: Unable to read additional data from client sessionid 0x0, likely client has closed socket (org.apache.zookeeper.server.NIOServerCnxn)

The solution is to set a longer timeout in the consumer configuration, as follows:

props.put("zookeeper.session.timeout.ms", "400000");
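For context, here is a minimal sketch of how that setting might sit alongside the other consumer properties. It uses only `java.util.Properties`, so it runs without Kafka on the classpath. The class name `ConsumerTimeoutSketch` and the address `rd-host:2181` are placeholders I made up; the group name is the one that appears in the ZooKeeper log above.

```java
import java.util.Properties;

public class ConsumerTimeoutSketch {

    // Builds the properties for a ZooKeeper-based (0.8-style) consumer.
    public static Properties consumerProps(String zkConnect, String groupId, int sessionTimeoutMs) {
        Properties props = new Properties();
        props.put("zookeeper.connect", zkConnect);   // ZooKeeper host:port
        props.put("group.id", groupId);              // consumer group name
        // A longer session timeout, so the connection is not dropped early.
        props.put("zookeeper.session.timeout.ms", Integer.toString(sessionTimeoutMs));
        return props;
    }

    public static void main(String[] args) {
        // "rd-host:2181" is a hypothetical address; use the real host and the
        // ZooKeeper port configured on the R&D machine.
        Properties props = consumerProps("rd-host:2181", "test-consumer-group", 400000);
        System.out.println(props.getProperty("zookeeper.session.timeout.ms"));
    }
}
```

In a real consumer these properties would then be handed to the Kafka consumer configuration in the same way as in the official example code.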

One line in the Java producer example on the official Kafka website is not very clear:

props.put("metadata.broker.list","broker1:9092,broker2:9092");

Here broker1 and broker2 stand for the host names of the brokers, not the broker IDs. For example, with two brokers on the local machine:

props.put("metadata.broker.list","localhost:9092,localhost:9093");
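To make the host:port requirement concrete, the following sketch rejects a broker list made of bare IDs before it is put into the producer properties. The class `BrokerListSketch` and its helper methods are hypothetical names of my own, not part of Kafka, and the check is deliberately simple (it does not handle IPv6 addresses, for instance).

```java
import java.util.Properties;

public class BrokerListSketch {

    // True when every comma-separated entry looks like host:port.
    public static boolean isHostPortList(String brokerList) {
        for (String entry : brokerList.split(",")) {
            String[] parts = entry.trim().split(":");
            if (parts.length != 2 || parts[0].isEmpty()) {
                return false;
            }
            try {
                int port = Integer.parseInt(parts[1]);
                if (port < 1 || port > 65535) {
                    return false;
                }
            } catch (NumberFormatException e) {
                return false;
            }
        }
        return true;
    }

    // Builds producer properties, refusing lists such as "0,1" (broker IDs).
    public static Properties producerProps(String brokerList) {
        if (!isHostPortList(brokerList)) {
            throw new IllegalArgumentException("expected host:port entries, got: " + brokerList);
        }
        Properties props = new Properties();
        props.put("metadata.broker.list", brokerList);
        props.put("serializer.class", "kafka.serializer.StringEncoder");
        return props;
    }

    public static void main(String[] args) {
        System.out.println(isHostPortList("localhost:9092,localhost:9093")); // true
        System.out.println(isHostPortList("0,1"));                           // false
    }
}
```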