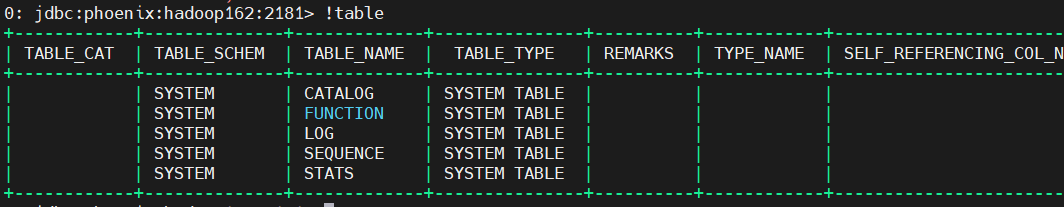

/opt/module/phoenix-5.0.0/bin » ./sqlline.py hadoop162:2181 atguigu@hadoop162 Setting property: [incremental, false] Setting property: [isolation, TRANSACTION_READ_COMMITTED] issuing: !connect jdbc:phoenix:hadoop162:2181 none none org.apache.phoenix.jdbc.PhoenixDriver Connecting to jdbc:phoenix:hadoop162:2181 SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/opt/module/phoenix-5.0.0/phoenix-5.0.0-HBase-2.0-client.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/opt/module/hadoop-3.1.3/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. 21/11/24 11:07:15 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable 21/11/24 11:07:15 WARN client.ConnectionImplementation: Retrieve cluster id failed java.util.concurrent.ExecutionException: org.apache.phoenix.shaded.org.apache.zookeeper.KeeperException$NoNodeException: KeeperErrorCode = NoNode for /hbase/hbaseid at java.util.concurrent.CompletableFuture.reportGet(CompletableFuture.java:357) at java.util.concurrent.CompletableFuture.get(CompletableFuture.java:1895) at org.apache.hadoop.hbase.client.ConnectionImplementation.retrieveClusterId(ConnectionImplementation.java:527) at org.apache.hadoop.hbase.client.ConnectionImplementation.(ConnectionImplementation.java:287) at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) at java.lang.reflect.Constructor.newInstance(Constructor.java:423) at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:219) at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:114) at org.apache.phoenix.query.HConnectionFactory$HConnectionFactoryImpl.createConnection(HConnectionFactory.java:47) at org.apache.phoenix.query.ConnectionQueryServicesImpl.openConnection(ConnectionQueryServicesImpl.java:430) at org.apache.phoenix.query.ConnectionQueryServicesImpl.access$400(ConnectionQueryServicesImpl.java:272) at org.apache.phoenix.query.ConnectionQueryServicesImpl$12.call(ConnectionQueryServicesImpl.java:2556) at org.apache.phoenix.query.ConnectionQueryServicesImpl$12.call(ConnectionQueryServicesImpl.java:2532) at org.apache.phoenix.util.PhoenixContextExecutor.call(PhoenixContextExecutor.java:76) at org.apache.phoenix.query.ConnectionQueryServicesImpl.init(ConnectionQueryServicesImpl.java:2532) at org.apache.phoenix.jdbc.PhoenixDriver.getConnectionQueryServices(PhoenixDriver.java:255) at org.apache.phoenix.jdbc.PhoenixEmbeddedDriver.createConnection(PhoenixEmbeddedDriver.java:150) at org.apache.phoenix.jdbc.PhoenixDriver.connect(PhoenixDriver.java:221) at sqlline.DatabaseConnection.connect(DatabaseConnection.java:157) at sqlline.DatabaseConnection.getConnection(DatabaseConnection.java:203) at sqlline.Commands.connect(Commands.java:1064) at sqlline.Commands.connect(Commands.java:996) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at sqlline.ReflectiveCommandHandler.execute(ReflectiveCommandHandler.java:38) at sqlline.SqlLine.dispatch(SqlLine.java:809) at sqlline.SqlLine.initArgs(SqlLine.java:588) at sqlline.SqlLine.begin(SqlLine.java:661) at sqlline.SqlLine.start(SqlLine.java:398) at sqlline.SqlLine.main(SqlLine.java:291) Caused by: org.apache.phoenix.shaded.org.apache.zookeeper.KeeperException$NoNodeException: KeeperErrorCode = NoNode for /hbase/hbaseid at org.apache.phoenix.shaded.org.apache.zookeeper.KeeperException.create(KeeperException.java:111) at org.apache.phoenix.shaded.org.apache.zookeeper.KeeperException.create(KeeperException.java:51) at org.apache.hadoop.hbase.zookeeper.ReadOnlyZKClient$ZKTask$1.exec(ReadOnlyZKClient.java:168) at org.apache.hadoop.hbase.zookeeper.ReadOnlyZKClient.run(ReadOnlyZKClient.java:323) at java.lang.Thread.run(Thread.java:748)

–> It is caused by Hbase not being up.

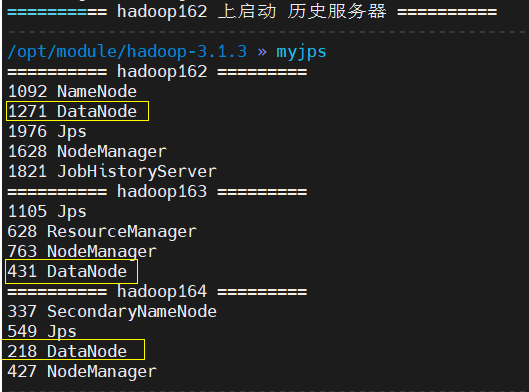

–> it’s because hadoop cluster is not started properly and datanode nodes are not up

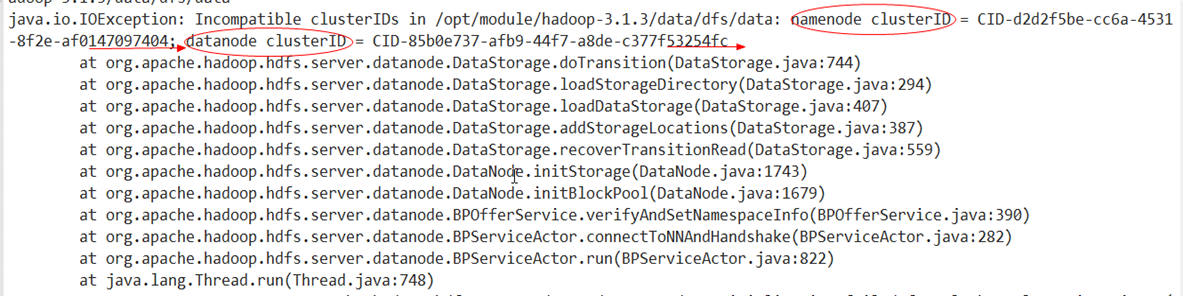

–> The cluster ID of the datanode is not the same as the cluster ID of the namenode, so dn can’t find the cluster

![]()

Duplicate formatting causes the clusterID of the datanode and the clusterID of the new namenode to be inconsistent, so when the cluster is started there is only the namenode, not the datanode

Workaround.

1, stop the cluster and reboot to restart (to ensure that Hadoop’s services are killed) the node where the namenode is located

2. Clean up.

Delete all data and logs in the root directory of hadoop (three nodes): rm -rf $HADOOP_HOME/data $HADOOP_HOME/logs

Delete all contents under /tmp: sudo rm -rf /tmp/*

Use the cleanup script to delete the contents of the three nodes: data, logs, and tmp directories in one click.

3, reformat

hdfs namenode -format

Finally, restart the cluster successfully.

Cleanup script

!/bin/bash

for host in hadoop102 hadoop103 hadoop104

do

ssh $host rm -rf $HADOOP_HOME/data $HADOOP_HOME/logs

ssh $host sudo rm -rf /tmp/*

done