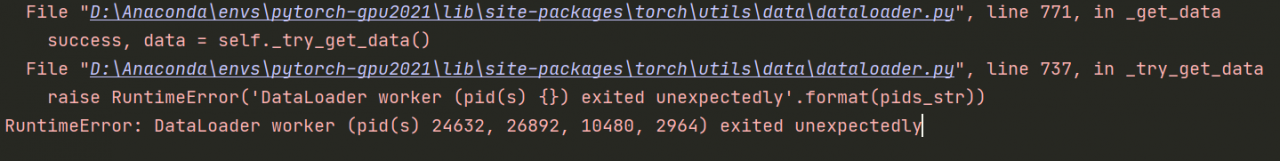

Error message:

Cause: the error comes from the num_workers argument of torch.utils.data.DataLoader. Change num_workers to 0, which is the default value. num_workers specifies how many worker subprocesses are used to load data; the default of 0 means no subprocesses are started and the data is loaded in the main process.

If the program still reports an error after num_workers is set to 0 and prompts you to set the environment variable KMP_DUPLICATE_LIB_OK=TRUE, either set that environment variable on your system or set it temporarily by adding this line at the beginning of the code:

import os
os.environ['KMP_DUPLICATE_LIB_OK'] = 'TRUE'
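A slightly more defensive variant of the temporary setting is sketched below. It assumes the variable should be in place before torch is imported, and it uses os.environ.setdefault so that a value already defined outside the script is not overwritten. The final print is only there to show the result.

```python
import os

# Set the variable only if it is not already defined, so a value chosen
# outside the script (for example in the shell) is left untouched.
os.environ.setdefault("KMP_DUPLICATE_LIB_OK", "TRUE")

# Assumption: torch and other libraries that load OpenMP are imported
# only after this point.
print(os.environ["KMP_DUPLICATE_LIB_OK"])
```

Because this only affects the current process, the setting disappears when the script exits.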

Original code:

import torch
from torchvision import datasets, transforms

# Training dataset
train_loader = torch.utils.data.DataLoader(
    datasets.MNIST(root='.', train=True, download=True,
                   transform=transforms.Compose([
                       transforms.ToTensor(),
                       transforms.Normalize((0.1307,), (0.3081,))
                   ])), batch_size=64, shuffle=True, num_workers=4)

# Test dataset
test_loader = torch.utils.data.DataLoader(
    datasets.MNIST(root='.', train=False, transform=transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.1307,), (0.3081,))
    ])), batch_size=64, shuffle=True, num_workers=4)

Modified code:

# Training dataset
train_loader = torch.utils.data.DataLoader(
    datasets.MNIST(root='.', train=True, download=True,
                   transform=transforms.Compose([
                       transforms.ToTensor(),
                       transforms.Normalize((0.1307,), (0.3081,))
                   ])), batch_size=64, shuffle=True, num_workers=0)

# Test dataset
test_loader = torch.utils.data.DataLoader(
    datasets.MNIST(root='.', train=False, transform=transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.1307,), (0.3081,))
    ])), batch_size=64, shuffle=True, num_workers=0)
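Since both loaders repeat the same settings, one way to avoid changing num_workers in two places is to keep the shared arguments in a single dictionary. The following is a minimal sketch of that idea. NUM_WORKERS and make_loader_kwargs are illustrative names that do not appear in the original code, and the block deliberately avoids importing torch so it can run on its own.

```python
# Hypothetical names: NUM_WORKERS and make_loader_kwargs are illustrative only.
NUM_WORKERS = 0  # 0 loads data in the main process (the DataLoader default)


def make_loader_kwargs(shuffle, num_workers=NUM_WORKERS, batch_size=64):
    """Return keyword arguments shared by the training and test loaders."""
    if num_workers < 0:
        raise ValueError("num_workers must be non-negative")
    return {
        "batch_size": batch_size,
        "shuffle": shuffle,
        "num_workers": num_workers,
    }


# Same settings as the modified code above: batch_size=64, shuffle=True.
train_kwargs = make_loader_kwargs(shuffle=True)
test_kwargs = make_loader_kwargs(shuffle=True)
print(train_kwargs)
```

The dictionaries can then be unpacked into the existing calls, for example torch.utils.data.DataLoader(dataset, **train_kwargs), so a later change to the worker count only touches NUM_WORKERS.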