The following error appears when running the Spark test program:

Exception in thread "main" java.lang.NoSuchMethodError: scala.Product.$init$(Lscala/Product;)V

at org.apache.spark.SparkConf$DeprecatedConfig.<init>(SparkConf.scala:809)

at org.apache.spark.SparkConf$.<init>(SparkConf.scala:642)

at org.apache.spark.SparkConf$.<clinit>(SparkConf.scala)

at org.apache.spark.SparkConf.set(SparkConf.scala:94)

at org.apache.spark.SparkConf.set(SparkConf.scala:83)

at org.apache.spark.SparkConf.setAppName(SparkConf.scala:120)

at com.spark.HiveContextTest.main(HiveContextTest.java:9)

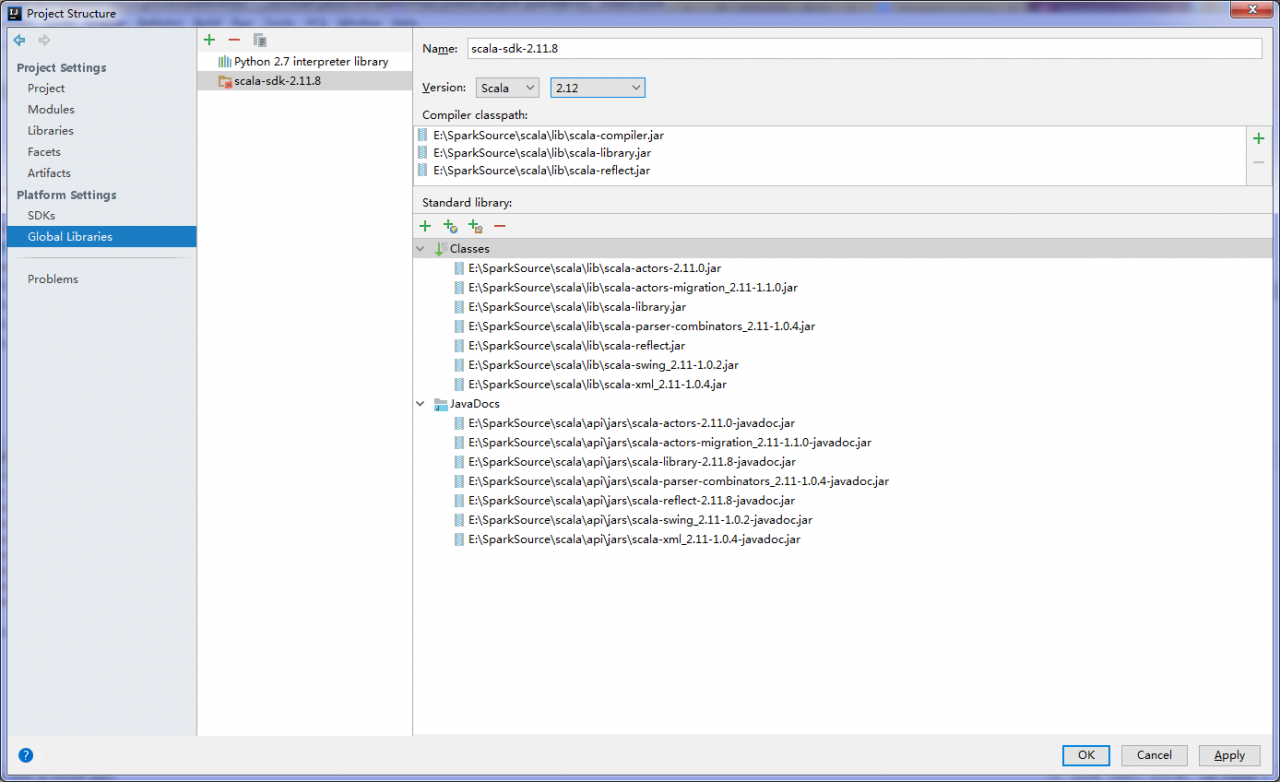

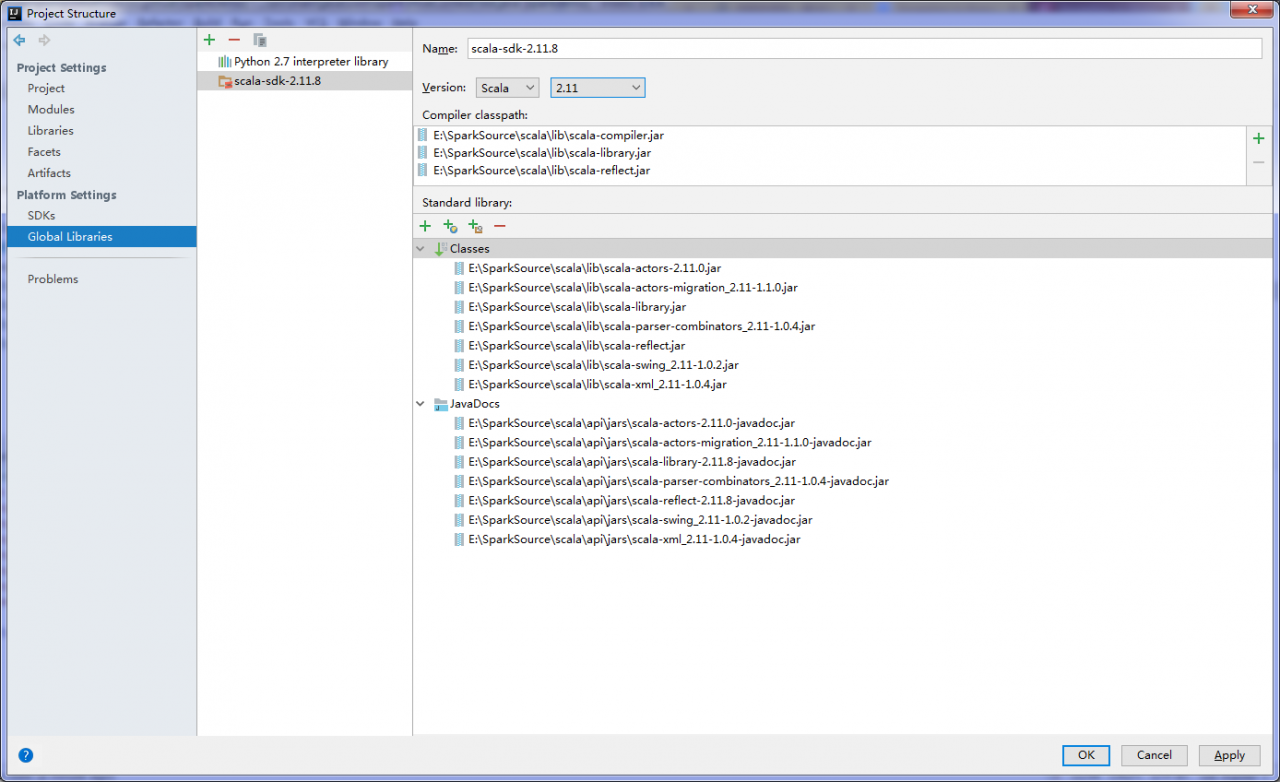

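Cause: the Scala runtime version that the project brings in is not the same as the default Scala version configured in IDEA. This particular `NoSuchMethodError` on `scala.Product.$init$` typically means that Spark classes compiled for Scala 2.12 are running against a Scala 2.11 library. Making the two versions match (the Scala SDK set in IDEA and the Scala version the Spark dependency was built for) resolves the error.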
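As a rough illustration of the version check described above, the sketch below compares the Scala binary version encoded in a Spark artifact name (the `_2.12` suffix in `spark-core_2.12`) with the version of the Scala library in use. The class name `ScalaVersionCheck`, its helper methods, and the sample version strings are hypothetical and only for illustration. It assumes Scala 2.x naming, where the binary version is the first two components of the full version.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper, not part of Spark or IDEA: checks whether a Spark
// artifact's Scala suffix matches the Scala library version in use.
public class ScalaVersionCheck {

    // Extracts the Scala binary version suffix from an artifact id,
    // e.g. "spark-core_2.12" -> "2.12". Returns null if there is no suffix.
    public static String binaryVersionOfArtifact(String artifactId) {
        Matcher m = Pattern.compile("_(\\d+\\.\\d+)$").matcher(artifactId);
        return m.find() ? m.group(1) : null;
    }

    // Reduces a full Scala 2.x version to its binary version,
    // e.g. "2.11.8" -> "2.11". Returns null if the string does not match.
    public static String binaryVersionOfScala(String fullVersion) {
        Matcher m = Pattern.compile("^(\\d+\\.\\d+)").matcher(fullVersion);
        return m.find() ? m.group(1) : null;
    }

    // True only when the artifact suffix and the Scala library agree.
    public static boolean isCompatible(String artifactId, String scalaVersion) {
        String expected = binaryVersionOfArtifact(artifactId);
        return expected != null && expected.equals(binaryVersionOfScala(scalaVersion));
    }

    public static void main(String[] args) {
        // Sample values chosen for illustration only.
        System.out.println(isCompatible("spark-core_2.12", "2.11.8"));  // mismatch
        System.out.println(isCompatible("spark-core_2.12", "2.12.15")); // match
    }
}
```

To see which Scala library is actually on the runtime classpath, printing `scala.util.Properties.versionString()` from the test program should show it, assuming the Scala library loads at all.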
