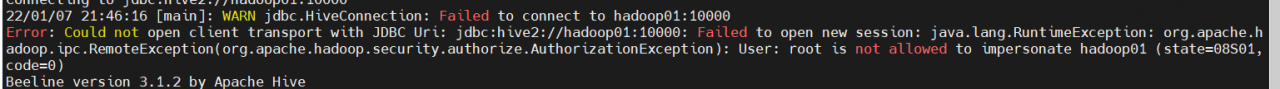

Beeline connection error in Hive: "User: *** is not allowed to impersonate"

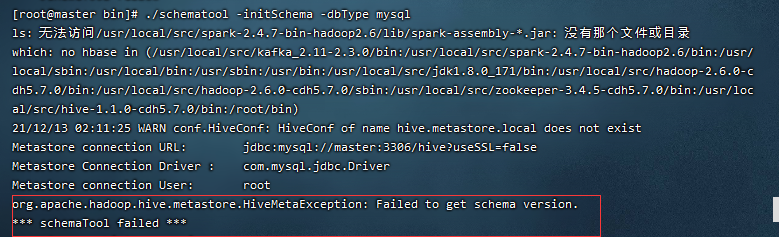

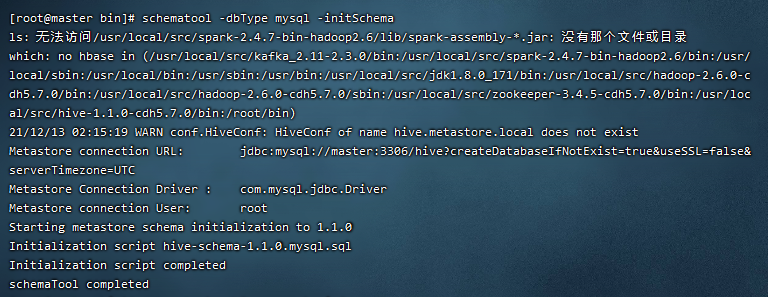

1. Error details

When Beeline connects to Hive, the connection fails with a "User: root is not allowed to impersonate" error.

The command that triggers the error is:

    bin/beeline -u jdbc:hive2://hadoop01:10000 -n root

2. Solution

Add the following properties to etc/hadoop/core-site.xml under the Hadoop installation directory, then restart the Hadoop cluster. Here root is the username named in the error message; substitute your own user if it differs.

    <property>
      <name>hadoop.proxyuser.root.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.root.groups</name>
      <value>*</value>
    </property>
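Before restarting, it can help to confirm that the entries actually landed in the file. The following is a minimal sketch, not part of Hadoop or Hive: the helper name `proxyuser_settings` and the inline `SAMPLE` document are assumptions made for illustration, and in practice you would read the text of your own core-site.xml instead.

```python
import xml.etree.ElementTree as ET


def proxyuser_settings(core_site_xml: str, user: str) -> dict:
    """Return the hadoop.proxyuser.<user>.* values found in a core-site.xml string.

    Hypothetical helper for illustration; keys are the suffix after the user
    name (for example "hosts" or "groups").
    """
    prefix = f"hadoop.proxyuser.{user}."
    settings = {}
    root = ET.fromstring(core_site_xml)
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name.startswith(prefix):
            settings[name[len(prefix):]] = (prop.findtext("value") or "").strip()
    return settings


# Sample content standing in for a real core-site.xml (assumption).
SAMPLE = """<configuration>
  <property>
    <name>hadoop.proxyuser.root.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.groups</name>
    <value>*</value>
  </property>
</configuration>"""

settings = proxyuser_settings(SAMPLE, "root")
print(settings)
```

An empty result for the user named in the error message suggests the properties are missing, misspelled, or placed outside the `<configuration>` element.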

3. Reference

Hadoop 2.0 and later support a ProxyUser mechanism, in which user A's authentication credentials are used to access the Hadoop cluster in the name of user B. From the server's point of view it is user B who is accessing the cluster, so authorization of each request (HDFS file permissions and permission to submit to YARN queues) is evaluated against user B.

Suppose a superuser named super wants to submit jobs and access HDFS on behalf of a user joe. The superuser has Kerberos credentials, but joe does not. The tasks must run as joe, and any file access on the NameNode must also be performed as joe. This requires that joe can connect to the NameNode or JobTracker over a connection authenticated with super's Kerberos credentials. In other words, super is impersonating joe.

With the following settings in core-site.xml, the superuser super can connect only from host1 and host2, and can impersonate only users belonging to group1 or group2:

    <property>
      <name>hadoop.proxyuser.super.hosts</name>
      <value>host1,host2</value>
    </property>
    <property>
      <name>hadoop.proxyuser.super.groups</name>
      <value>group1,group2</value>
    </property>
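The effect of these two properties can be modelled in a few lines. This is a simplified sketch of the rule described above, not Hadoop's actual implementation: the function names `may_impersonate` and `_allowed` are hypothetical, the configuration is represented as a plain dictionary, and host matching is by exact name only.

```python
def _allowed(configured: str, actual: set) -> bool:
    """True if the comma-separated setting contains '*' or any entry in `actual`."""
    entries = {e.strip() for e in configured.split(",") if e.strip()}
    return "*" in entries or bool(entries & actual)


def may_impersonate(conf: dict, superuser: str, remote_host: str, target_groups) -> bool:
    """Simplified model: the superuser's host AND the target user's groups must match.

    A missing property is treated as an empty list, so nothing is allowed.
    """
    hosts = conf.get(f"hadoop.proxyuser.{superuser}.hosts", "")
    groups = conf.get(f"hadoop.proxyuser.{superuser}.groups", "")
    return _allowed(hosts, {remote_host}) and _allowed(groups, set(target_groups))


conf = {
    "hadoop.proxyuser.super.hosts": "host1,host2",
    "hadoop.proxyuser.super.groups": "group1,group2",
}

# super connecting from host1 on behalf of a user in group1: allowed
print(may_impersonate(conf, "super", "host1", ["group1"]))
# same request from host3: rejected, host3 is not listed
print(may_impersonate(conf, "super", "host3", ["group1"]))
# root has no proxyuser entries at all: rejected
print(may_impersonate(conf, "root", "host1", ["group1"]))
```

The last call corresponds to the situation behind the Beeline error: with no `hadoop.proxyuser.root.*` entries configured, root is not permitted to act as a proxy user.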

As a workaround where security requirements are low, the wildcard * can be used to allow impersonation from any host or of any user. With the settings below, root connecting from any host can impersonate any user belonging to any group. In this case no other user actually needs to be impersonated; the entries in effect set up proxy authorization for root itself, so that root can also access the Hadoop cluster:

    <property>
      <name>hadoop.proxyuser.root.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.root.groups</name>
      <value>*</value>
    </property>
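Because wildcard entries relax security, it may be worth listing them so they can be tightened later. The sketch below is an illustration under stated assumptions: `wildcard_proxyusers` is a hypothetical helper, and `CORE_SITE` is a made-up sample combining the root and super settings shown in this article.

```python
import xml.etree.ElementTree as ET


def wildcard_proxyusers(core_site_xml: str) -> list:
    """Return the names of hadoop.proxyuser.* properties whose value is exactly '*'."""
    flagged = []
    root = ET.fromstring(core_site_xml)
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        value = (prop.findtext("value") or "").strip()
        if name.startswith("hadoop.proxyuser.") and value == "*":
            flagged.append(name)
    return flagged


# Sample content standing in for a real core-site.xml (assumption).
CORE_SITE = """<configuration>
  <property>
    <name>hadoop.proxyuser.root.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.super.hosts</name>
    <value>host1,host2</value>
  </property>
</configuration>"""

flagged = wildcard_proxyusers(CORE_SITE)
print(flagged)
```

Only the root entries are reported here; the super entry names explicit hosts and is left alone.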