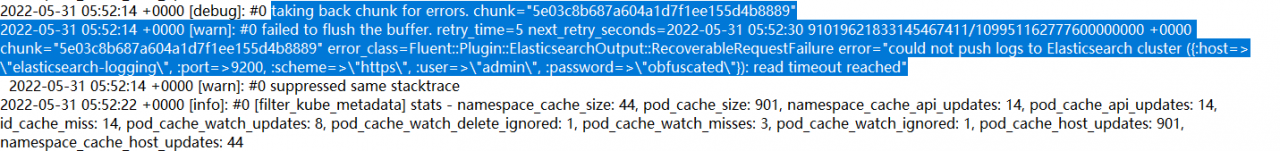

Background: The architecture is that fluentd logs are collected locally and then uploaded to es. It is found that the local stock of logs collected by fluentd has been increasing, and the logs are not written to es. The fluentd log reports an error of read timeout reached, as shown in the following figure

Troubleshooting steps:

1. Suspecting a disk performance problem, we used the `dd` command to test the disk used by es-data. The write speed was acceptable, so this cause was ruled out.

2. Suspecting a corrupted buffer file, we went to the /var/log/td-agent/buffer/elasticsearch directory on the affected Fluentd host, moved the chunk file matching the chunk ID in the error message somewhere else, and restarted Fluentd. A new chunk file then appeared and blocked log writing again. After several attempts, this cause was ruled out as well.

3. Suspecting the number of Fluentd flush threads, we looked at the `flush_thread_count` parameter (it defaults to 1 if not set).

We set it to 8 and restarted Fluentd again, but `read timeout reached` was still reported; where one thread had produced one error at a time, eight threads now produced eight errors at a time. So this parameter was not the cause.
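For reference, here is a minimal sketch of where this setting lives, assuming a Fluentd v1 file buffer on the `fluent-plugin-elasticsearch` output. The buffer path is the one from step 2; the `<match **>` tag pattern is a hypothetical placeholder, not our actual configuration.

```conf
<match **>
  @type elasticsearch
  # host/port and other output settings omitted
  <buffer>
    # File buffer: chunk files are written under this path (see step 2)
    @type file
    path /var/log/td-agent/buffer/elasticsearch
    # Number of threads flushing chunks in parallel; 1 if unset
    flush_thread_count 8
  </buffer>
</match>
```

With 8 flush threads, up to 8 chunks are sent concurrently, which would be consistent with the timeout error now appearing 8 times at once rather than disappearing.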

4. Suspecting that Fluentd's timeout was too short, we looked at the `request_timeout` parameter (originally 120s).

We raised it to 240s, but after a while `read timeout reached` was reported again. With 2400s, no error was reported for the time being, simply because the timeout was so long, yet the local log backlog kept growing instead of shrinking. We concluded that this parameter was not the cause and tried other approaches.
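A minimal sketch of the timeout setting, assuming `fluent-plugin-elasticsearch`: `request_timeout` is an output-level parameter, not part of the `<buffer>` section. The tag pattern is again a hypothetical placeholder.

```conf
<match **>
  @type elasticsearch
  # Raised from 120s during testing; a very large value (2400s)
  # only postponed the error, it did not drain the backlog
  request_timeout 240s
</match>
```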

5. Searching online, we found that similar problems had been solved by adding the following parameters. The first two were already set in our configuration; the last one was not:

- `reconnect_on_error true`
- `reload_on_failure true`
- `reload_connections false` (the default is `true`)

The plugin documentation describes `reload_connections` as follows: it adjusts how the elasticsearch-transport host reload feature works. By default, the host list is reloaded from the server every 10,000 requests to spread the load. This can be a problem if your Elasticsearch cluster is behind a reverse proxy, because the Fluentd process may not have direct access to the Elasticsearch nodes.

The ES Service (svc) that Fluentd uses in the cluster effectively acts as a reverse proxy in front of the Elasticsearch nodes. After adding `reload_connections false` to the Fluentd configuration and restarting, logs were written normally again and the problem was solved. The old platform worked without it because its Service forwarding was based on iptables, where iptables NAT rules translate the Service address to a pod IP address. The new platform uses IPVS throughout, and IPVS routes traffic directly to the corresponding pod IP, which is equivalent to a reverse proxy, so this parameter must be added.
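Putting the fix together, a minimal sketch of the output section, assuming `fluent-plugin-elasticsearch` and assuming the ES Service is reachable as `elasticsearch.logging.svc` on port 9200. That Service name is hypothetical; substitute your own Service address.

```conf
<match **>
  @type elasticsearch
  # Hypothetical Kubernetes Service address (the "reverse proxy")
  host elasticsearch.logging.svc
  port 9200
  # Already set before the fix
  reconnect_on_error true
  reload_on_failure true
  # The setting that resolved the issue: do not reload the host list
  # from the cluster every 10,000 requests
  reload_connections false
  <buffer>
    @type file
    path /var/log/td-agent/buffer/elasticsearch
  </buffer>
</match>
```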

Similar Posts:

- [Solved] Nginx proxy Timeout: upstream timed out (110: Connection timed out)

- Nginx Timeout Error: upstream timed out (110: Connection timed out) while reading response header from ups…

- [Solved] nginx upstream timed out (110 connection timed out)

- [Solved] When PostgreSQL writes data to Excel, there is a failure your, nginx error handling

- Elasticsearch Startup Error: main ERROR Unable to locate appender “rolling_old” for logger config “root”

- Springboot+Elasticsearch Error [How to Solve]

- [Solved] reason”: “Root mapping definition has unsupported parameters:

- Filebeat output redis i/o timeout [How to Solve]

- [ Elasticsearch-PHP] No alive nodes found in your cluster in

- Elasticsearch + kibana set user name and password to log in