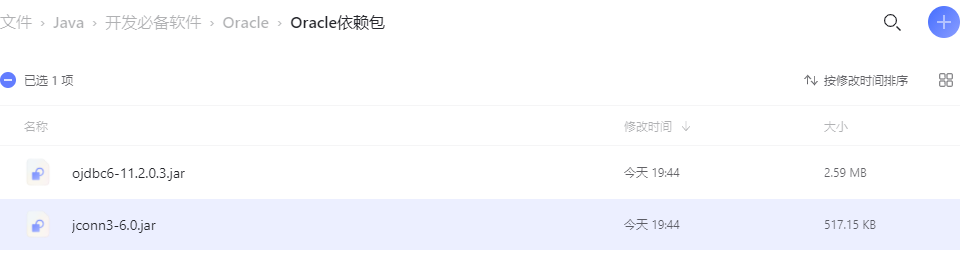

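Download the two jars online, or ask a colleague for them: the Oracle JDBC driver (`ojdbc6`) and the Sybase jConnect driver (`jconn3`). The commands below assume the files are named `ojdbc6-11.2.0.3.jar` and `jconn3-6.0.jar`; if your files have different names or versions, adjust the commands to match.

Put both jars in the same folder: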

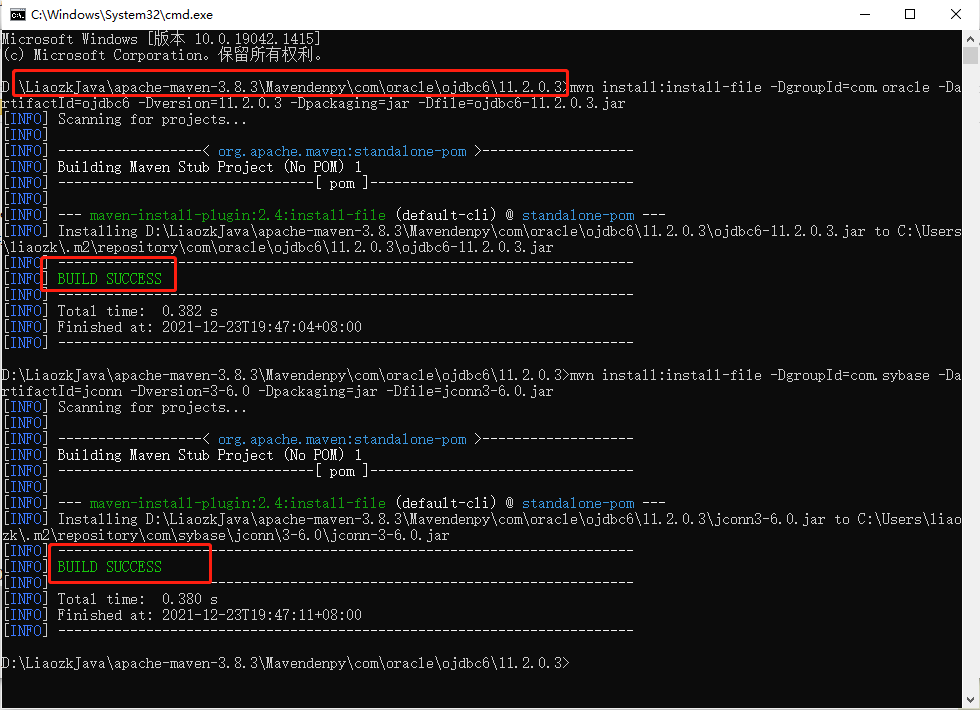

Open a command prompt in this folder: type `cmd` in the folder's address bar and press Enter to open the CMD console.

Enter the following two commands:

mvn install:install-file -DgroupId=com.oracle -DartifactId=ojdbc6 -Dversion=11.2.0.3 -Dpackaging=jar -Dfile=ojdbc6-11.2.0.3.jar

mvn install:install-file -DgroupId=com.sybase -DartifactId=jconn -Dversion=3-6.0 -Dpackaging=jar -Dfile=jconn3-6.0.jar

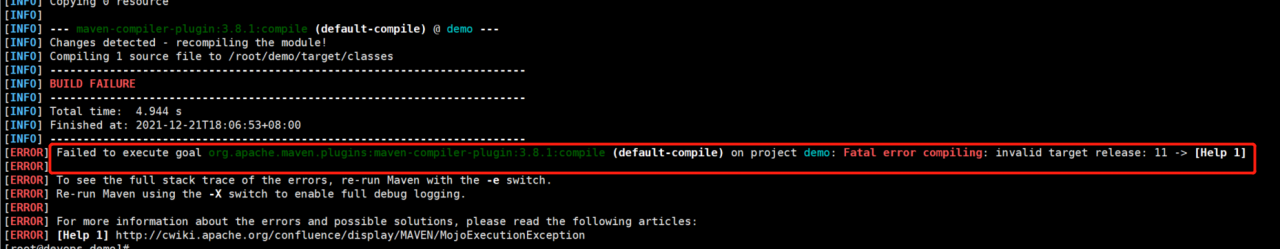

The output shown above means the jar was installed successfully. If the build fails, check that the version number, groupId, file name, and so on in the command match the actual files, then correct the command and run it again.

Note: If you have multiple Maven versions installed, check which one the current MAVEN_HOME points to (running `mvn -v` prints the Maven home in use). The command installs the jar into the local Maven repository, so if it lands in a repository other than the one your project uses, the installation will have no effect.

After installation, right-click the project and select Update Project.

Click OK, then run Maven install again. No errors should be reported.
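
For reference, the coordinates passed to `install:install-file` are the ones the project's `pom.xml` has to request. The fragment below is only a sketch: it assumes the project declares both drivers with exactly the groupId, artifactId, and version values used in the two commands above. If your `pom.xml` uses different values, change either the `pom.xml` or the install commands so the two agree.

```xml
<!-- Assumed pom.xml entries; the coordinates must match the install commands -->
<dependencies>
  <!-- Oracle JDBC driver installed with -DgroupId=com.oracle -DartifactId=ojdbc6 -->
  <dependency>
    <groupId>com.oracle</groupId>
    <artifactId>ojdbc6</artifactId>
    <version>11.2.0.3</version>
  </dependency>
  <!-- Sybase jConnect driver installed with -DgroupId=com.sybase -DartifactId=jconn -->
  <dependency>
    <groupId>com.sybase</groupId>
    <artifactId>jconn</artifactId>
    <version>3-6.0</version>
  </dependency>
</dependencies>
```

Maven resolves each dependency by looking for a matching path in the local repository, so a mismatch in any one of the three values produces the same "missing artifact" error as not installing the jar at all.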