Problem

Error message: undefined reference to `cv::imread(std::string const&, int)'

When this error appeared, I assumed something was wrong with how OpenCV was linked, so I kept trying different ways of including OpenCV and linking its libraries, but the same error always came back.

In the end, the problem turned out to be the C++ ABI.

Solution

The code contained #define _GLIBCXX_USE_CXX11_ABI 0, and that line caused the error. Once it was removed, the program linked and ran normally.

Reason

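#define _GLIBCXX_USE_CXX11_ABI 0 tells libstdc++ to use the old (pre-GCC 5) ABI for std::string and a few other library types, so the compiled code refers to the old symbols when it is linked.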

The libstdc++ (GCC's C++ standard library) released with GCC 5.1 added a new implementation of std::basic_string. The new implementation coexists with the old one under a different name: the new one is std::__cxx11::basic_string, while the old one keeps the name std::basic_string.

Newer GCC versions compile std::string as std::__cxx11::basic_string<char> by default. If a third-party library you call was built with the old ABI (for example, by a GCC older than 5.1), the std::string in its interface is actually the old std::basic_string<char>. The two types are not interchangeable, so the linker cannot match the symbols. #define _GLIBCXX_USE_CXX11_ABI 0 makes std::string in your own code compile with the old implementation so that it matches such a library.

In my case, however, OpenCV had been built with the new implementation, std::__cxx11::basic_string, so the macro was not needed. Setting it anyway produces exactly the error shown in the title.

Conversely, if the third-party library was built with the old implementation while your compiler is a newer GCC, you do need #define _GLIBCXX_USE_CXX11_ABI 0; otherwise you get undefined reference to `cv::imread(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, int)'.

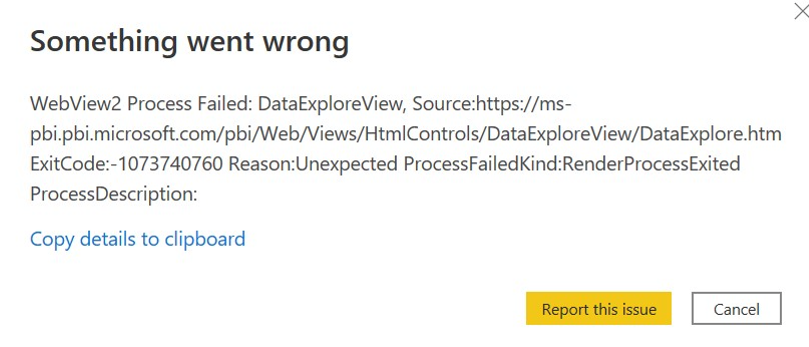