Problem Description:

After receiving four or five SMS messages of master-slave disconnection and recovery in one night, check the error information in the error log of MySQL (5.6) slave database in the production environment (master-slave architecture). Generally speaking, the slave database lost its connection with the master database, resulting in io thread reconnection.

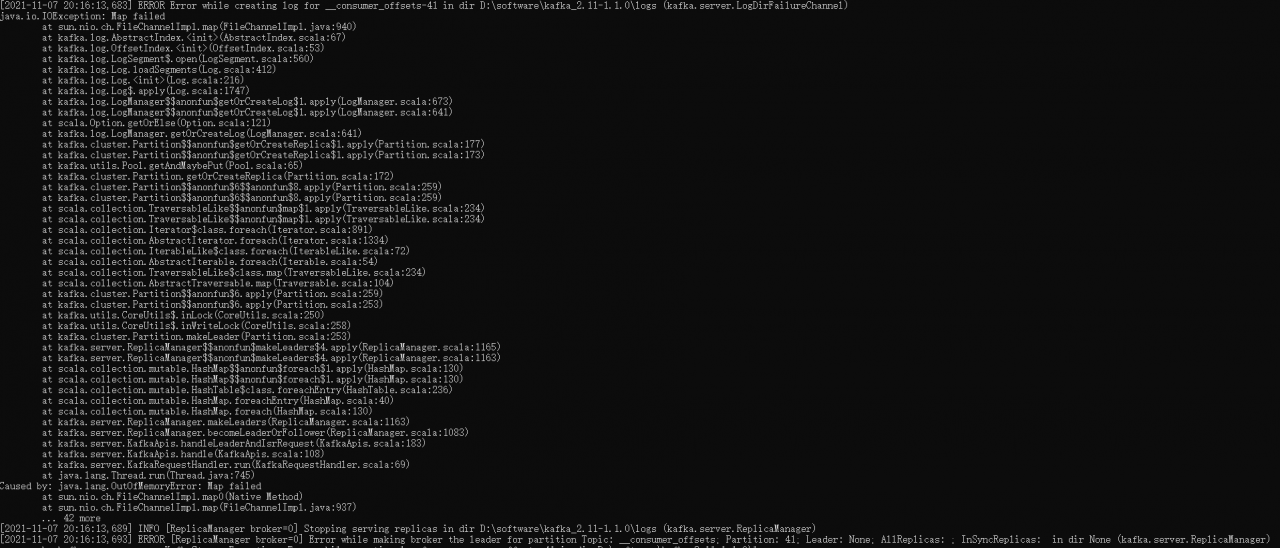

2019-12-02 03:46:44 47114 [ERROR] Error reading packet from server: Lost connection to MySQL server during query ( server_errno=2013)

2019-12-02 03:46:44 47114 [Note] Slave I/O thread: Failed reading log event, reconnecting to retry, log 'binlog.002295' at position 386140629

2019-12-02 03:46:44 47114 [Warning] Storing MySQL user name or password information in the master info repository is not secure and is therefore not recommended. Please consider using the USER and PASSWORD connection options for START SLAVE; see the 'START SLAVE Syntax' in the MySQL Manual for more information.

2019-12-02 03:46:54 47114 [ERROR] Slave I/O: error reconnecting to master 'repli@192.168.11.10:3306' - retry-time: 60 retries: 1, Error_code: 2013

2019-12-02 03:48:04 47114 [ERROR] Slave I/O: error reconnecting to master 'repli@192.168.11.10:3306' - retry-time: 60 retries: 2, Error_code: 2013

2019-12-02 03:49:14 47114 [ERROR] Slave I/O: error reconnecting to master 'repli@192.168.11.10:3306' - retry-time: 60 retries: 3, Error_code: 2013

2019-12-02 03:50:24 47114 [ERROR] Slave I/O: error reconnecting to master 'repli@192.168.11.10:3306' - retry-time: 60 retries: 4, Error_code: 2013

2019-12-02 03:51:34 47114 [ERROR] Slave I/O: error reconnecting to master 'repli@192.168.11.10:3306' - retry-time: 60 retries: 5, Error_code: 2013

2019-12-02 03:52:44 47114 [ERROR] Slave I/O: error reconnecting to master 'repli@192.168.11.10:3306' - retry-time: 60 retries: 6, Error_code: 2013

2019-12-02 03:53:54 47114 [ERROR] Slave I/O: error reconnecting to master 'repli@192.168.11.10:3306' - retry-time: 60 retries: 7, Error_code: 2013

2019-12-02 03:55:04 47114 [ERROR] Slave I/O: error reconnecting to master 'repli@192.168.11.10:3306' - retry-time: 60 retries: 8, Error_code: 2013

2019-12-02 03:56:06 47114 [Note] Slave: connected to master 'repli@192.168.11.10:3306',replication resumed in log 'binlog.002295' at position 386140629

2019-12-02 04:01:00 47114 [ERROR] Error reading packet from server: Lost connection to MySQL server during query ( server_errno=2013)

2019-12-02 04:01:00 47114 [Note] Slave I/O thread: Failed reading log event, reconnecting to retry, log 'binlog.002295' at position 45291556Query official documents:

B.4.2.3 Lost connection to MySQL server

There are three likely causes for this error message.

Usually it indicates network connectivity trouble and you should check the condition of your network if this error occurs frequently. If the error message includes “during query,” this is probably the case you are experiencing.

Sometimes the “during query” form happens when millions of rows are being sent as part of one or more queries. If you know that this is happening, you should try increasing net_read_timeout from its default of 30 seconds to 60 seconds or longer, sufficient for the data transfer to complete.

More rarely, it can happen when the client is attempting the initial connection to the server. In this case, if your connect_timeout value is set to only a few seconds, you may be able to resolve the problem by increasing it to ten seconds, perhaps more if you have a very long distance or slow connection. You can determine whether you are experiencing this more uncommon cause by using SHOW GLOBAL STATUS LIKE 'Aborted_connects'. It will increase by one for each initial connection attempt that the server aborts. You may see “reading authorization packet” as part of the error message; if so, that also suggests that this is the solution that you need.

If the cause is none of those just described, you may be experiencing a problem with BLOB values that are larger than max_allowed_packet, which can cause this error with some clients. Sometime you may see an ER_NET_PACKET_TOO_LARGE error, and that confirms that you need to increase max_allowed_packet. Check step by step according to official documents:

First of all, we can eliminate the network reasons, because it is a LAN, there is no problem in the network environment, and there is no firewall interception, etc;

Because the error message from the slave library contains during query, there is no other error message, such as reading authorization packet, The reason for this er_NET_PACKET_TOO_error should be as follows:

Sometimes the “during query” form happens when millions of rows are being sent as part of one or more queries. If you know that this is happening, you should try increasing net_read_timeout from its default of 30 seconds to 60 seconds or longer, sufficient for the data transfer to complete.

According to the official recommendation, the value of the parameter net_read_timeout can be increasedCombined with the scenario of master-slave replication, query binary logs and business personnel. The master database will generate many large transactions during this time period. Since the default time for reading data from the main library is 30 seconds (the default value of the net_read_timeout parameter), the slave library connection process will be closed after 30 seconds. Specifically, when reading a transaction from the IO thread of the library, the transaction may not be read in 30 seconds because the transaction is too large. However, the transaction from the database to the database is incomplete. It is considered that there is a problem with the connection to the primary database, so the primary database will be reconnected.

But after adjusting the net_read_ timeout = 900, the error will still appear!!

Therefore, it is assumed that when the master database sends data to the slave database, a large transaction cannot be sent within a limited time (net_write_timeout, default 30s), so the transaction cannot be read no matter how long it takes to read from the database.

Therefore, adjust the net of the main library_write_timeout=300

This error is not reported later.

There are two ways to change the value:

1、Use set GLOBAL command. For example: set GLOBAL net_write_timeout=120;

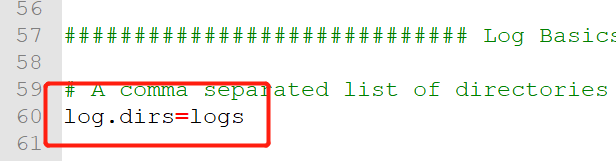

2、Modify the parameter value in the configuration file of Mysql: net_write_timeout=120

After that, restart the database service.