Connecting to hiveserver2 fails with the following error: Error: Could not open client transport with JDBC Uri: jdbc:hive2://hadoop01:10000: java.net.ConnectException: Connection refused (Connection refused) (state=08S01,code=0)

1. Check whether the hiveserver2 service is started

[root@hadoop01 ~]# jps

5101 RunJar # started normally
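
A RunJar entry in jps only shows that a Hive process exists; it does not prove that port 10000 is accepting connections yet. The following is a minimal sketch of a port check, assuming bash and the coreutils timeout command are available. The function name port_open is illustrative and not part of Hive.

```bash
# Return 0 if HOST:PORT accepts a TCP connection, non-zero otherwise.
# Assumes bash (for /dev/tcp) and coreutils `timeout` are installed.
port_open() {
  local host
  local port
  local wait_secs
  host=$1
  port=$2
  wait_secs=${3:-3}
  if [ -z "$host" ] || [ -z "$port" ]; then
    echo "usage: port_open HOST PORT [TIMEOUT_SECONDS]" >&2
    return 2
  fi
  # bash treats /dev/tcp/HOST/PORT as a TCP connection attempt;
  # the child shell exits as soon as the connect succeeds or fails.
  timeout "$wait_secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}
```

For the setup in this post the call would be port_open hadoop01 10000. If it keeps failing while RunJar is listed, hiveserver2 may still be starting, or it may be bound to a different host or port.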

2. Check whether Hadoop safe mode is off

[root@hadoop01 ~]# hdfs dfsadmin -safemode get

Safe mode is OFF # normal

If safe mode is ON, see https://www.cnblogs.com/-xiaoyu-/p/11399287.html

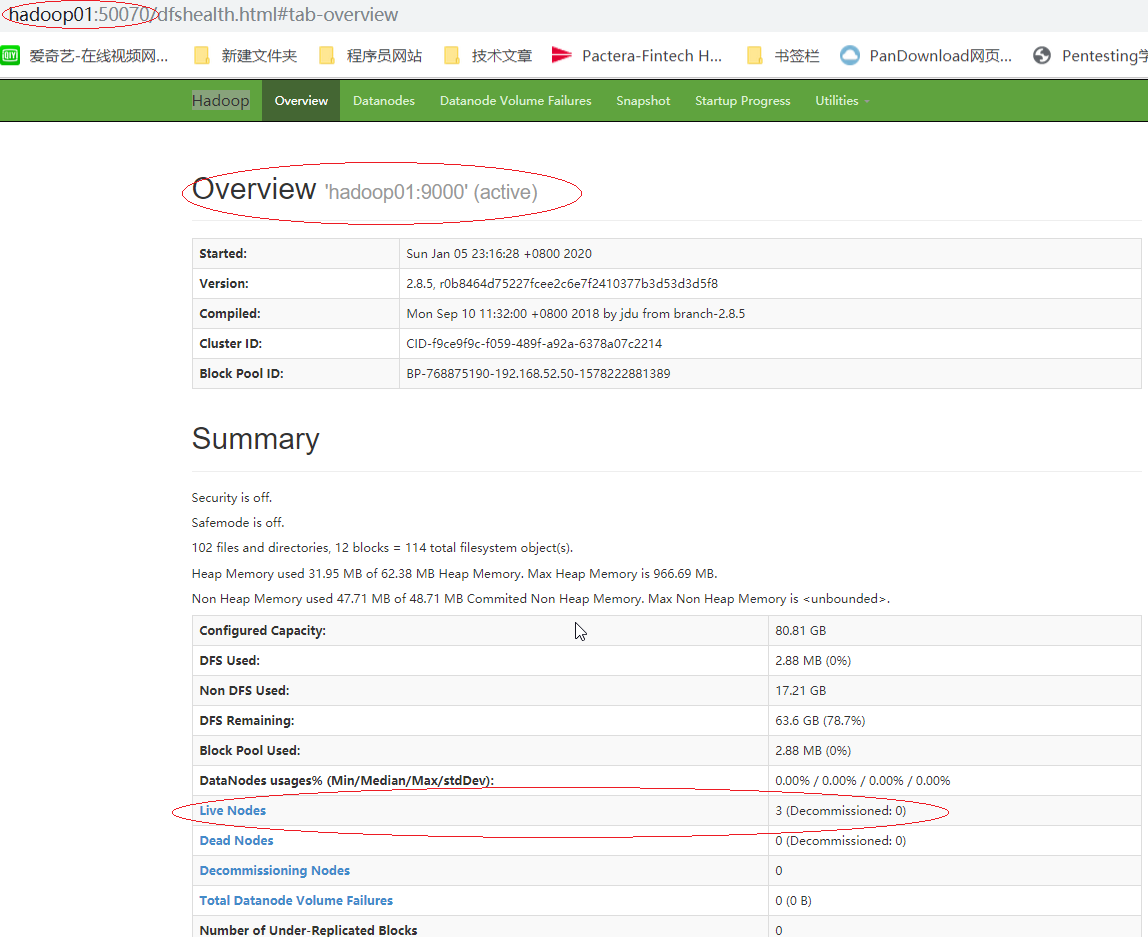
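
The safe mode check can also be scripted. This sketch classifies the text printed by hdfs dfsadmin -safemode get; the function name safemode_state is made up for illustration, and the matching relies only on the "Safe mode is ON/OFF" wording shown above.

```bash
# Classify the output of `hdfs dfsadmin -safemode get`.
# Prints "on", "off", or "unknown" (for any text that has neither phrase).
safemode_state() {
  case "$1" in
    *"Safe mode is ON"*)  echo on ;;
    *"Safe mode is OFF"*) echo off ;;
    *)                    echo unknown ;;
  esac
}
```

It would be called as safemode_state "$(hdfs dfsadmin -safemode get)". If the result is on and the NameNode does not leave safe mode by itself, hdfs dfsadmin -safemode leave forces it out.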

3. Open http://hadoop01:50070/ in a browser to check that the Hadoop cluster started normally

4. Check whether MySQL service is started

[root@hadoop01 ~]# service mysqld status

Redirecting to /bin/systemctl status mysqld.service

● mysqld.service - MySQL 8.0 database server

Loaded: loaded (/usr/lib/systemd/system/mysqld.service; disabled; vendor preset: disabled)

Active: active (running) since Sun 2020-01-05 23:30:18 CST; 8min ago

Process: 5463 ExecStartPost=/usr/libexec/mysql-check-upgrade (code=exited, status=0/SUCCESS)

Process: 5381 ExecStartPre=/usr/libexec/mysql-prepare-db-dir mysqld.service (code=exited, status=0/SUCCESS)

Process: 5357 ExecStartPre=/usr/libexec/mysql-check-socket (code=exited, status=0/SUCCESS)

Main PID: 5418 (mysqld)

Status: "Server is operational"

Tasks: 46 (limit: 17813)

Memory: 512.5M

CGroup: /system.slice/mysqld.service

└─5418 /usr/libexec/mysqld --basedir=/usr

Jan 05 23:29:55 hadoop01 systemd[1]: Starting MySQL 8.0 database server...

Jan 05 23:30:18 hadoop01 systemd[1]: Started MySQL 8.0 database server.

The line "Active: active (running) since Sun 2020-01-05 23:30:18 CST; 8min ago" means MySQL started normally.

If it is not running, start it with service mysqld start.
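
The same check can be done without reading the whole status output by eye. This is a small sketch that looks for the "Active: active (running)" line in the status text; the function name mysql_running is hypothetical.

```bash
# Return 0 if the given `service mysqld status` output reports a running service.
mysql_running() {
  printf '%s\n' "$1" | grep -qF 'Active: active (running)'
}
```

A possible use is mysql_running "$(service mysqld status 2>&1)" || service mysqld start, which starts MySQL only when the status text does not report it as running.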

Note:

Connect to the MySQL server with a local MySQL client to confirm that it accepts connections (this is just a check).

If you can't connect, do the following:

Configure MySQL so that the root user can log in with a password from any host.

1. Enter mysql

[root@hadoop102 mysql-libs]# mysql -uroot -p000000

2. Show the database

mysql>show databases;

3. Use mysql database

mysql>use mysql;

4. Show all tables in the mysql database

mysql>show tables;

5. Show the structure of the user table

mysql>desc user;

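6. Query the user table (on MySQL 5.7 and later the Password column no longer exists; select authentication_string instead)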

mysql>select User, Host, Password from user;

7. Modify the user table, changing the Host column to %

mysql>update user set host='%' where host='localhost';

8. Delete the root user's other host entries

mysql>delete from user where Host='hadoop102';

mysql>delete from user where Host='127.0.0.1';

mysql>delete from user where Host='::1';

9. Flush privileges

mysql>flush privileges;

10. Quit

mysql>quit;

Check that the MySQL JDBC driver jar (from mysql-connector-java-5.1.27.tar.gz) is in /root/servers/hive-apache-2.3.6/lib, and check the connection URL in hive-site.xml:

<value>jdbc:mysql://hadoop01:3306/hive?createDatabaseIfNotExist=true</value>

Check that MySQL has the database named in the URL above (hive). If it does not, see step 7.

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| hive |

| information_schema |

| mysql |

| performance_schema |

| sys |

+--------------------+

5 rows in set (0.01 sec)

The name after 3306/ (here hive) is the metastore database. You can choose a different name, for example:

<value>jdbc:mysql://hadoop01:3306/metastore?createDatabaseIfNotExist=true</value>
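
To see which database name a given connection URL points at (and so which name to look for in show databases), the URL can be split with plain shell parameter expansion. This is only a sketch; the helper name jdbc_db_name is invented here, and it assumes the URL has the jdbc:mysql://host:port/database?options shape shown above.

```bash
# Print the database name from a jdbc:mysql://host:port/db?options URL.
jdbc_db_name() {
  local rest
  rest=${1#*://}      # drop "jdbc:mysql://"  -> host:port/db?options
  rest=${rest#*/}     # drop "host:port/"     -> db?options
  printf '%s\n' "${rest%%[?]*}"   # drop "?options" if present
}
```

For example, jdbc_db_name 'jdbc:mysql://hadoop01:3306/metastore?createDatabaseIfNotExist=true' prints metastore.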

5. See if the Hadoop configuration file core-site.xml has the following configuration

<property>

<name>hadoop.proxyuser.root.hosts</name> <!-- root is the current Linux user; mine is root -->

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

If you run as a different Linux user, for example xiaoyu, the configuration is as follows:

<property>

<name>hadoop.proxyuser.xiaoyu.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.xiaoyu.groups</name>

<value>*</value>

</property>
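
Since the two properties differ only in the user name, they can be generated rather than typed. The sketch below prints the pair for any user; proxyuser_props is a made-up helper name, and the output still has to be pasted into core-site.xml by hand.

```bash
# Print the two hadoop.proxyuser.* properties for the given Linux user.
proxyuser_props() {
  local user
  user=$1
  cat <<EOF
<property>
  <name>hadoop.proxyuser.${user}.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.${user}.groups</name>
  <value>*</value>
</property>
EOF
}
```

Running proxyuser_props "$(whoami)" as the user that starts hiveserver2 prints the block for that user. Hadoop needs to be restarted after core-site.xml changes.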

6. Other issues

HDFS file permission issues: disable permission checking in hdfs-site.xml

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

7. org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version.

schematool -dbType mysql -initSchema

8. Don't download the wrong package

Apache hive-2.3.6 download address: http://mirror.bit.edu.cn/apache/hive/hive-2.3.6/

Index of /apache/hive/hive-2.3.6

apache-hive-2.3.6-bin.tar.gz  23-Aug-2019 02:53  221M (download this)

apache-hive-2.3.6-src.tar.gz  23-Aug-2019 02:53  20M

9. Important

If everything above checks out and the connection still fails, use jps to list the running processes, stop them all, reboot the machine, and then, in this order:

Start ZooKeeper (if any)

Start the Hadoop cluster

Start the MySQL service

Start hiveserver2

Connect with beeline
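
The restart order above can be written down as a checklist. This sketch only prints the commands and does not run them. It assumes the standard Hadoop and ZooKeeper scripts (zkServer.sh, start-dfs.sh, start-yarn.sh) are on the PATH, and the function name restart_plan is illustrative; adjust the commands to however your cluster is actually started.

```bash
# Print the restart sequence as a dry-run checklist (nothing is executed).
# Arguments: HiveServer2 host and port, defaulting to this post's values.
# Skip the zkServer.sh line if ZooKeeper is not used; start-yarn.sh is
# normally run on the ResourceManager host (hadoop02 in the config below).
restart_plan() {
  local host
  local port
  host=${1:-hadoop01}
  port=${2:-10000}
  cat <<EOF
zkServer.sh start
start-dfs.sh
start-yarn.sh
service mysqld start
nohup hiveserver2 > hiveserver2.log 2>&1 &
beeline -u jdbc:hive2://${host}:${port}
EOF
}
```

hiveserver2 can take a while to open its port after it is launched, so a "Connection refused" from beeline right after starting it does not necessarily mean the configuration is wrong.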

The configuration files are as follows. They are for reference only; adjust them to your actual setup.

hive-site.xml

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://hadoop01:3306/hive?createDatabaseIfNotExist=true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>12345678</value>

</property>

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

</property>

<property>

<name>hive.cli.print.header</name>

<value>true</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>hadoop01</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>datanucleus.schema.autoCreateAll</name>

<value>true</value>

</property>

<!--

<property>

<name>hive.metastore.uris</name>

<value>thrift://node03.hadoop.com:9083</value>

</property>

-->

</configuration>

core-site.xml

<configuration>

<!-- Specify the address of the NameNode in HDFS -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop01:9000</value>

</property>

<!-- Specify the storage directory for files generated by the Hadoop runtime -->

<property>

<name>hadoop.tmp.dir</name>

<value>/root/servers/hadoop-2.8.5/data/tmp</value>

</property>

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

</configuration>

hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- Secondary NameNode host (the third machine) -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>hadoop03:50090</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>

mapred-site.xml

<configuration>

<!-- Specify MR to run on Yarn -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- JobHistory server address (the third machine) -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>hadoop03:10020</value>

</property>

<!-- JobHistory server web UI address -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>hadoop03:19888</value>

</property>

</configuration>

yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<!-- The way the Reducer gets its data -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Address of YARN's ResourceManager (the second machine) -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop02</value>

</property>

<!-- Log aggregation feature enabled -->

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<!-- Log retention time set to 7 days -->

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>604800</value>

</property>

</configuration>
