Solution for "Call to localhost/127.0.0.1:9000 failed on connection exception: java.net.ConnectException"

When starting Hadoop, the following error occurred:

```
Call From java.net.UnknownHostException: ubuntu-larntin: ubuntu-larntin to localhost:9000 failed on connection exception: java.net.ConnectException: Connection refused;
```

The DataNode and YARN daemons start normally; only the NameNode keeps shutting down.
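Both parts of that message can be checked on their own before touching Hadoop: whether the machine's own hostname (here `ubuntu-larntin`) resolves, and whether anything accepts TCP connections on `localhost:9000`, the NameNode address that the Java program later in this post also uses. The following is a minimal diagnostic sketch that uses only the JDK; the class `NameNodeReachability` and its method names are hypothetical, and `localhost:9000` is assumed to be the NameNode RPC address.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.UnknownHostException;

public class NameNodeReachability {

    // Returns true if a TCP connection to host:port succeeds within timeoutMs.
    // "Connection refused" (or a timeout) means nothing accepted the connection.
    public static boolean isListening(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    // Returns true if the machine's own hostname resolves to an address.
    // InetAddress.getLocalHost() throws UnknownHostException when it does not.
    public static boolean localHostnameResolves() {
        try {
            InetAddress.getLocalHost();
            return true;
        } catch (UnknownHostException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumption: the NameNode RPC address is localhost:9000 (fs.defaultFS).
        System.out.println("Local hostname resolves: " + localHostnameResolves());
        System.out.println("localhost:9000 accepting connections: "
                + isListening("localhost", 9000, 2000));
    }
}
```

If the port check prints `false` and `jps` lists no `NameNode`, the refusal simply means that no NameNode process is listening, which matches the symptom described here.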

The solution is as follows:

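```
wrr@ubuntu:~$ hadoop namenode -format
```

After that, restart the cluster. Here the NameNode is started again from the `sbin` directory, and `jps` confirms that it is running: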

```
wrr@ubuntu:~$ cd /home/wrr/java/hadoop-2.7.6/sbin
wrr@ubuntu:~/java/hadoop-2.7.6/sbin$ ./hadoop-daemon.sh start namenode
starting namenode, logging to /home/wrr/java/hadoop-2.7.6/logs/hadoop-wrr-namenode-ubuntu.out
wrr@ubuntu:~/java/hadoop-2.7.6/sbin$ jps
8307 DataNode
9317 NameNode
7431
9352 Jps
8476 ResourceManager
```

After that, run the program in Eclipse again, and it works.
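Instead of reading the `jps` listing by eye, a small helper can check it for the daemons that should be running. This is a sketch that assumes the JDK's `jps` tool is on the `PATH`; the class `JpsCheck` and its methods are hypothetical. Each `jps` line has the form `<pid> <MainClass>`; a line that contains only a PID (like `7431` above) carries no name, so the parser ignores it.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;
import java.util.stream.Collectors;

public class JpsCheck {

    // Parses `jps` output ("<pid> <MainClass>" per line) into the set of main-class names.
    // Lines that contain only a PID are skipped.
    public static Set<String> runningDaemons(String jpsOutput) {
        Set<String> names = new HashSet<>();
        for (String line : jpsOutput.split("\\R")) {
            String[] parts = line.trim().split("\\s+", 2);
            if (parts.length == 2) {
                names.add(parts[1].trim());
            }
        }
        return names;
    }

    // Runs `jps` (assumed to be on the PATH) and returns its combined output.
    static String runJps() throws IOException, InterruptedException {
        Process p = new ProcessBuilder("jps").redirectErrorStream(true).start();
        String out;
        try (BufferedReader r = new BufferedReader(
                new InputStreamReader(p.getInputStream(), StandardCharsets.UTF_8))) {
            out = r.lines().collect(Collectors.joining("\n"));
        }
        p.waitFor();
        return out;
    }

    public static void main(String[] args) throws Exception {
        Set<String> running = runningDaemons(runJps());
        for (String daemon : Arrays.asList("NameNode", "DataNode", "ResourceManager")) {
            System.out.println(daemon + (running.contains(daemon) ? " is running" : " is NOT running"));
        }
    }
}
```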

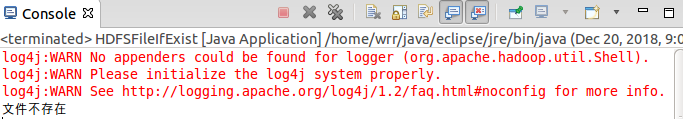

Then create a new Java file that checks whether a file exists in HDFS:

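```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class HDFSFileIfExist {
    public static void main(String[] args) {
        try {
            // Relative path: resolved against the HDFS working directory
            String fileName = "test";
            Configuration conf = new Configuration();
            // NameNode address; should match fs.defaultFS in core-site.xml
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            // Explicitly map the hdfs:// scheme to its FileSystem implementation
            conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
            FileSystem fs = FileSystem.get(conf);
            if (fs.exists(new Path(fileName))) {
                System.out.println("File Exists");
            } else {
                System.out.println("File Does Not Exist");
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

The program checks whether the file `test` exists in HDFS. Because `test` is a relative path, it is resolved against the user's HDFS home directory (`/user/<username>` by default), not against the local `hadoop-2.7.6` directory.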
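A relative HDFS path is qualified against the default file system and the client's working directory, which defaults to `/user/<username>`. The sketch below mimics that rule with plain `java.net.URI` rather than Hadoop's `Path` class, so it runs without Hadoop on the classpath. The class `RelativePathDemo`, its `qualify` method, and the user name `wrr` (taken from the shell prompt) are illustrative assumptions.

```java
import java.net.URI;

public class RelativePathDemo {

    // Qualifies a path the way a relative HDFS path is resolved:
    // against the default file system plus the working directory.
    // Assumption: the working directory is the HDFS home directory /user/<user>.
    public static URI qualify(String defaultFs, String user, String path) {
        // Trailing slash so that relative paths resolve *inside* the directory
        URI workingDir = URI.create(defaultFs + "/user/" + user + "/");
        return workingDir.resolve(path);
    }

    public static void main(String[] args) {
        System.out.println(qualify("hdfs://localhost:9000", "wrr", "test"));  // relative
        System.out.println(qualify("hdfs://localhost:9000", "wrr", "/test")); // absolute
    }
}
```

Running `main` prints `hdfs://localhost:9000/user/wrr/test` followed by `hdfs://localhost:9000/test`, so to check a file at the HDFS root instead, pass an absolute path such as `/test` to `new Path(...)`.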

Similar Posts:

- JAVA api Access HDFS Error: Permission denied in production environment

- Hadoop Connect hdfs Error: could only be replicated to 0 nodes instead of minReplication (=1).

- [Solved] HDFS Failed to Start NameNode Error: Premature EOF from inputStream; Failed to load FSImage file, see error(s) above for more info

- [Solved] Exception in thread “main” java.net.ConnectException: Call From

- [Solved] HDFS Error: org.apache.hadoop.security.AccessControlException: Permission denied

- HDFS: How to Operate API (Example)

- Namenode Initialize Error: java.lang.IllegalArgumentException: URI has an authority component

- “Execution error, return code 1 from org.apache.hadoop.hive.ql.exec.MoveTask” error occurred when Hive imported data locally

- Hadoop command error: permission problem [How to Solve]

- [Solved] hadoop:hdfs.DFSClient: Exception in createBlockOutputStream