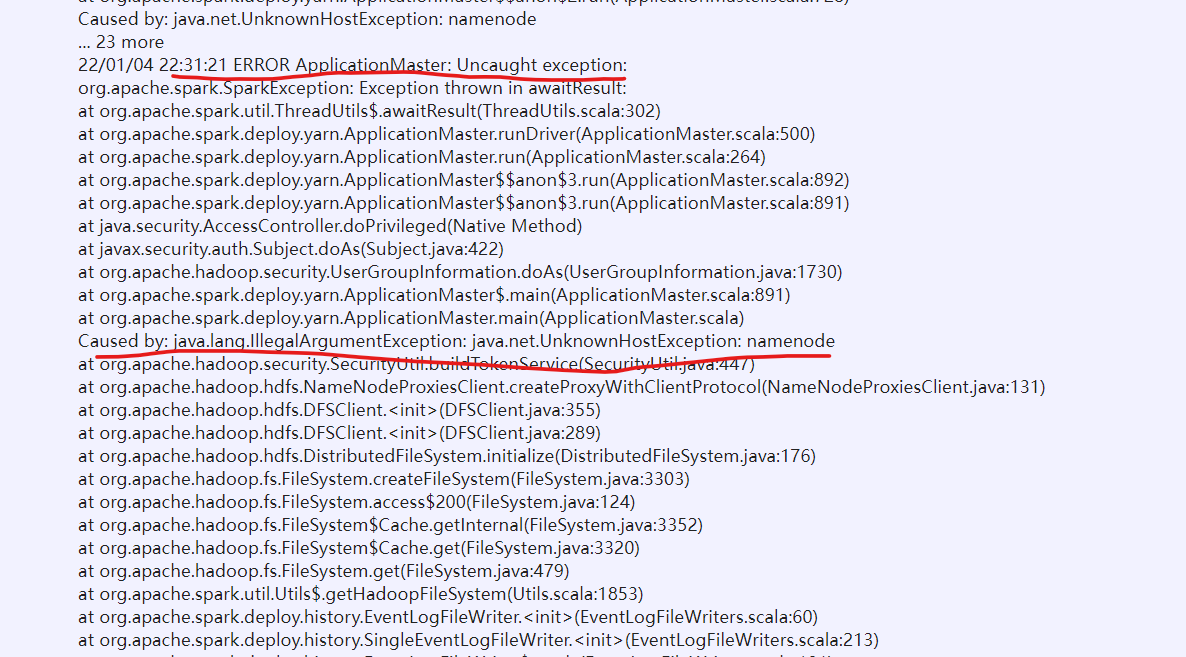

When starting spark-shell with Spark on YARN, the following error appears:

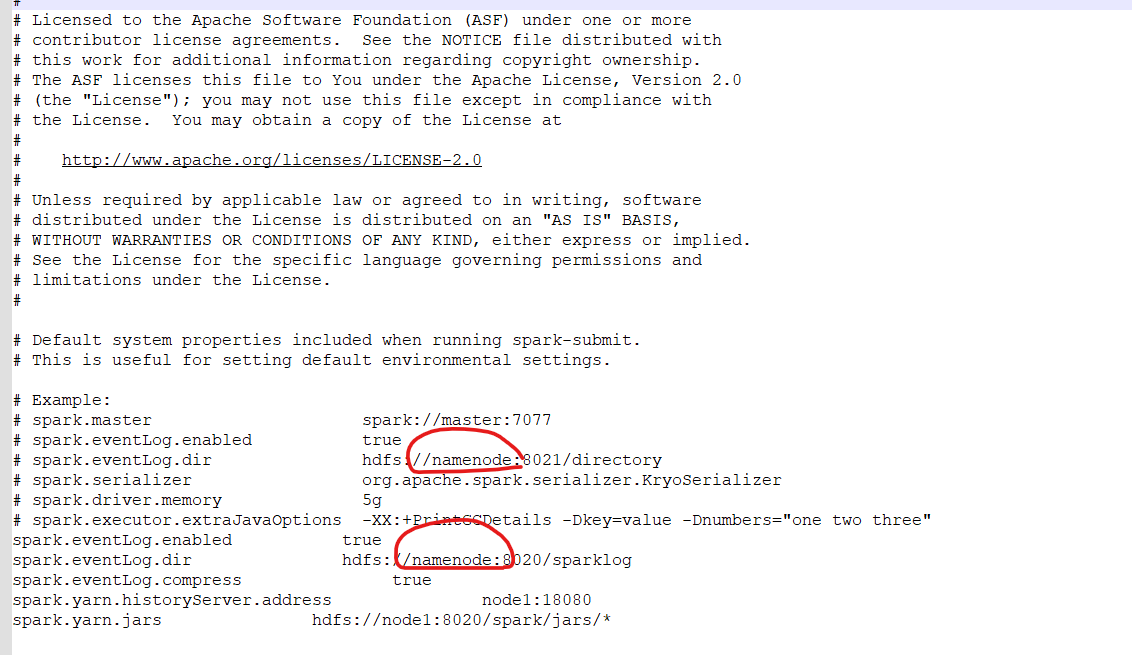

The host named namenode cannot be found, so there is most likely a mistake in a configuration file.

After checking, it turned out that the spark-defaults.conf file was configured incorrectly. While configuring it, I copied the contents of the file above directly and forgot to change the hostname to node1. So be careful when configuring.

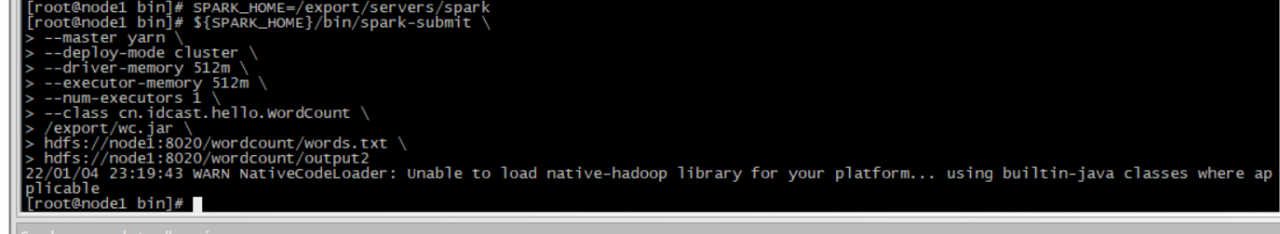
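A leftover hostname like this is easy to catch with a small script. Below is a minimal sketch in Python, assuming the entries in spark-defaults.conf are written as `key value` or `key=value` and that the hostnames appear inside URIs such as `hdfs://host:port/path`. The sample properties (`spark.eventLog.dir`, `spark.yarn.jars`), the port 8020, and the paths are made up for illustration and are not taken from my real file. The helper names `hosts_in_spark_conf` and `unknown_hosts` are also hypothetical.

```python
import re
from urllib.parse import urlparse


def hosts_in_spark_conf(conf_text):
    """Return {property: host} for every value that parses as a URI with a host."""
    found = {}
    for line in conf_text.splitlines():
        line = line.strip()
        # Skip blank lines and comments.
        if not line or line.startswith("#"):
            continue
        # Split on the first run of whitespace or '=' (assumed separators).
        parts = re.split(r"[\s=]+", line, maxsplit=1)
        if len(parts) != 2:
            continue
        key, value = parts
        host = urlparse(value.strip()).hostname
        if host:
            found[key] = host
    return found


def unknown_hosts(conf_text, known_hosts):
    """Return the properties whose host is not one of the cluster's known hosts."""
    return {
        key: host
        for key, host in hosts_in_spark_conf(conf_text).items()
        if host not in known_hosts
    }


# Hypothetical sample: one entry still carries the copied hostname.
SAMPLE_CONF = """
# illustrative entries only
spark.master            yarn
spark.eventLog.enabled  true
spark.eventLog.dir      hdfs://namenode:8020/spark-logs
spark.yarn.jars         hdfs://node1:8020/spark-jars/*.jar
"""

if __name__ == "__main__":
    for key, host in unknown_hosts(SAMPLE_CONF, {"node1"}).items():
        print(f"{key} points at unknown host: {host}")
```

To check a real file, read its contents with `open(path).read()` and pass in the set of hostnames that actually exist in the cluster. Any property that is reported still points at a host copied from somewhere else.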

After the change, the problem was completely solved.

I’ve been making a lot of basic mistakes lately. It’s rough 🤦