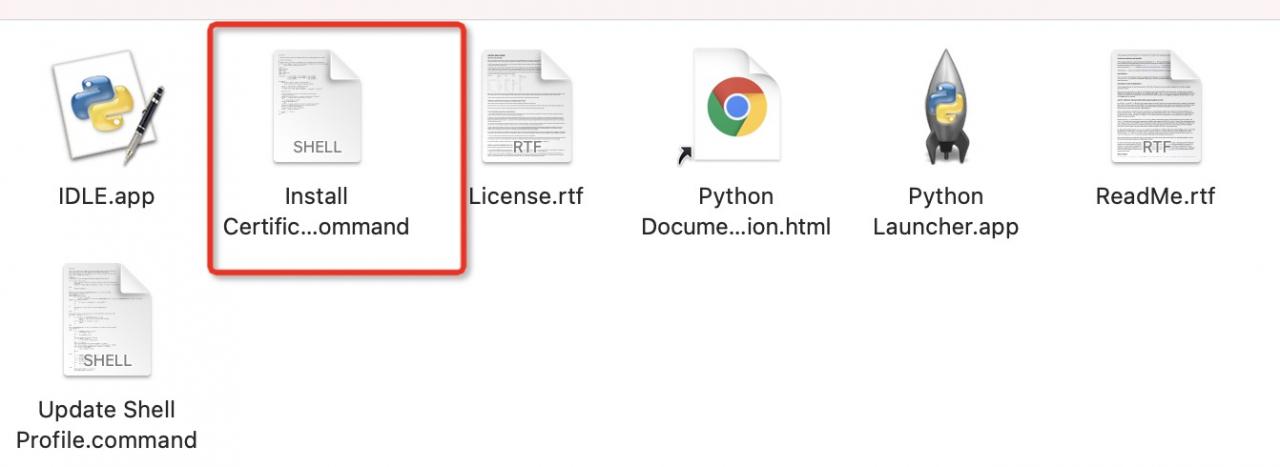

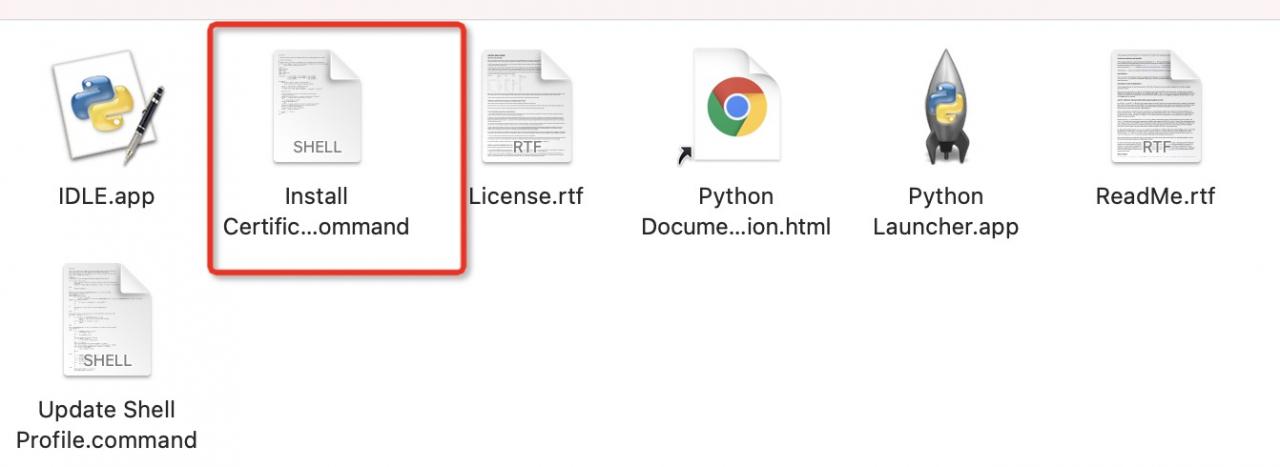

    urllib.error.URLError: <urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:xxx)>

To fix this error, find the Python installation folder, then install the files shown in the screenshots above.
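When certificate verification fails, it can help to check where Python looks for CA certificates and to build a verifying context explicitly. The following is a minimal sketch using only the standard library; the helper names `make_verified_context` and `fetch`, and the optional `cafile` path, are assumptions made for illustration and are not part of the original fix.

```python
import ssl
import urllib.request


def make_verified_context(cafile=None):
    """Return an SSL context that verifies server certificates.

    cafile is a hypothetical path to a PEM bundle of CA certificates;
    None means "use the default trust store".
    """
    return ssl.create_default_context(cafile=cafile)


def fetch(url, cafile=None):
    """Fetch url with certificate verification enabled (hypothetical helper)."""
    context = make_verified_context(cafile)
    with urllib.request.urlopen(url, context=context) as response:
        return response.read()


# Show where this Python build expects to find CA certificates.
paths = ssl.get_default_verify_paths()
print(paths.cafile, paths.capath)

# The default context requires a valid certificate and a matching hostname.
context = make_verified_context()
print(context.verify_mode == ssl.CERT_REQUIRED, context.check_hostname)
```

If the printed locations are empty or point to files that do not exist, the interpreter has no CA certificates to verify against, which is one possible cause of this error.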


Python 3:

    import urllib.request

    from bs4 import BeautifulSoup

    # Fetch the page and strip the HTML tags, keeping only the text
    response = urllib.request.urlopen("http://php.net/")
    html = response.read()
    soup = BeautifulSoup(html, "html5lib")
    text = soup.get_text(strip=True)
    print(text)

The code is very simple: it fetches the content of the http://php.net/ page, then uses the BeautifulSoup module to strip out the HTML tags and keep only the text. It appeared to succeed the first time, but after that it kept hanging and then reported another error:

      File "C:\Python36\lib\urllib\request.py", line 504, in _call_chain
        result = func(*args)
      File "C:\Python36\lib\urllib\request.py", line 1361, in https_open
        context=self._context, check_hostname=self._check_hostname)
      File "C:\Python36\lib\urllib\request.py", line 1320, in do_open
        raise URLError(err)
    urllib.error.URLError: <urlopen error EOF occurred in violation of protocol (_ssl.c:841)>

Yet the same page opens without any problem in Google Chrome.

This problem may occur because SSLv2 is disabled on the web server, while older Python 2.x libraries try to establish the connection with PROTOCOL_SSLv23 by default. In that case, you need to choose the SSL version to use explicitly.
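For a script that uses `urllib` from the standard library, as the one above does, one way to pin the protocol version is to pass an `ssl.SSLContext` to `urlopen`. This is only a sketch under stated assumptions: it needs Python 3.7 or later for `ssl.TLSVersion`, the helper names `make_tls_context` and `fetch` are hypothetical, and TLS 1.2 is picked as an example floor rather than taken from the original text.

```python
import ssl
import urllib.request


def make_tls_context(minimum=ssl.TLSVersion.TLSv1_2):
    """Return a verifying context that refuses protocols older than `minimum`."""
    context = ssl.create_default_context()
    context.minimum_version = minimum
    return context


def fetch(url, context, timeout=10):
    """Fetch url using the given SSL context (hypothetical helper)."""
    with urllib.request.urlopen(url, context=context, timeout=timeout) as response:
        return response.read()


context = make_tls_context()
print(context.minimum_version)

# Example call, left commented out because it needs network access:
# html = fetch("https://php.net/", context)
```

Setting a minimum version instead of forcing a single protocol lets the client and server still negotiate the newest version that both support.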

With requests, changing the SSL version used for HTTPS means subclassing the HTTPAdapter class and mounting the subclass on a Session object. For example, to force the use of TLSv1, the new transport adapter looks like this:

    import ssl

    from requests.adapters import HTTPAdapter
    from requests.packages.urllib3.poolmanager import PoolManager

    class MyAdapter(HTTPAdapter):
        def init_poolmanager(self, connections, maxsize, block=False):
            # Build the connection pool with a fixed protocol version
            self.poolmanager = PoolManager(num_pools=connections,
                                           maxsize=maxsize,
                                           block=block,
                                           ssl_version=ssl.PROTOCOL_TLSv1)

Then mount it on a requests Session object:

    import requests

    s = requests.Session()
    s.mount('https://', MyAdapter())
    # Send the request through the session so the mounted adapter is actually used
    response = s.get("http://php.net/")

It is also easy to write a general-purpose transport adapter that accepts any protocol constant from the ssl module in its constructor and uses it:

    from requests.adapters import HTTPAdapter
    from requests.packages.urllib3.poolmanager import PoolManager

    class SSLAdapter(HTTPAdapter):
        '''An HTTPS Transport Adapter that uses an arbitrary SSL version.'''

        def __init__(self, ssl_version=None, **kwargs):
            # Store the version first: HTTPAdapter.__init__ calls init_poolmanager
            self.ssl_version = ssl_version
            super(SSLAdapter, self).__init__(**kwargs)

        def init_poolmanager(self, connections, maxsize, block=False):
            self.poolmanager = PoolManager(num_pools=connections,
                                           maxsize=maxsize,
                                           block=block,
                                           ssl_version=self.ssl_version)

The failing code from above, after modification:

    import ssl

    import requests
    from bs4 import BeautifulSoup
    from requests.adapters import HTTPAdapter
    from requests.packages.urllib3.poolmanager import PoolManager

    class MyAdapter(HTTPAdapter):
        def init_poolmanager(self, connections, maxsize, block=False):
            # Force TLSv1 for HTTPS connections made through this adapter
            self.poolmanager = PoolManager(num_pools=connections,
                                           maxsize=maxsize,
                                           block=block,
                                           ssl_version=ssl.PROTOCOL_TLSv1)

    s = requests.Session()
    s.mount('https://', MyAdapter())

    # Fetch the page through the session so the mounted adapter is used
    response = s.get("http://php.net/")
    html = response.content
    soup = BeautifulSoup(html, "html5lib")
    text = soup.get_text(strip=True)
    print(text)

With this change, the script retrieves the page text normally.