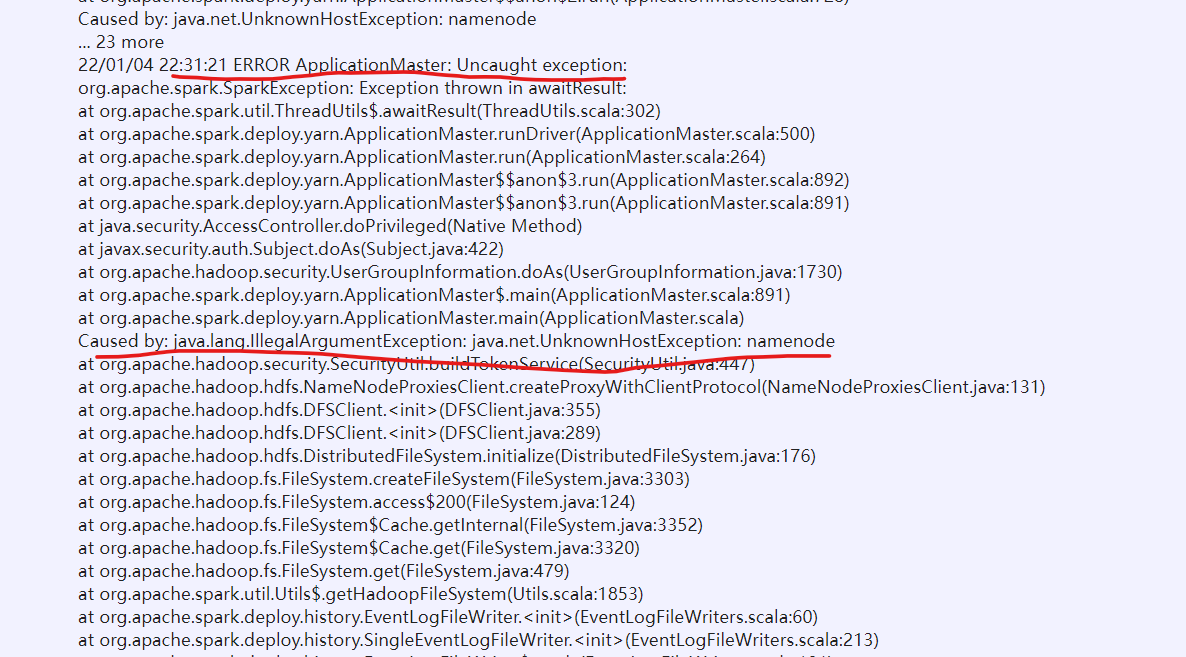

When starting spark-shell with Spark on YARN, the following error appeared:

The host named namenode cannot be found, which suggests a mistake in one of the configuration files.
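Before digging through the configs, it can help to confirm that the name really does not resolve on the machine running spark-shell. Below is a minimal Python sketch, assuming Python 3 is available on that node. The function name `resolves` is my own. It only asks the local resolver (/etc/hosts, DNS, etc.), so a YARN container running on another node could still see a different result.

```python
import socket


def resolves(hostname: str) -> bool:
    """Return True if the local resolver (/etc/hosts, DNS, ...) can map hostname to an address."""
    try:
        socket.getaddrinfo(hostname, None)
        return True
    except OSError:  # socket.gaierror is a subclass of OSError
        return False
```

For example, if `resolves("namenode")` returns `False` while the cluster's real master hostname returns `True`, a stale hostname somewhere in the configuration is the likely culprit.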

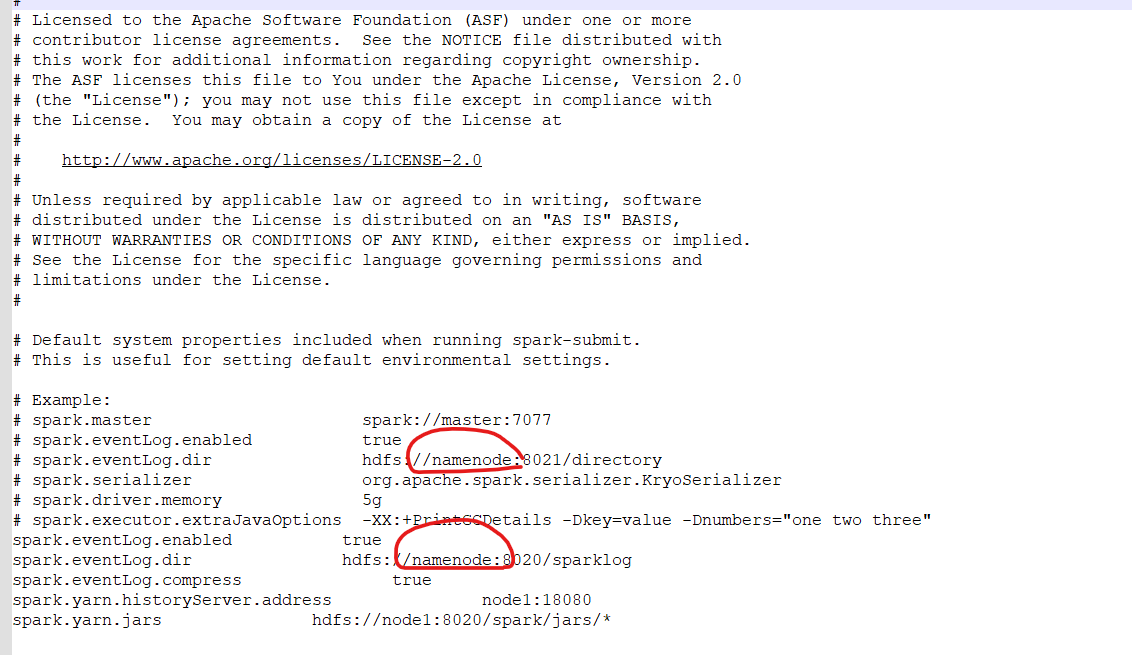

After checking, it turned out that the spark-defaults.conf file was misconfigured. I had copied the file over as-is and forgot to change the hostname to node1, so be careful when editing configuration files.
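To catch this kind of copy-paste leftover before launching spark-shell, a small script can list every hostname referenced in URI-valued properties of spark-defaults.conf and flag the ones that do not resolve. This is a hypothetical Python sketch, and the function names are illustrative. It assumes the whitespace-separated `key value` layout used by spark-defaults.conf.template. It only inspects `scheme://host` URIs (for example an `hdfs://namenode:8020/...` event-log directory), so plain `host:port` values are not checked. The screenshots show which property actually held the stale hostname, so the property names in any sample input here are only guesses.

```python
import re
import socket
from typing import Callable, List, Tuple

# Captures the host part of scheme://host[:port]/... URIs, e.g. "namenode" in hdfs://namenode:8020/spark-logs
URI_HOST = re.compile(r"[A-Za-z][A-Za-z0-9+.\-]*://([^/:\s,]+)")


def hosts_in_conf(text: str) -> List[Tuple[str, str]]:
    """Return (property, hostname) pairs for every URI host found in spark-defaults.conf text."""
    found = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split(None, 1)  # assumed layout: key, whitespace, value
        if len(parts) != 2:
            continue
        key, value = parts
        for host in URI_HOST.findall(value):
            found.append((key, host))
    return found


def _resolves(hostname: str) -> bool:
    """Default check: ask the local resolver for the hostname."""
    try:
        socket.getaddrinfo(hostname, None)
        return True
    except OSError:
        return False


def unresolvable_hosts(
    text: str, resolves: Callable[[str], bool] = _resolves
) -> List[Tuple[str, str]]:
    """Return the (property, hostname) pairs whose hostname cannot be resolved."""
    return [(key, host) for key, host in hosts_in_conf(text) if not resolves(host)]
```

To use it, read the contents of `$SPARK_HOME/conf/spark-defaults.conf` and pass them to `unresolvable_hosts`. A result such as `[('spark.eventLog.dir', 'namenode')]` would point straight at the line to change. The resolver is a parameter so the check can be exercised without touching DNS.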

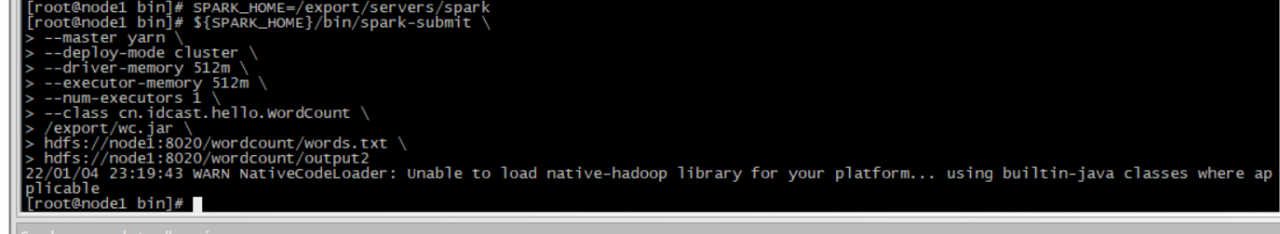

After the change, the problem was solved.

I keep making careless mistakes lately. It's rough 🤦

Similar Posts:

- [Solved] Spark Install Error: ERROR SparkContext: Error initializing SparkContext. java.lang.reflect.InvocationTargetException

- Spark shell cannot start normally due to scala compiler

- [Solved] Exception in thread “main” java.lang.NoSuchMethodError: org.apache.hadoop.security.HadoopKerberosName.setRuleMechanism(Ljava/lang/String;)V

- [Solved] Spark Program Compile error: object apache is not a member of package org

- Spark Program Compilation error: object apache is not a member of package org

- [Solved] Error when calling different modules of Python functions: ModuleNotFoundError: No module named 'python_base.test1'; 'python_base' is not a package

- CentOS Error -bash: systemctl: command not found

- [Solved] java.lang.NoSuchMethodError: scala.Product.$init$(Lscala/Product;)V sets the corresponding Scala version

- java.lang.RuntimeException: java.io.IOException: Initialize ConfOptimizer class occur error, javax.naming.AuthenticationException: Cannot get job queue name

- EPERM: operation not permitted, unlink