Backpropagation in RNN and LSTM models: problems at loss.backward()

The problem tends to appear after upgrading PyTorch.

Problem 1: error at loss.backward()

Trying to backward through the graph a second time (or directly access saved tensors after they have already been freed). Saved intermediate values of the graph are freed when you call .backward() or autograd.grad(). Specify retain_graph=True if you need to backward through the graph a second time or if you need to access saved tensors after calling backward.

(torchenv) star@lab407-1:~/POIRec/STPRec/Flashback_code-master$ python train.py

Problem 2: after switching to loss.backward(retain_graph=True)

one of the variables needed for gradient computation has been modified by an inplace operation: [torch.FloatTensor [10, 10]], which is output 0 of AsStridedBackward0, is at version 2; expected version 1 instead. Hint: enable anomaly detection to find the operation that failed to compute its gradient, with torch.autograd.set_detect_anomaly(True).

Solution

Some pitfalls of loss.backward() and its retain_graph argument

First of all, loss.backward() itself is simple: it computes the gradients of the current tensor with respect to the leaf nodes of the graph it belongs to, and accumulates them into each leaf's .grad attribute.
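The following is a minimal sketch of what backward() does on a toy scalar graph. The tensors w, b, x and the target value 10.0 are hypothetical. It fills .grad on the leaf tensors that require gradients. A second backward() on a fresh graph adds to those values instead of replacing them, which is why optimizer.zero_grad() is needed.

```python
import torch

# Hypothetical leaf tensors that require gradients
w = torch.tensor(2.0, requires_grad=True)
b = torch.tensor(1.0, requires_grad=True)
x = torch.tensor(3.0)  # plain input: no gradient is tracked for it

# The forward pass builds the graph: loss = (y - 10)^2 with y = w * x + b
loss = (w * x + b - 10.0) ** 2
loss.backward()  # d(loss)/dw = 2 * (y - 10) * x, d(loss)/db = 2 * (y - 10)
first_w_grad = w.grad.item()
print(first_w_grad, b.grad.item())  # -18.0 -6.0
print(x.grad)                        # None

# A second forward + backward accumulates into .grad instead of overwriting it
loss = (w * x + b - 10.0) ** 2
loss.backward()
print(w.grad.item(), b.grad.item())  # -36.0 -12.0
```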

In the simplest case, it is used directly like this:

optimizer.zero_grad()  # clear the past gradients

loss.backward()  # backpropagate, computing the current gradients

optimizer.step()  # update the network parameters according to the gradients
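Here are the three calls above inside a complete, runnable loop. This is a sketch under assumptions: the model (a single nn.Linear), the SGD optimizer, the learning rate and the synthetic target y = 2x are all hypothetical choices for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy problem: fit y = 2x with one linear layer
model = nn.Linear(1, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
criterion = nn.MSELoss()

inputs = torch.linspace(-1, 1, 16).unsqueeze(1)  # shape (16, 1)
targets = 2 * inputs

losses = []
for step in range(100):
    loss = criterion(model(inputs), targets)  # a fresh graph every step

    optimizer.zero_grad()  # clear the gradients from the previous step
    loss.backward()        # compute the current gradients
    optimizer.step()       # update the parameters with those gradients
    losses.append(loss.item())

print(losses[0], losses[-1])
```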

or, when the loss is accumulated over several terms:

loss = 0

for i in range(num):

    loss += Loss(input, target)

optimizer.zero_grad()  # clear the past gradients

loss.backward()  # backpropagate and compute the current gradients

optimizer.step()  # update the network parameters according to the gradients
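The next block sketches the accumulated-loss pattern. It uses hypothetical data (three random chunks) and a toy nn.Linear model. All terms are summed into one loss, so a single backward() traverses each part of the graph once. For comparison, the block also calls backward() on each term separately. Each term then has its own graph and .grad accumulates, so the two gradients should agree.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy model and data: num chunks of (input, target)
model = nn.Linear(4, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
criterion = nn.MSELoss()
num = 3
chunks = [(torch.randn(8, 4), torch.randn(8, 1)) for _ in range(num)]

# Accumulate the loss terms, then call backward() once
loss = 0
for inp, target in chunks:
    loss += criterion(model(inp), target)  # the sum links all terms into one graph

optimizer.zero_grad()
loss.backward()
summed_grad = model.weight.grad.clone()

# For comparison: one backward() per term; .grad adds the contributions up
optimizer.zero_grad()
for inp, target in chunks:
    criterion(model(inp), target).backward()  # each term has its own graph
separate_grad = model.weight.grad.clone()

print(torch.allclose(summed_grad, separate_grad))  # True
optimizer.step()  # update with the accumulated gradient
```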

However, sometimes an error like this occurs: RuntimeError: Trying to backward through the graph a second time, but the buffers have already been freed.

This error comes from how PyTorch manages the graph. Each time backward() is called, the intermediate values (buffers) that the graph saved during the forward pass are freed. If the model calls backward() more than once through a shared part of the graph, the later call finds those buffers already freed. Passing retain_graph=True keeps the saved buffers alive after backward(), so the graph can be traversed again. Note that if you write this:
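Below is a minimal reproduction of this error. It assumes a toy graph in which two losses share one intermediate node, y = exp(x); all names are hypothetical. The first backward() frees the output that exp() saved for its backward pass, so the second call fails. With retain_graph=True on the first call, both succeed and their gradients add up in x.grad.

```python
import torch

x = torch.tensor(1.5, requires_grad=True)

# Two losses share one node, y = exp(x); exp() saves its output for backward
y = torch.exp(x)
loss1, loss2 = 2 * y, 3 * y

loss1.backward()          # frees the buffers saved along the way, including exp's
try:
    loss2.backward()      # must pass through exp again, but its buffer is gone
    first_error = None
except RuntimeError as err:
    first_error = str(err)

# Same computation, but keep the buffers alive after the first backward()
x.grad = None
y = torch.exp(x)
loss1, loss2 = 2 * y, 3 * y
loss1.backward(retain_graph=True)
loss2.backward()          # the last call may free the graph

print(x.grad)             # 5 * exp(1.5), about 22.41
```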

optimizer.zero_grad()  # clear the past gradients

loss1.backward(retain_graph=True)  # backpropagate, computing the current gradients

loss2.backward(retain_graph=True)  # backpropagate, computing the current gradients

optimizer.step()  # update the network parameters according to the gradients

then memory use can keep growing, because the retained buffers are not freed after the update. You may eventually run out of memory, and each iteration may become slower than the one before.

The fix is simple:

optimizer.zero_grad()  # clear the past gradients

loss1.backward(retain_graph=True)  # backpropagate, computing the current gradients

loss2.backward()  # backpropagate and compute the current gradients

optimizer.step()  # update the network parameters according to the gradients

That is, do not pass retain_graph=True to the last backward() call. The buffers are then released after each update, and training does not get slower and slower.
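The following sketches this pattern inside a training loop. It assumes a hypothetical model: a shared trunk (nn.Linear followed by tanh) and two heads, each with its own loss. Only the first backward() retains the graph. The graph is rebuilt on every iteration, so nothing is kept across steps.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical model: a shared trunk feeding two heads, one loss per head
trunk = nn.Linear(4, 8)
head1 = nn.Linear(8, 1)
head2 = nn.Linear(8, 1)
params = [*trunk.parameters(), *head1.parameters(), *head2.parameters()]
optimizer = torch.optim.SGD(params, lr=0.05)
criterion = nn.MSELoss()

x = torch.randn(32, 4)
t1, t2 = torch.randn(32, 1), torch.randn(32, 1)

history = []
for step in range(50):
    features = torch.tanh(trunk(x))          # shared part of the graph
    loss1 = criterion(head1(features), t1)
    loss2 = criterion(head2(features), t2)

    optimizer.zero_grad()
    loss1.backward(retain_graph=True)        # keep the trunk's buffers for loss2
    loss2.backward()                         # last call: buffers are freed here
    optimizer.step()
    history.append(loss1.item() + loss2.item())

print(history[0], history[-1])
```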

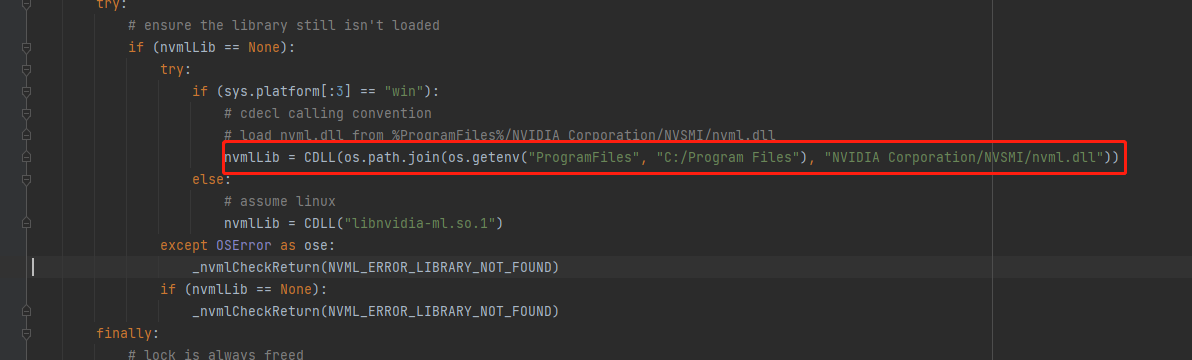

Some may ask: I don't have that many losses, so how can this error happen? The cause may lie in the model itself. The problem appears with both LSTM and GRU. The hidden state also takes part in backpropagation, and when it is passed from one batch to the next, backward() ends up running through the same part of the graph more than once.
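The sketch below shows how a single loss can still trigger both errors from the beginning of this post. It carries an LSTM state (h, c) from one batch to the next without detaching it. This is a toy setup under stated assumptions: an nn.LSTMCell unrolled by hand so each time step is explicit, random data, and made-up sizes. The function name train_without_detach is hypothetical. Without retain_graph, the second batch's backward() runs into the first batch's already-freed graph (Problem 1). With retain_graph=True, it instead reaches weights that optimizer.step() has already updated in place, which triggers the version-counter error (Problem 2).

```python
import torch
import torch.nn as nn


def train_without_detach(retain_graph, num_batches=3, seq_len=4):
    """Hypothetical helper: carry (h, c) across batches WITHOUT detaching.
    Returns the message of the first RuntimeError raised, or None."""
    torch.manual_seed(0)
    cell = nn.LSTMCell(input_size=3, hidden_size=5)
    head = nn.Linear(5, 1)
    params = list(cell.parameters()) + list(head.parameters())
    optimizer = torch.optim.SGD(params, lr=0.01)

    h, c = torch.zeros(2, 5), torch.zeros(2, 5)
    for _ in range(num_batches):
        x = torch.randn(seq_len, 2, 3)  # hypothetical batch: (seq_len, batch, features)
        y = torch.randn(2, 1)
        for t in range(seq_len):
            # (h, c) still links back into the previous batch's graph
            h, c = cell(x[t], (h, c))
        loss = ((head(h) - y) ** 2).mean()
        optimizer.zero_grad()
        try:
            loss.backward(retain_graph=retain_graph)
        except RuntimeError as err:
            return str(err)
        optimizer.step()  # updates the cell's weights in place
    return None


print(train_without_detach(retain_graph=False))  # Problem 1: backward through the graph a second time
print(train_without_detach(retain_graph=True))   # Problem 2: modified by an inplace operation
```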

At first I did not understand why there would be multiple backward() calls. Is it because my LSTM is N-to-N, i.e., it takes N inputs, produces N outputs, computes a loss against N labels, and then backpropagates? Here it helps to think about BPTT (backpropagation through time). In the N-to-1 case, the gradient update needs all inputs of the sequence and the hidden states, and the gradient is passed backward starting from the last step, so there is only one backward(). In the N-to-N and N-to-M cases, several losses have to be backpropagated. Gradients then flow in two directions (from output to input, and backward along time), so the paths overlap. The solution is therefore clear: use detach() to cut off the overlapping backpropagation. (This is only my personal understanding; if anything is wrong, please point it out in the comments so we can discuss it.) There are three ways to cut it off:

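hidden.detach_()  # detach in place

hidden = hidden.detach()  # return a new tensor cut off from the graph

hidden = Variable(hidden.data, requires_grad=True)  # old style: needs `from torch.autograd import Variable`, deprecated since PyTorch 0.4

For an LSTM, hidden is a tuple (h, c), so each element has to be detached, e.g. hidden = tuple(h.detach() for h in hidden).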
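Here is a sketch of the fix applied to a hypothetical LSTM training loop (an nn.LSTMCell unrolled by hand, random data, made-up sizes). The state is detached at the start of every batch, so backpropagation stays within the current batch; this is often called truncated BPTT. An LSTM state is the pair (h, c), so both parts are detached, and no retain_graph is needed.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical setup: an LSTMCell unrolled over short random sequences
cell = nn.LSTMCell(input_size=3, hidden_size=5)
head = nn.Linear(5, 1)
optimizer = torch.optim.SGD(list(cell.parameters()) + list(head.parameters()), lr=0.01)

h = torch.zeros(2, 5)
c = torch.zeros(2, 5)
losses = []
for batch in range(5):
    # Cut the link to the previous batch's graph; detach both h and c
    h, c = h.detach(), c.detach()

    x = torch.randn(4, 2, 3)          # hypothetical batch: (seq_len, batch, features)
    y = torch.randn(2, 1)
    for t in range(x.size(0)):        # BPTT stays within this batch's time steps
        h, c = cell(x[t], (h, c))
    loss = ((head(h) - y) ** 2).mean()

    optimizer.zero_grad()
    loss.backward()                   # a single backward per batch, no retain_graph
    optimizer.step()
    losses.append(loss.item())

print(losses)
```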