After installing TensorFlow 2.0.0, the following error occurs at runtime:

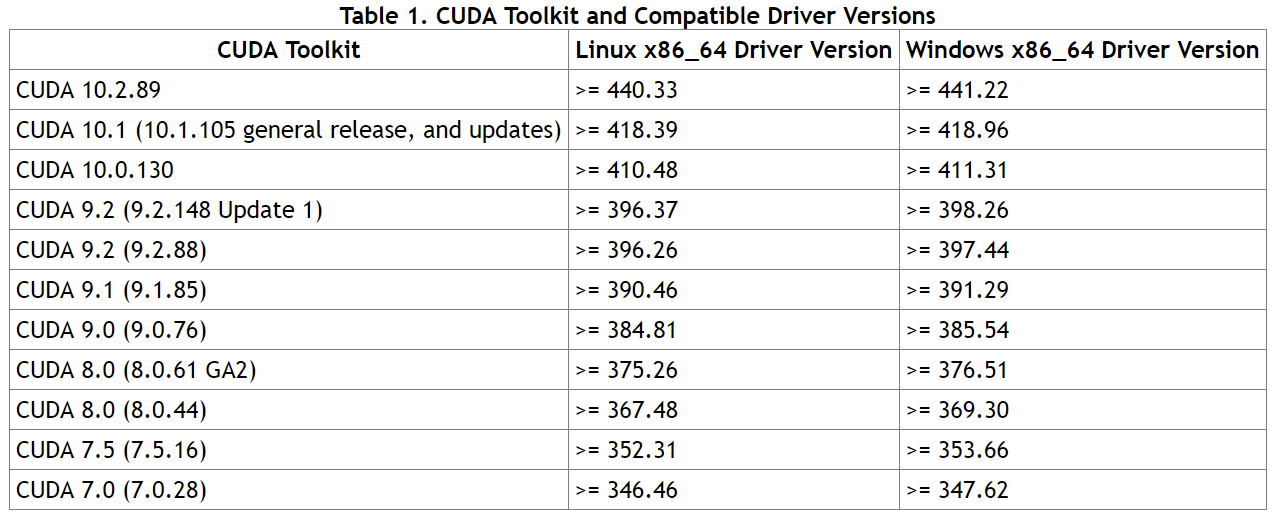

`tensorflow.python.framework.errors_impl.InternalError: cudaGetDevice() failed. Status: CUDA driver version is insufficient for CUDA runtime version`

This happens because the installed NVIDIA driver is too old for the installed CUDA runtime. To install TensorFlow 2.0.0, the CUDA runtime had been upgraded to CUDA 10.0, but the driver had not been upgraded with it. The driver version and the CUDA runtime version must satisfy the compatibility table in the CUDA Toolkit release notes ( https://docs.nvidia.com/cuda/cuda-toolkit-release-notes/index.html ):

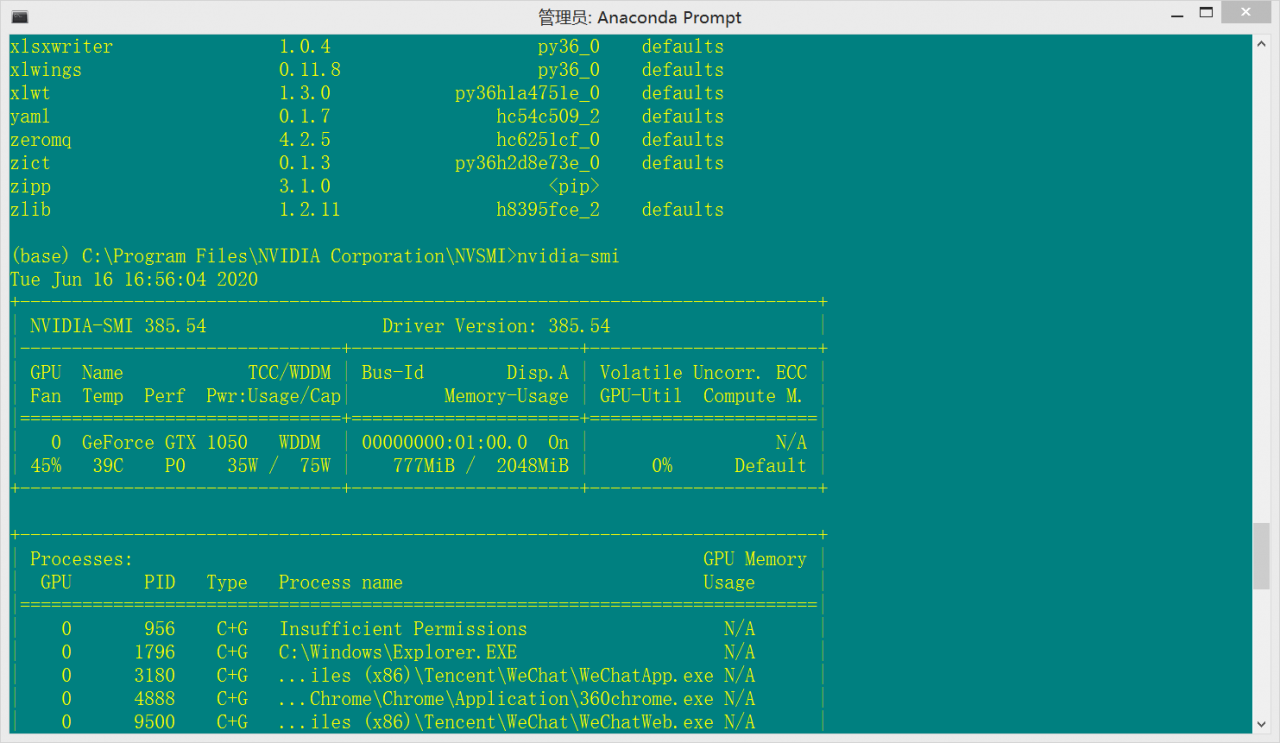

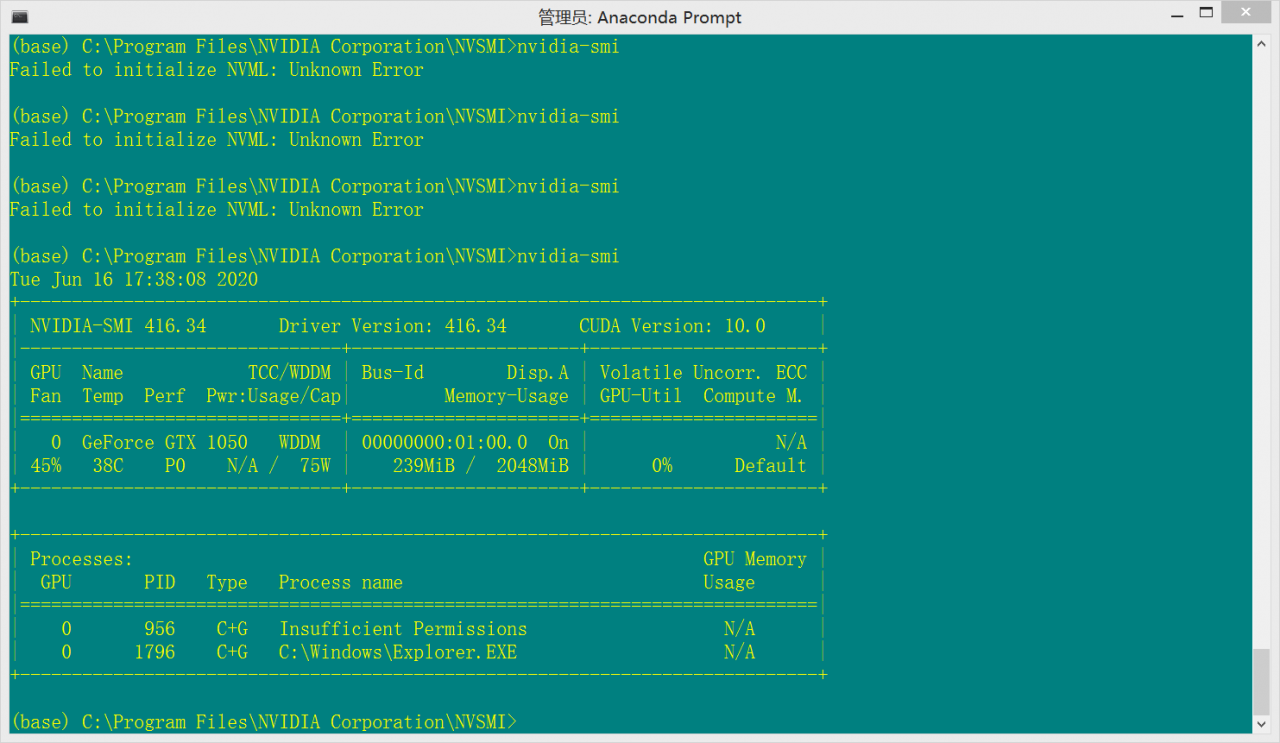

Running `nvidia-smi` shows that the installed driver version is 385.54, which does not meet the requirement above.
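The same check can be scripted. The following is a minimal sketch, not part of the original troubleshooting steps: it reads the driver version through `nvidia-smi --query-gpu=driver_version --format=csv,noheader` and compares it with a minimum. The value `411.31` is an assumption (the minimum Windows driver that NVIDIA's release notes list for CUDA 10.0) and should be confirmed against the table above; the function names are hypothetical.

```python
import subprocess

# Assumption: minimum Windows driver for CUDA 10.0 per NVIDIA's release notes.
# Confirm this value against the compatibility table before relying on it.
MIN_DRIVER_CUDA_10_0_WINDOWS = "411.31"


def parse_version(text):
    """Turn a dotted version such as '385.54' into a comparable tuple (385, 54)."""
    return tuple(int(part) for part in text.strip().split("."))


def driver_is_sufficient(installed, required):
    """Return True when the installed driver is at least the required version."""
    return parse_version(installed) >= parse_version(required)


def installed_driver_version():
    """Ask nvidia-smi for the driver version of the first GPU it lists."""
    output = subprocess.check_output(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        universal_newlines=True,
    )
    return output.splitlines()[0].strip()


if __name__ == "__main__":
    try:
        version = installed_driver_version()
    except (OSError, subprocess.CalledProcessError) as exc:
        # nvidia-smi is missing from PATH or could not talk to the driver.
        print("Could not query nvidia-smi: {}".format(exc))
    else:
        ok = driver_is_sufficient(version, MIN_DRIVER_CUDA_10_0_WINDOWS)
        print("Driver {}: {}".format(version, "OK" if ok else "too old for CUDA 10.0"))
```

With the versions from this post, `driver_is_sufficient("385.54", "411.31")` is false and `driver_is_sufficient("416.34", "411.31")` is true. On some Windows installations `nvidia-smi` may not be on `PATH`, in which case the script reports that it could not run the command.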

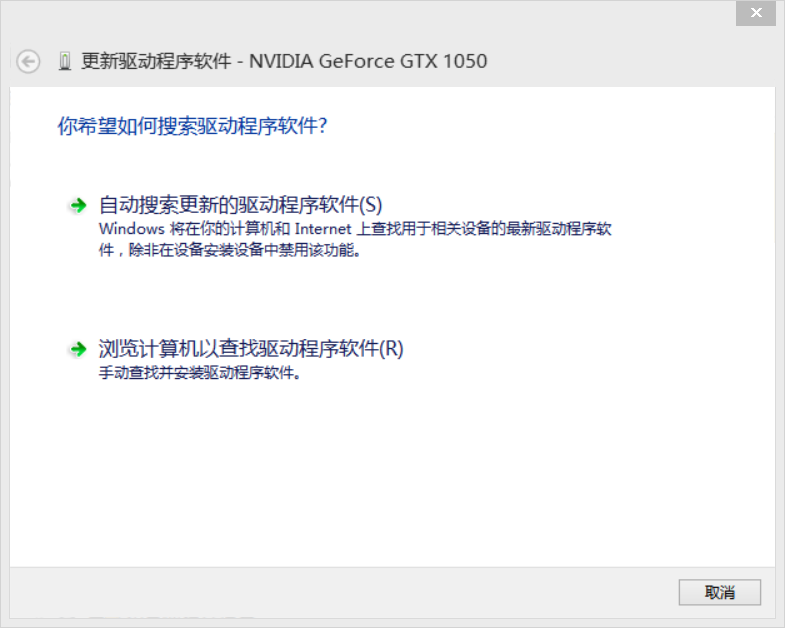

So we upgrade the driver directly from the Windows 8.1 Device Manager, using the option to search automatically for updated driver software.

After the upgrade, `nvidia-smi` reports driver version 416.34, and running TensorFlow 2.0.0 no longer produces the error above.
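To confirm the fix from Python as well, a short check along these lines may help. It is a sketch under assumptions: it uses `tf.config.experimental.list_physical_devices`, which is available in TensorFlow 2.0, and the helper names are hypothetical. The original error may surface at this call or only later, when the first GPU operation runs, so a clean result here is a good sign rather than a guarantee.

```python
def gpu_summary(device_names):
    """Format a one-line summary of the GPUs TensorFlow reported."""
    if not device_names:
        return "No GPU visible to TensorFlow"
    return "GPUs visible to TensorFlow: " + ", ".join(device_names)


def check_tensorflow_gpu():
    """Import TensorFlow lazily and report which GPUs it can see."""
    try:
        import tensorflow as tf
    except ImportError:
        return "TensorFlow is not installed in this environment"
    try:
        gpus = tf.config.experimental.list_physical_devices("GPU")
    except Exception as exc:
        # A driver/runtime mismatch may be reported here as an InternalError.
        return "GPU initialisation failed: {}".format(exc)
    return gpu_summary([gpu.name for gpu in gpus])


if __name__ == "__main__":
    print(check_tensorflow_gpu())
```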
