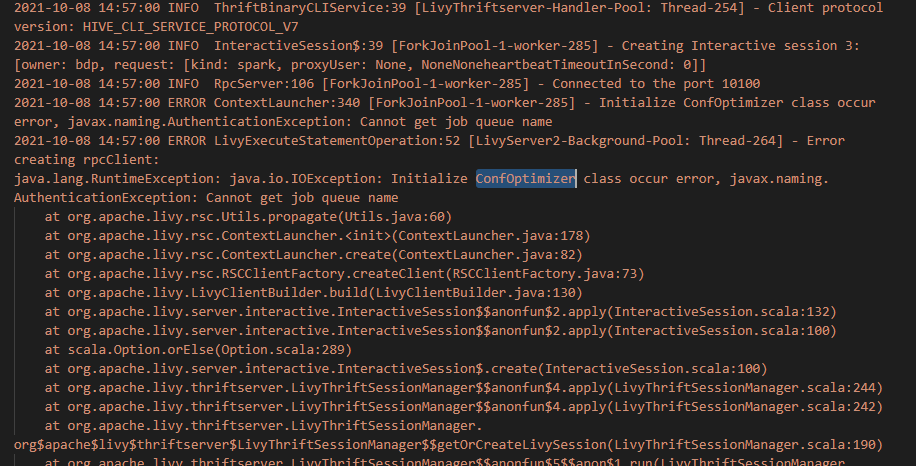

This error appeared while I was recently scaling out livyserver on a cluster: creating the remote client failed.

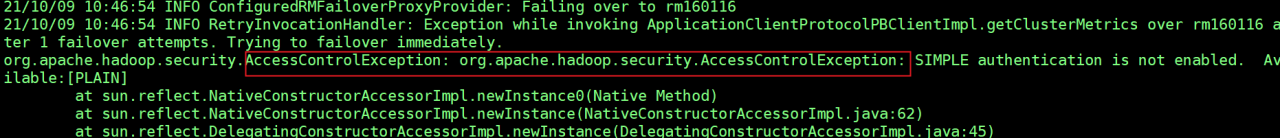

Recalling that the cluster appears to have whitelist restrictions, I logged in to the livyserver host and ran the Spark example job directly:

```shell
./bin/spark-submit --class org.apache.spark.examples.SparkPi --master yarn-cluster ./examples/jars/spark-example
```

This submission also failed with an access-restricted error, which confirmed the cause. Adding the host to the whitelist fixed the problem.
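If the whitelist is enforced at the network level (an assumption; the post does not say how it is implemented), a quicker first check than submitting a job is to test whether the livyserver host can open TCP connections to the cluster services at all. The sketch below relies on Bash's `/dev/tcp` and GNU `timeout`. The host names are hypothetical placeholders. 8032 is the default YARN ResourceManager RPC port and 8020 is a commonly used NameNode RPC port, so take the real values from your `yarn-site.xml` and `core-site.xml`.

```shell
#!/usr/bin/env bash
# Hypothetical pre-check: can this host open TCP connections to cluster services?
# The host names are placeholders; replace them with your ResourceManager and
# NameNode addresses from yarn-site.xml / core-site.xml.

# check_port HOST PORT: exit status 0 if a TCP connection opens within 3 seconds
check_port() {
  local host="$1" port="$2"
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

for target in "rm-host.example:8032" "nn-host.example:8020"; do
  host="${target%%:*}"   # part before the colon
  port="${target##*:}"   # part after the colon
  if check_port "$host" "$port"; then
    echo "reachable:   $target"
  else
    echo "UNREACHABLE: $target (DNS, service down, or blocked by a whitelist/firewall)"
  fi
done
```

An UNREACHABLE line only shows that the connection could not be opened. DNS problems or a stopped service produce the same result, so confirm with the cluster administrators before changing the whitelist.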

Similar Posts:

- org.apache.spark.SparkException: A master URL must be set in your configuration

- [Solved] java.lang.NoSuchMethodError: scala.Product.$init$(Lscala/Product;) V sets the corresponding Scala version

- [Solved] Exception in thread “main” java.lang.NoSuchMethodError: org.apache.hadoop.security.HadoopKerberosName.setRuleMechanism(Ljava/lang/String;)V

- [Solved] Error: JAVA_HOME is not set and could not be found.

- Only one SparkContext may be running in this JVM

- [Solved] spark-shell Error: java.lang.IllegalArgumentException: java.net.UnknownHostException: namenode

- SparkSQL Use DataSet to Operate createOrReplaceGlobalTempView Error

- [Solved] SparkException: Could not find CoarseGrainedScheduler or it has been stopped.

- [Solved] idea Remote Submit spark Error: java.io.IOException: Failed to connect to DESKTOP-H

- Spark2.x Error: Queue’s AM resource limit exceeded.