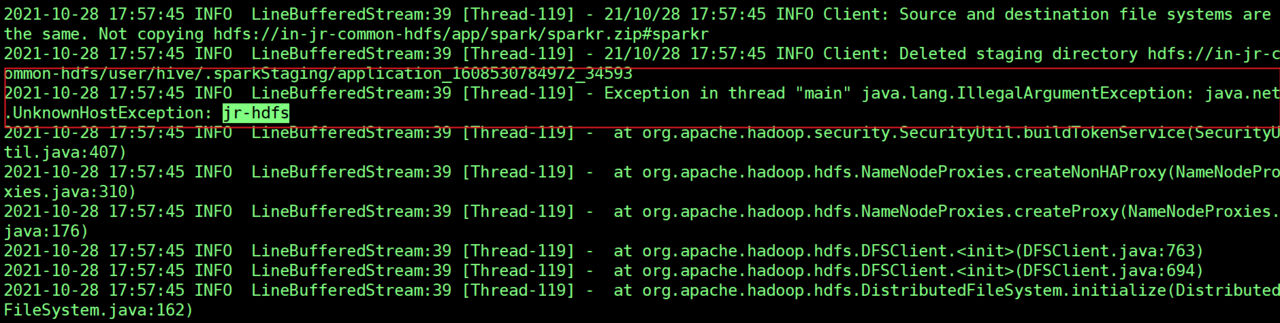

Recently, while deploying a service to production, I ran into the error `java.net.UnknownHostException: Jr HDFS`. At first glance I assumed it was a problem with `/etc/hosts`, but after checking that file I found it was not the cause.

In the end, I discovered that the address configured in `PYSPARK_ARCHIVES_PATH` was wrong.
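A quick way to spot this kind of mistake is to print the host or nameservice part of every `hdfs://` entry in the variable and compare it with what the cluster actually defines. The following is only a minimal sketch: the helper name `archive_authorities` is made up for illustration, and it assumes the variable holds a comma-separated list of URIs.

```python
import os
from urllib.parse import urlparse


def archive_authorities(value):
    """Return (uri, authority) pairs for each hdfs:// entry.

    Assumes `value` is a comma-separated list of URIs. The authority is
    the host[:port] or nameservice name that the HDFS client must resolve.
    """
    pairs = []
    for uri in value.split(","):
        uri = uri.strip()
        if not uri:
            continue  # skip empty entries such as a trailing comma
        parsed = urlparse(uri)
        if parsed.scheme == "hdfs":
            pairs.append((uri, parsed.netloc))
    return pairs


if __name__ == "__main__":
    # Read the value from the environment of the process that submits the job.
    value = os.environ.get("PYSPARK_ARCHIVES_PATH", "")
    for uri, authority in archive_authorities(value):
        print(f"{authority or '(no authority)'} <- {uri}")
```

If a printed authority matches neither a resolvable host nor a nameservice defined in the cluster's HDFS client configuration, that entry is a likely source of the `UnknownHostException`.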
