
java.io.IOException: No FileSystem for scheme: hdfs

This article looks at a problem that appears after packaging a project together with its Maven dependencies into a single jar. After we used the Maven Assembly plugin to build such a jar and provided it to another project, the following error was reported:

log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".

SLF4J: Defaulting to no-operation (NOP) logger implementation

SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.

Exception in thread "main" java.io.IOException: No FileSystem for scheme: hdfs

at org.apache.hadoop.fs.FileSystem.getFileSystemClass(FileSystem.java:2421)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2428)

at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:88)

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2467)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2449)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:367)

at org.apache.hadoop.fs.FileSystem$1.run(FileSystem.java:156)

at org.apache.hadoop.fs.FileSystem$1.run(FileSystem.java:153)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1491)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:153)

at com.cetc.di.HDFSFileSystem.<init>(HDFSFileSystem.java:41)

at callhdfs.Main.main(Main.java:11)

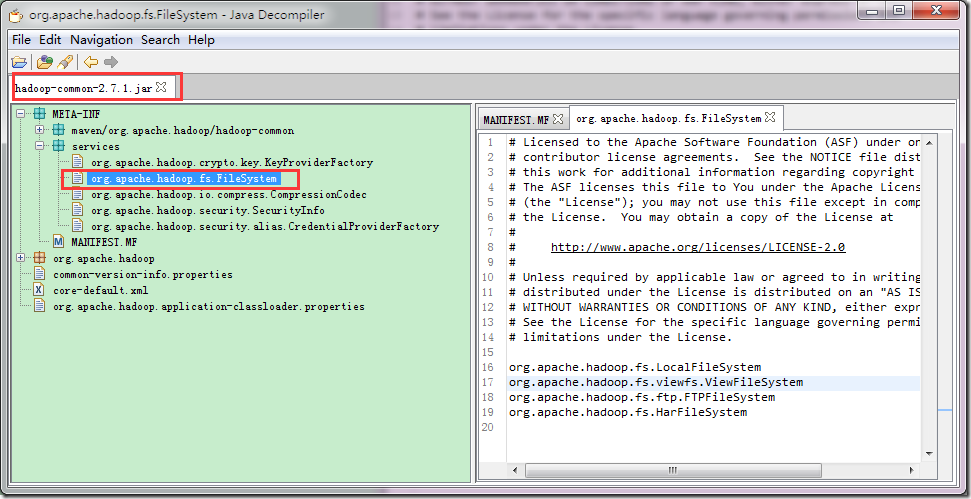

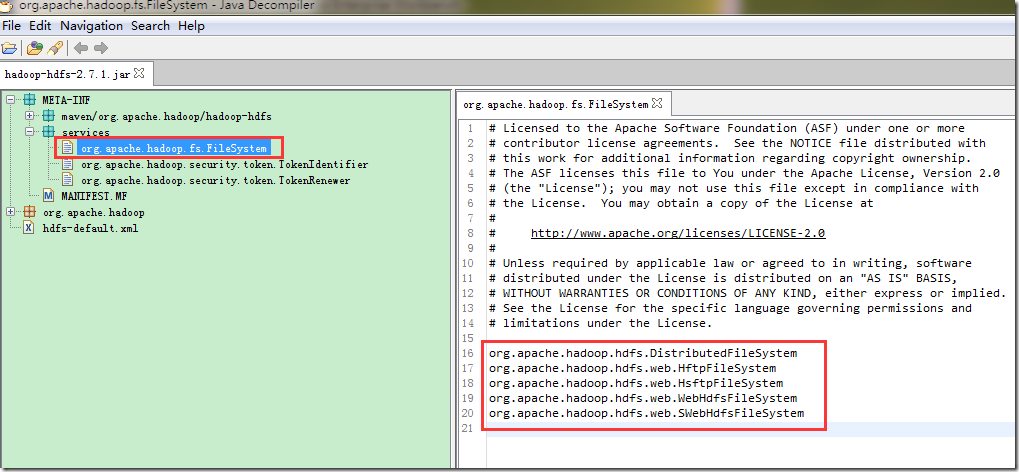

However, the same code runs normally inside the original project, when it is not packaged into a jar. After a long investigation, we found that two jars relate to the Hadoop FileSystem: hadoop-hdfs-2.7.1.jar and hadoop-common-2.7.1.jar. The META-INF/services directories of these two jars contain the following:

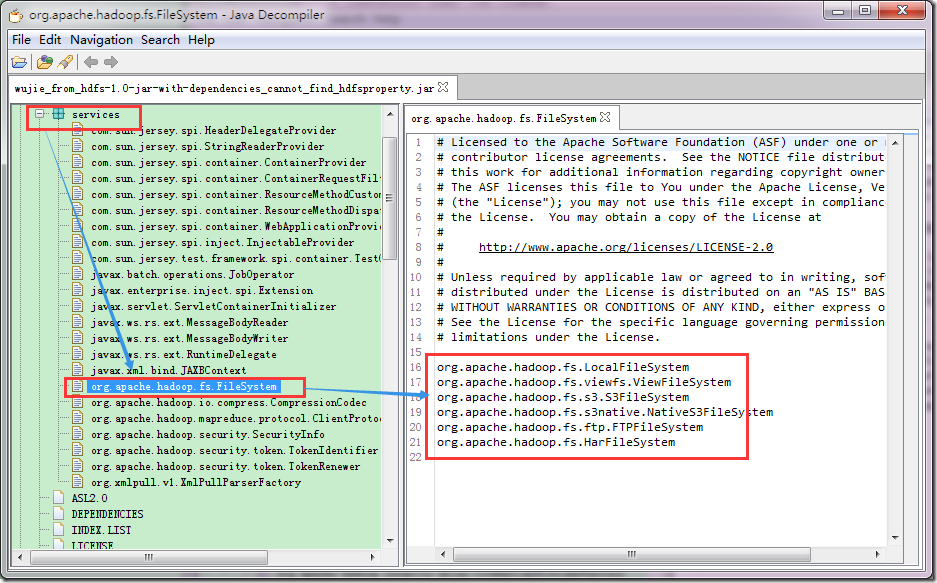

As you can see, both jars contain a file named org.apache.hadoop.fs.FileSystem in their META-INF/services directory. The Maven Assembly plugin unpacks all dependency jars and then repacks their contents into a single jar, so a file that exists at the same path in several jars gets overwritten. Let's see what was kept in the packaged jar:

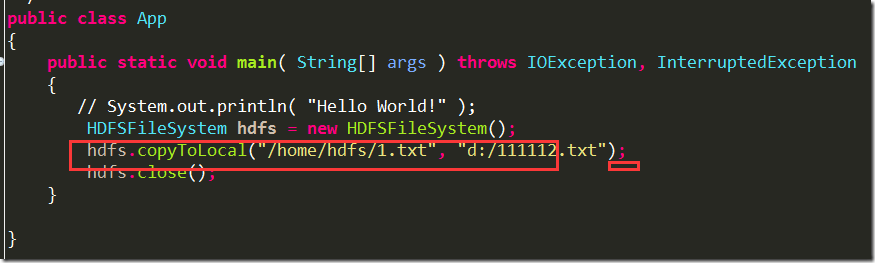

As you can see, the Maven Assembly plugin (FatJar behaves the same way) puts the services file from hadoop-common.jar into the final jar, while the one from hadoop-hdfs.jar is overwritten.

Our code accesses the file system with a URI of the form hdfs://IP:port, but the final jar no longer contains an implementation registered for the hdfs scheme, so it throws:

java.io.IOException: No FileSystem for scheme: hdfs

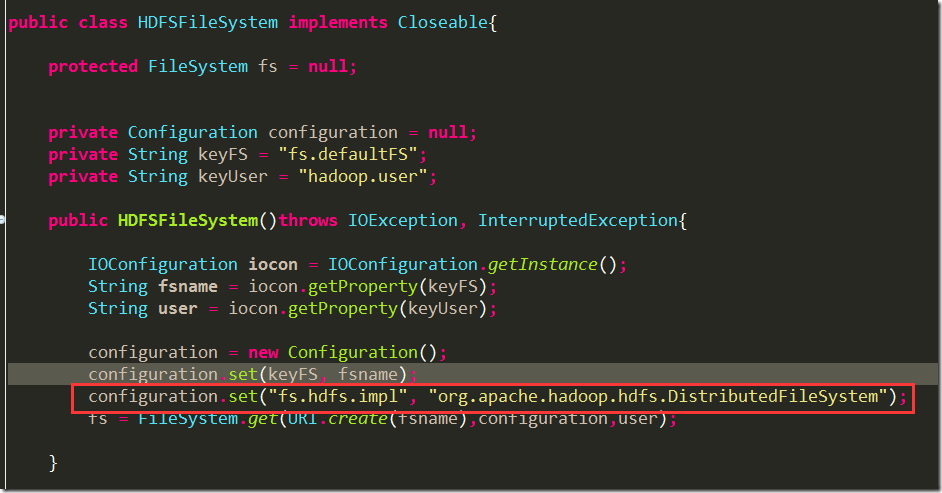
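The overwrite described above can be illustrated with a small, self-contained Java model. It is a hypothetical sketch, not the Maven Assembly plugin's code: each jar is represented as a map from entry path to file contents, and the service-file contents are abbreviated to one class per jar (the real files list more). Processing the hadoop-hdfs entries before the hadoop-common ones reproduces the outcome seen above; the actual order depends on how the plugin walks the dependencies. For contrast, a service-aware merge that concatenates same-path files under META-INF/services keeps both entries. Map.of and List.of require Java 9 or later.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical model of how a fat-jar build combines META-INF/services files.
 * Each "jar" is a map from entry path to file contents. Not the plugin's code.
 */
public class ServicesMergeDemo {

    static final String SERVICE_PATH = "META-INF/services/org.apache.hadoop.fs.FileSystem";

    /** Unpack-and-repack: a later jar's entry replaces an earlier one with the same path. */
    static Map<String, String> mergeOverwrite(List<Map<String, String>> jars) {
        Map<String, String> out = new LinkedHashMap<>();
        for (Map<String, String> jar : jars) {
            out.putAll(jar);
        }
        return out;
    }

    /** Service-aware merge: files under META-INF/services/ with the same path are concatenated. */
    static Map<String, String> mergeConcatServices(List<Map<String, String>> jars) {
        Map<String, String> out = new LinkedHashMap<>();
        for (Map<String, String> jar : jars) {
            for (Map.Entry<String, String> e : jar.entrySet()) {
                String key = e.getKey();
                String prev = out.get(key);
                if (key.startsWith("META-INF/services/") && prev != null) {
                    // Make sure the previous file ends with a newline before appending.
                    String sep = prev.endsWith("\n") ? "" : "\n";
                    out.put(key, prev + sep + e.getValue());
                } else {
                    out.put(key, e.getValue());
                }
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Abbreviated, illustrative contents: the real files list more classes.
        Map<String, String> hdfsJar = Map.of(SERVICE_PATH, "org.apache.hadoop.hdfs.DistributedFileSystem\n");
        Map<String, String> commonJar = Map.of(SERVICE_PATH, "org.apache.hadoop.fs.LocalFileSystem\n");
        // hadoop-common is processed last, so its copy wins in the overwrite merge.
        List<Map<String, String>> jars = List.of(hdfsJar, commonJar);

        System.out.println("overwrite:   " + mergeOverwrite(jars).get(SERVICE_PATH).trim());
        System.out.println("concatenate: "
                + mergeConcatServices(jars).get(SERVICE_PATH).trim().replace('\n', ' '));
    }
}
```

Running main prints only org.apache.hadoop.fs.LocalFileSystem for the overwrite merge, and both class names for the concatenating merge, which is why the hdfs scheme disappears in the first case.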

The solution is to set this class, org.apache.hadoop.hdfs.DistributedFileSystem, explicitly when building the Hadoop configuration:

configuration.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");

After repackaging, everything works.

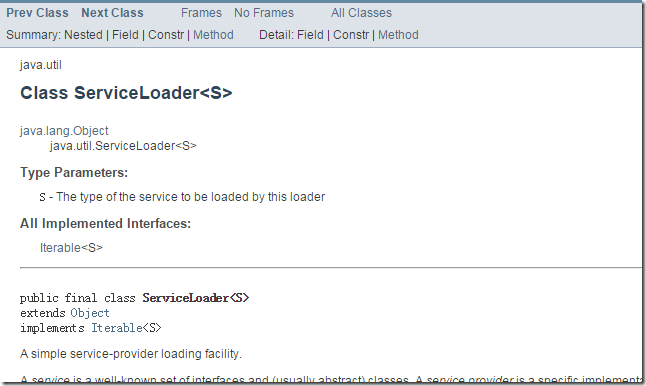

The services files under META-INF in a jar belong to Java's service provider mechanism, implemented by java.util.ServiceLoader.

For a detailed introduction, see the official Java documentation: http://docs.oracle.com/javase/7/docs/api/java/util/ServiceLoader.html

This article shows how ServiceLoader works in practice: http://www.concretepage.com/java/serviceloader-java-example

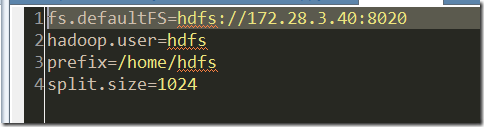

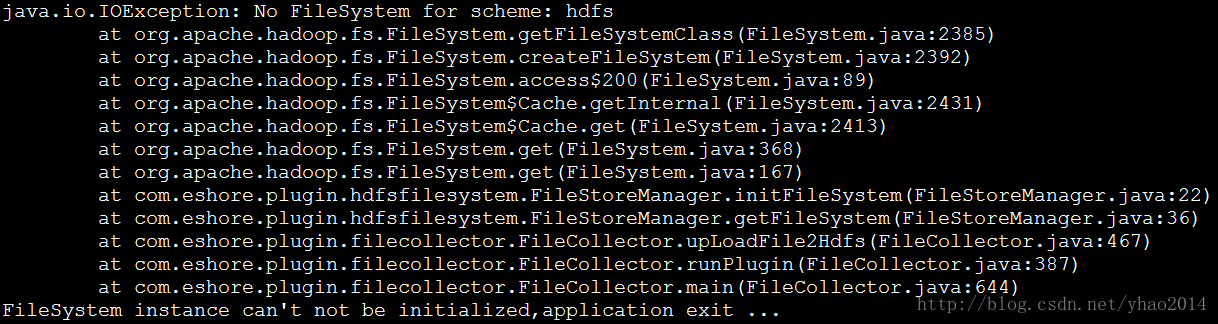
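To see which copies of the provider-configuration file are actually visible at runtime, a small JDK-only diagnostic can enumerate them with ClassLoader.getResources and parse each one according to the format documented for ServiceLoader: UTF-8 text, one fully qualified class name per line, '#' starting a comment, surrounding whitespace and blank lines ignored. The class name ServiceFileDump and its command-line usage are hypothetical; it only reads the files and does not load any Hadoop classes.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Enumeration;
import java.util.List;

/** Lists every copy of a META-INF/services file on the classpath and the providers it declares. */
public class ServiceFileDump {

    /** Parses a provider-configuration file: one class name per line, '#' starts a comment. */
    static List<String> parseProviders(InputStream in) throws IOException {
        List<String> names = new ArrayList<>();
        BufferedReader r = new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8));
        String line;
        while ((line = r.readLine()) != null) {
            int hash = line.indexOf('#');
            if (hash >= 0) {
                line = line.substring(0, hash); // drop the comment part
            }
            line = line.trim();
            if (!line.isEmpty()) {
                names.add(line);
            }
        }
        return names;
    }

    public static void main(String[] args) throws IOException {
        String service = args.length > 0 ? args[0] : "org.apache.hadoop.fs.FileSystem";
        String path = "META-INF/services/" + service;
        ClassLoader loader = Thread.currentThread().getContextClassLoader();
        if (loader == null) {
            loader = ServiceFileDump.class.getClassLoader();
        }
        Enumeration<URL> urls = loader.getResources(path);
        int copies = 0;
        while (urls.hasMoreElements()) {
            URL url = urls.nextElement();
            copies++;
            System.out.println(url);
            try (InputStream in = url.openStream()) {
                for (String name : parseProviders(in)) {
                    System.out.println("  " + name);
                }
            }
        }
        System.out.println(copies + " copies of " + path);
    }
}
```

For example, running it on a Unix-like system as java -cp app.jar:. ServiceFileDump (app.jar being a placeholder for the assembled jar) would be expected to report a single copy, whereas a classpath containing the separate hadoop-common and hadoop-hdfs jars should report one copy per jar.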

Recently, several project modules needed to be upgraded from Hadoop 1 to Hadoop 2, and a few problems came up during the migration. One of them was that accessing HDFS through FileSystem fs = FileSystem.get(conf); reported an error. The error details are as follows:

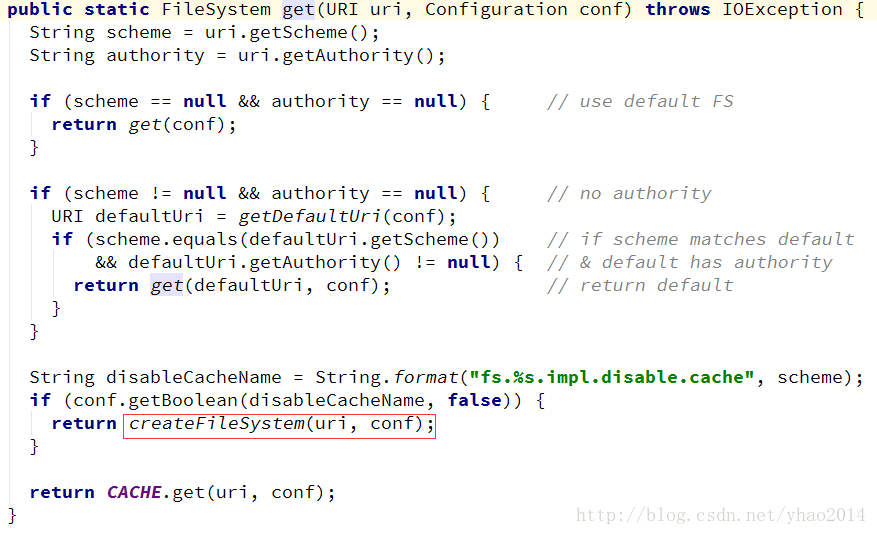

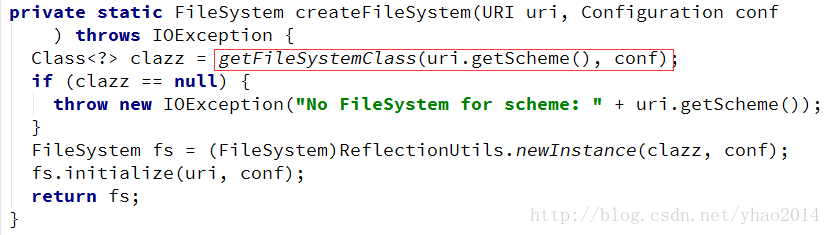

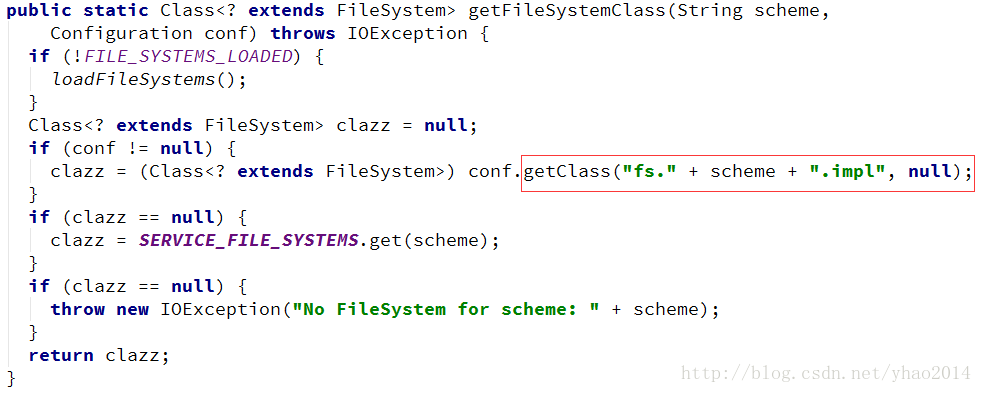

Looking at the FileSystem class, we found that when it is initialized through FileSystem.get(), the implementation class is obtained through static loading. The implementation is as follows:

The code shows that the call eventually reaches the getFileSystemClass(String scheme, Configuration conf) method. This method reads the configuration key "fs." + scheme + ".impl" (in this case, fs.hdfs.impl), which would normally be defined in core-default.xml. However, this key is not configured in the default configuration file (core-default.xml in hadoop-common-x.jar).
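The lookup described above can be summarized in a simplified Java model. This is not Hadoop's source: plain maps stand in for Configuration and for the scheme-to-class table that Hadoop builds from the META-INF/services files. The order used here (an explicit fs.&lt;scheme&gt;.impl setting first, then the service-file table) is an assumption, but it is consistent with why setting fs.hdfs.impl fixes the error even when the hdfs service entry has been lost.

```java
import java.io.IOException;
import java.util.HashMap;
import java.util.Map;

/** Simplified, hypothetical model of resolving a FileSystem class for a URI scheme. */
public class SchemeLookupModel {

    static String resolve(String scheme, Map<String, String> conf, Map<String, String> serviceFileSystems)
            throws IOException {
        // 1. An explicit "fs.<scheme>.impl" setting is consulted first.
        String impl = conf.get("fs." + scheme + ".impl");
        // 2. Otherwise fall back to what the META-INF/services files registered.
        if (impl == null) {
            impl = serviceFileSystems.get(scheme);
        }
        if (impl == null) {
            throw new IOException("No FileSystem for scheme: " + scheme);
        }
        return impl;
    }

    public static void main(String[] args) throws IOException {
        // Only the hadoop-common services file survived packaging (abbreviated).
        Map<String, String> services = new HashMap<>();
        services.put("file", "org.apache.hadoop.fs.LocalFileSystem");

        Map<String, String> conf = new HashMap<>();
        try {
            resolve("hdfs", conf, services);
        } catch (IOException e) {
            System.out.println(e.getMessage());
        }

        // The fix from this article: name the implementation explicitly.
        conf.put("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
        System.out.println(resolve("hdfs", conf, services));
    }
}
```

Running main first prints No FileSystem for scheme: hdfs and then, after the key is set, org.apache.hadoop.hdfs.DistributedFileSystem.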

——————————————

Solution:

Add the following configuration information to the configuration file core-default.xml:

<property>

<name>fs.hdfs.impl</name>

<value>org.apache.hadoop.hdfs.DistributedFileSystem</value>

<description>The FileSystem for hdfs: uris.</description>

</property>

After the program was packaged as a jar and run on the server, it executed correctly as expected, but it was still unpleasant to see the following error in the log:


2014-08-13 17:16:49 [WARN]-[main]-[org.apache.hadoop.hbase.util.DynamicClassLoader] Failed to identify the fs of dir /tmp/hbase-ecm2/hbase/lib, ignored

java.io.IOException: No FileSystem for scheme: file

at org.apache.hadoop.fs.FileSystem.getFileSystemClass(FileSystem.java:2385) ~[ecm.jar:na]

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2392) ~[ecm.jar:na]

at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:89) ~[ecm.jar:na]

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2431) ~[ecm.jar:na]

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2413) ~[ecm.jar:na]

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:368) ~[ecm.jar:na]

[Problem analysis]

After packaging with Eclipse, the core-site.xml configuration file inside the jar does not contain the following two configuration items:

<property>

<name>fs.hdfs.impl</name>

<value>org.apache.hadoop.hdfs.DistributedFileSystem</value>

<description>The FileSystem for hdfs: uris.</description>

</property>

<property>

<name>fs.file.impl</name>

<value>org.apache.hadoop.fs.LocalFileSystem</value>

<description>The FileSystem for file: uris.</description>

</property>

Just add the above two properties to core-site.xml, replace the XML file in the jar, and deploy the jar to the server again; it then runs successfully.
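Before redeploying, it may help to confirm that the core-site.xml inside the jar really contains both properties. The sketch below uses only the JDK (java.util.jar and the built-in DOM parser). It assumes core-site.xml sits at the root of the jar (adjust the entry name otherwise), and the class name CoreSiteCheck is hypothetical.

```java
import java.io.InputStream;
import java.util.HashMap;
import java.util.Map;
import java.util.jar.JarFile;
import java.util.zip.ZipEntry;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Checks that a jar's core-site.xml defines fs.hdfs.impl and fs.file.impl. */
public class CoreSiteCheck {

    /** Reads <property><name>/<value> pairs from a Hadoop-style configuration file. */
    static Map<String, String> readProperties(InputStream in) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(in);
        Map<String, String> props = new HashMap<>();
        NodeList nodes = doc.getElementsByTagName("property");
        for (int i = 0; i < nodes.getLength(); i++) {
            Element p = (Element) nodes.item(i);
            NodeList names = p.getElementsByTagName("name");
            NodeList values = p.getElementsByTagName("value");
            if (names.getLength() > 0 && values.getLength() > 0) {
                props.put(names.item(0).getTextContent().trim(), values.item(0).getTextContent().trim());
            }
        }
        return props;
    }

    public static void main(String[] args) throws Exception {
        if (args.length != 1) {
            System.out.println("usage: java CoreSiteCheck <path-to-jar>");
            return;
        }
        try (JarFile jar = new JarFile(args[0])) {
            // Assumed location: core-site.xml at the root of the jar.
            ZipEntry entry = jar.getEntry("core-site.xml");
            if (entry == null) {
                System.out.println("core-site.xml not found at the jar root");
                return;
            }
            Map<String, String> props;
            try (InputStream in = jar.getInputStream(entry)) {
                props = readProperties(in);
            }
            for (String key : new String[] {"fs.hdfs.impl", "fs.file.impl"}) {
                System.out.println(key + " = " + props.getOrDefault(key, "(missing)"));
            }
        }
    }
}
```

For example, java CoreSiteCheck ecm.jar prints each of the two keys with its value, or (missing) if the property is absent.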