I ran into a problem when using TensorBoard for visualization. Suppose you define

MERGED = tf.summary.merge_all()

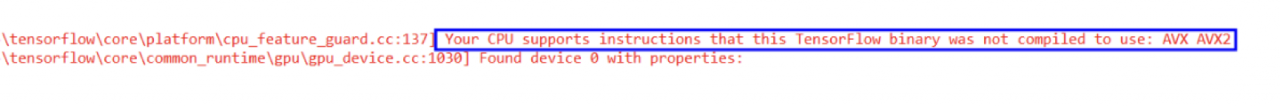

After this, if you run the merged op on its own with SESS.run([MERGED]), the error shown above is reported.

Instead, run it together with the other ops, for example SESS.run([TRAIN, MERGED]). After this change the error is no longer reported.

I can't fully explain the underlying cause. I spent a long time looking into this error and found several suggested solutions, such as the one below, but none of them solved my problem.

https://stackoverflow.com/questions/35114376/error-when-computing-summaries-in-tensorflow

Later, I looked at a program on GitHub and modified my code to follow its pattern:

# -*- coding: utf-8 -*-
"""
Created on Wed Oct 31 17:07:38 2018

@author: LiZebin
"""

from __future__ import print_function

import numpy as np
import tensorflow as tf

tf.reset_default_graph()
SESS = tf.Session()
LOGDIR = "logs/"

# Training data for the line y = 2.3 * x + 5.6
X = np.arange(0, 1000, 2, dtype=np.float32)
Y = X*2.3+5.6

X_ = tf.placeholder(tf.float32, name="X")
Y_ = tf.placeholder(tf.float32, name="Y")
W = tf.get_variable(name="Weights", shape=[1],
                    dtype=tf.float32, initializer=tf.random_normal_initializer())
B = tf.get_variable(name="bias", shape=[1],
                    dtype=tf.float32, initializer=tf.random_normal_initializer())
PRED = W*X_+B
LOSS = tf.reduce_mean(tf.square(Y_-PRED))
tf.summary.scalar("Loss", LOSS)
TRAIN = tf.train.GradientDescentOptimizer(learning_rate=0.0000001).minimize(LOSS)
WRITER = tf.summary.FileWriter(LOGDIR, SESS.graph)
MERGED = tf.summary.merge_all()
SESS.run(tf.global_variables_initializer())

for step in range(20000):
    # Run MERGED in the same call as the other ops. Writing
    # RS = SESS.run(MERGED) on its own after this line reports the same error as before.
    c1, c2, loss, RS, _ = SESS.run([W, B, LOSS, MERGED, TRAIN], feed_dict={X_:X, Y_:Y})
    WRITER.add_summary(RS, step)  # pass the step so TensorBoard plots the loss against it
    if step%500 == 0:
        temp = "c1=%s, c2=%s, loss=%s"%(c1, c2, loss)
        print(temp)

WRITER.close()
SESS.close()
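
One possible explanation for this behavior, assuming the error in the screenshot is the common "You must feed a value for placeholder tensor" error: the merged summary depends on LOSS, which depends on the placeholders, so running it without a feed_dict fails, while the combined SESS.run call succeeds because it passes feed_dict. The sketch below is not the original script. It builds a tiny stand-in graph (the helper name summary_runs is hypothetical) and uses the tf.compat.v1 API so that it should also run on TensorFlow 2.x.

```python
import numpy as np
import tensorflow as tf

tf1 = tf.compat.v1  # assumption: a TensorFlow build that ships the compat.v1 API


def summary_runs(with_feed):
    """Return True if the merged summary op runs, False if a placeholder was not fed."""
    graph = tf.Graph()
    with graph.as_default():
        # Stand-in for the script's graph: a loss that depends on a placeholder.
        x = tf1.placeholder(tf.float32, name="X")
        loss = tf.reduce_mean(tf.square(x))
        tf1.summary.scalar("Loss", loss)
        merged = tf1.summary.merge_all()
        feed = {x: np.arange(4, dtype=np.float32)} if with_feed else None
        with tf1.Session(graph=graph) as sess:
            try:
                sess.run(merged, feed_dict=feed)
                return True
            except tf.errors.InvalidArgumentError:
                # Raised when a required placeholder has no value.
                return False


print("merged alone, no feed_dict:", summary_runs(False))
print("merged alone, with feed_dict:", summary_runs(True))
```

If this is indeed the cause, running the summary by itself in the script above should also work as long as the placeholders are fed, for example RS = SESS.run(MERGED, feed_dict={X_: X, Y_: Y}).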