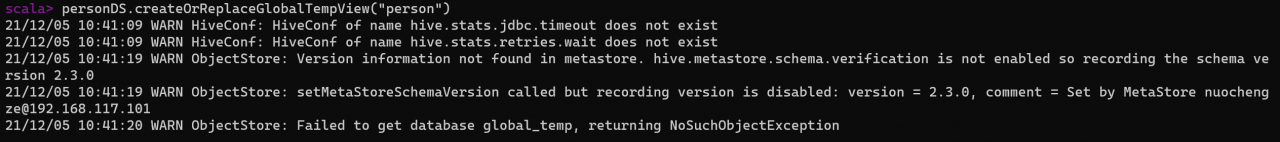

The error message is shown in the screenshot above.

Cause analysis

The main cause is that Spark has not been given Hive's configuration file. Copy Hive's hive-site.xml into Spark's conf directory:

cp ../hive/conf/hive-site.xml ../spark/conf/hive-site.xml
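The copy step can be wrapped in a small check so that a wrong path fails loudly. This is only a sketch: the function name `copy_hive_site` is hypothetical, and it assumes the Hive and Spark install directories each contain a `conf` subdirectory, as the relative paths above suggest. It uses `cp` rather than `mv` so that Hive keeps its own copy of the file.

```shell
# Hypothetical helper: copy Hive's hive-site.xml into Spark's conf directory.
# Arguments (assumed layout): $1 = Hive install dir, $2 = Spark install dir.
copy_hive_site() {
  if [ ! -f "$1/conf/hive-site.xml" ]; then
    echo "hive-site.xml not found under $1/conf" >&2
    return 1
  fi
  # cp, not mv: Hive still needs its own configuration file.
  cp "$1/conf/hive-site.xml" "$2/conf/hive-site.xml"
}
```

With the layout used in this post it would be called as `copy_hive_site ../hive ../spark`.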

Note:

If a JDBC error is reported, you also need to copy the MySQL JDBC driver from ../hive/lib to the ../spark/jars directory.

The MySQL connector jar is in Hive's lib directory, and Spark keeps its jar packages in its jars directory.
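The driver copy can be sketched the same way. The function name `copy_mysql_driver` is hypothetical, and the file name pattern `mysql-connector*.jar` is an assumption: the exact jar name depends on the driver version installed with Hive, so adjust the pattern to match what is actually in Hive's lib directory.

```shell
# Hypothetical helper: copy the MySQL JDBC driver jar(s) from Hive's lib
# directory into Spark's jars directory.
# Arguments (assumed layout): $1 = Hive install dir, $2 = Spark install dir.
# Assumes the driver file name starts with "mysql-connector".
copy_mysql_driver() {
  found=0
  for jar in "$1"/lib/mysql-connector*.jar; do
    # When nothing matches, the pattern stays literal; skip it.
    [ -f "$jar" ] || continue
    cp "$jar" "$2/jars/"
    found=1
  done
  if [ "$found" -eq 0 ]; then
    echo "no MySQL connector jar found under $1/lib" >&2
    return 1
  fi
}
```

With the layout used in this post it would be called as `copy_mysql_driver ../hive ../spark`.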

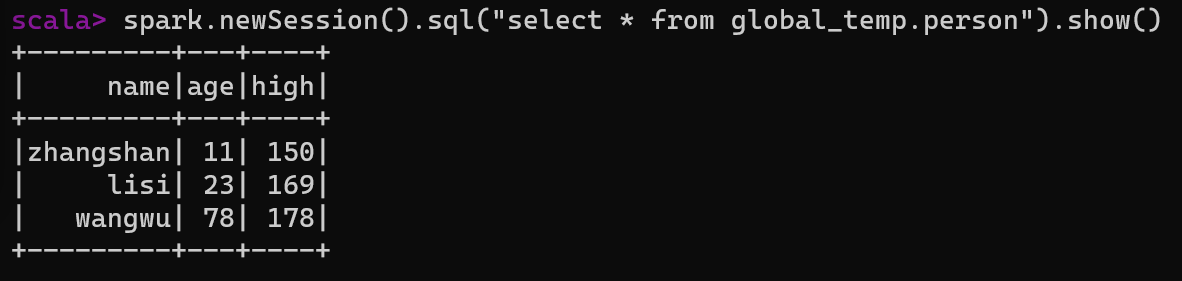

Result
