1. Development environment

IntelliJ IDEA 2019.1 + apache-maven-3.6.2 + JDK 1.8.0_111

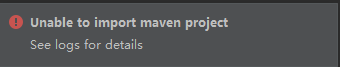

2. Problem description

After importing a Maven multi-module project, the modules are not expanded in the project view, and the dependency jars do not appear under External Libraries. IDEA also reports an error: Unable to import maven project: See logs for details

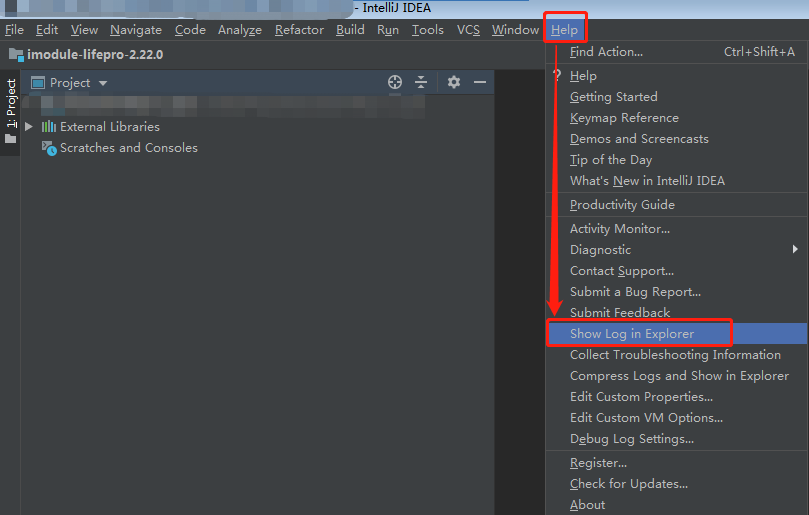

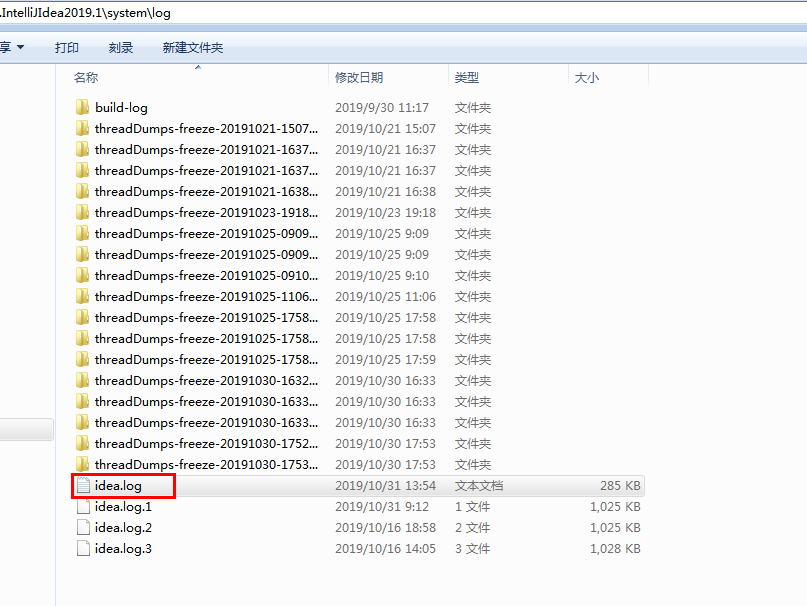

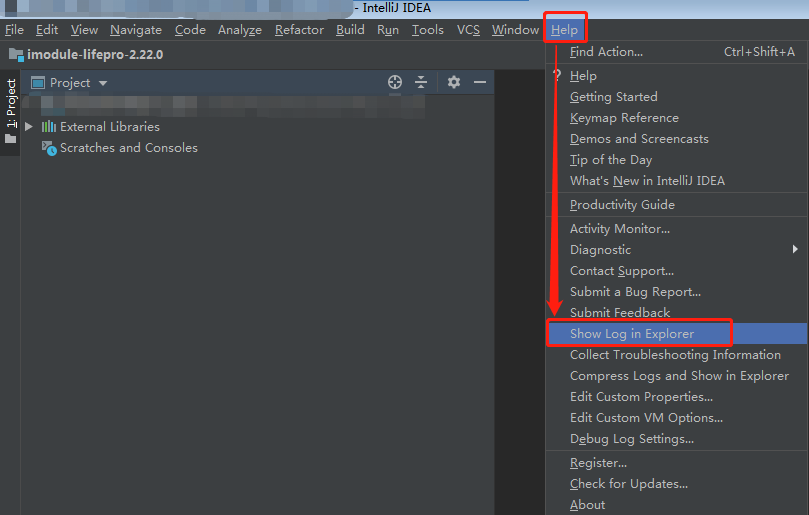

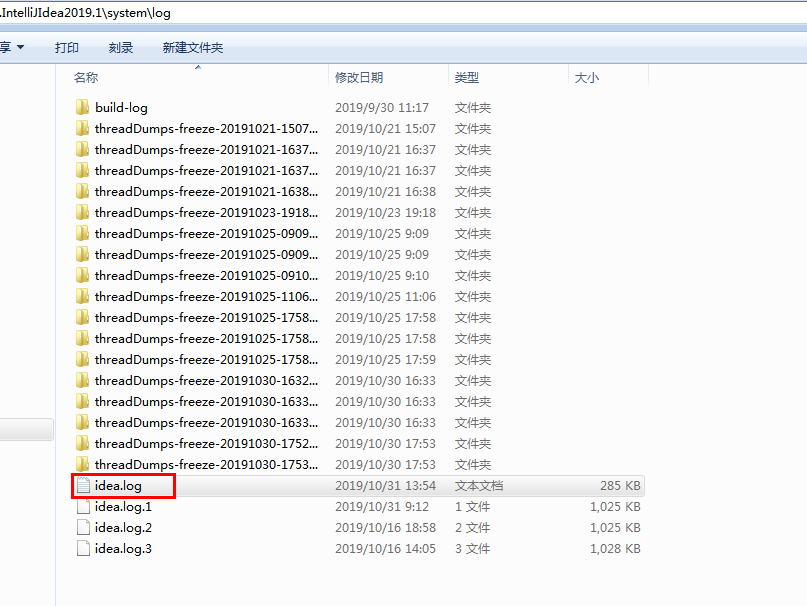

You can view the detailed error log via Help > Show Log in Explorer:

The error information is as follows:

2019-10-31 13:53:26,315 [9780537] ERROR - #org.jetbrains.idea.maven - com.google.inject.CreationException: Unable to create injector, see the following errors:

1) No implementation for org.apache.maven.model.path.PathTranslator was bound.

while locating org.apache.maven.model.path.PathTranslator

for field at org.apache.maven.model.interpolation.AbstractStringBasedModelInterpolator.pathTranslator(Unknown Source)

at org.codehaus.plexus.DefaultPlexusContainer$1.configure(DefaultPlexusContainer.java:350)

2) No implementation for org.apache.maven.model.path.UrlNormalizer was bound.

while locating org.apache.maven.model.path.UrlNormalizer

for field at org.apache.maven.model.interpolation.AbstractStringBasedModelInterpolator.urlNormalizer(Unknown Source)

at org.codehaus.plexus.DefaultPlexusContainer$1.configure(DefaultPlexusContainer.java:350)

2 errors

java.lang.RuntimeException: com.google.inject.CreationException: Unable to create injector, see the following errors:

1) No implementation for org.apache.maven.model.path.PathTranslator was bound.

while locating org.apache.maven.model.path.PathTranslator

for field at org.apache.maven.model.interpolation.AbstractStringBasedModelInterpolator.pathTranslator(Unknown Source)

at org.codehaus.plexus.DefaultPlexusContainer$1.configure(DefaultPlexusContainer.java:350)

2) No implementation for org.apache.maven.model.path.UrlNormalizer was bound.

while locating org.apache.maven.model.path.UrlNormalizer

for field at org.apache.maven.model.interpolation.AbstractStringBasedModelInterpolator.urlNormalizer(Unknown Source)

at org.codehaus.plexus.DefaultPlexusContainer$1.configure(DefaultPlexusContainer.java:350)

2 errors

at com.google.inject.internal.Errors.throwCreationExceptionIfErrorsExist(Errors.java:543)

at com.google.inject.internal.InternalInjectorCreator.initializeStatically(InternalInjectorCreator.java:159)

at com.google.inject.internal.InternalInjectorCreator.build(InternalInjectorCreator.java:106)

at com.google.inject.Guice.createInjector(Guice.java:87)

at com.google.inject.Guice.createInjector(Guice.java:69)

at com.google.inject.Guice.createInjector(Guice.java:59)

at org.codehaus.plexus.DefaultPlexusContainer.addComponent(DefaultPlexusContainer.java:344)

at org.codehaus.plexus.DefaultPlexusContainer.addComponent(DefaultPlexusContainer.java:332)

at org.jetbrains.idea.maven.server.Maven3ServerEmbedderImpl.customizeComponents(Maven3ServerEmbedderImpl.java:556)

at org.jetbrains.idea.maven.server.Maven3ServerEmbedderImpl.customize(Maven3ServerEmbedderImpl.java:526)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at sun.rmi.server.UnicastServerRef.dispatch(UnicastServerRef.java:324)

at sun.rmi.transport.Transport$1.run(Transport.java:200)

at sun.rmi.transport.Transport$1.run(Transport.java:197)

at java.security.AccessController.doPrivileged(Native Method)

at sun.rmi.transport.Transport.serviceCall(Transport.java:196)

at sun.rmi.transport.tcp.TCPTransport.handleMessages(TCPTransport.java:568)

at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler.run0(TCPTransport.java:826)

at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler.lambda$run$0(TCPTransport.java:683)

at java.security.AccessController.doPrivileged(Native Method)

at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler.run(TCPTransport.java:682)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

at sun.rmi.transport.StreamRemoteCall.exceptionReceivedFromServer(StreamRemoteCall.java:283)

at sun.rmi.transport.StreamRemoteCall.executeCall(StreamRemoteCall.java:260)

at sun.rmi.server.UnicastRef.invoke(UnicastRef.java:161)

at java.rmi.server.RemoteObjectInvocationHandler.invokeRemoteMethod(RemoteObjectInvocationHandler.java:227)

at java.rmi.server.RemoteObjectInvocationHandler.invoke(RemoteObjectInvocationHandler.java:179)

at com.sun.proxy.$Proxy111.customize(Unknown Source)

at sun.reflect.GeneratedMethodAccessor584.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at com.intellij.execution.rmi.RemoteUtil.invokeRemote(RemoteUtil.java:175)

at com.intellij.execution.rmi.RemoteUtil.access$200(RemoteUtil.java:38)

at com.intellij.execution.rmi.RemoteUtil$1$1$1.compute(RemoteUtil.java:156)

at com.intellij.openapi.util.ClassLoaderUtil.runWithClassLoader(ClassLoaderUtil.java:66)

at com.intellij.execution.rmi.RemoteUtil.executeWithClassLoader(RemoteUtil.java:227)

at com.intellij.execution.rmi.RemoteUtil$1$1.invoke(RemoteUtil.java:153)

at com.sun.proxy.$Proxy111.customize(Unknown Source)

at org.jetbrains.idea.maven.server.MavenEmbedderWrapper.doCustomize(MavenEmbedderWrapper.java:96)

at org.jetbrains.idea.maven.server.MavenEmbedderWrapper.onWrappeeCreated(MavenEmbedderWrapper.java:49)

at org.jetbrains.idea.maven.server.RemoteObjectWrapper.getOrCreateWrappee(RemoteObjectWrapper.java:42)

at org.jetbrains.idea.maven.server.MavenEmbedderWrapper.doCustomize(MavenEmbedderWrapper.java:96)

at org.jetbrains.idea.maven.server.MavenEmbedderWrapper.lambda$customizeForResolve$1(MavenEmbedderWrapper.java:69)

at org.jetbrains.idea.maven.server.RemoteObjectWrapper.perform(RemoteObjectWrapper.java:76)

at org.jetbrains.idea.maven.server.MavenEmbedderWrapper.customizeForResolve(MavenEmbedderWrapper.java:68)

at org.jetbrains.idea.maven.project.MavenProjectsTree.resolve(MavenProjectsTree.java:1272)

at org.jetbrains.idea.maven.project.MavenProjectsProcessorResolvingTask.perform(MavenProjectsProcessorResolvingTask.java:45)

at org.jetbrains.idea.maven.project.MavenProjectsProcessor.doProcessPendingTasks(MavenProjectsProcessor.java:135)

at org.jetbrains.idea.maven.project.MavenProjectsProcessor.access$000(MavenProjectsProcessor.java:32)

at org.jetbrains.idea.maven.project.MavenProjectsProcessor$2.run(MavenProjectsProcessor.java:109)

at org.jetbrains.idea.maven.utils.MavenUtil.lambda$runInBackground$5(MavenUtil.java:458)

at com.intellij.openapi.application.impl.ApplicationImpl$1.run(ApplicationImpl.java:311)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2019-10-31 13:53:26,316 [9780538] ERROR - #org.jetbrains.idea.maven - IntelliJ IDEA 2019.1 Build #IU-191.6183.87

2019-10-31 13:53:26,316 [9780538] ERROR - #org.jetbrains.idea.maven - JDK: 1.8.0_202-release; VM: OpenJDK 64-Bit Server VM; Vendor: JetBrains s.r.o

2019-10-31 13:53:26,316 [9780538] ERROR - #org.jetbrains.idea.maven - OS: Windows 7

2019-10-31 13:53:26,330 [9780552] ERROR - #org.jetbrains.idea.maven - Last Action: CloseProject

2019-10-31 13:53:34,447 [9788669] INFO - mponents.impl.stores.StoreUtil - saveProjectsAndApp took 285 ms

2019-10-31 13:53:57,391 [9811613] WARN - gin.utils.ProfilingUtilAdapter - YourKit controller initialization failed : To profile application, you should run it with the profiler agent

2019-10-31 13:54:21,528 [9835750] INFO - mponents.impl.stores.StoreUtil - saveProjectsAndApp took 66 ms

2019-10-31 13:55:52,584 [9926806] INFO - ide.actions.ShowFilePathAction -

Exit code 1

2019-10-31 13:55:53,004 [9927226] INFO - mponents.impl.stores.StoreUtil - saveProjectsAndApp took 46 ms

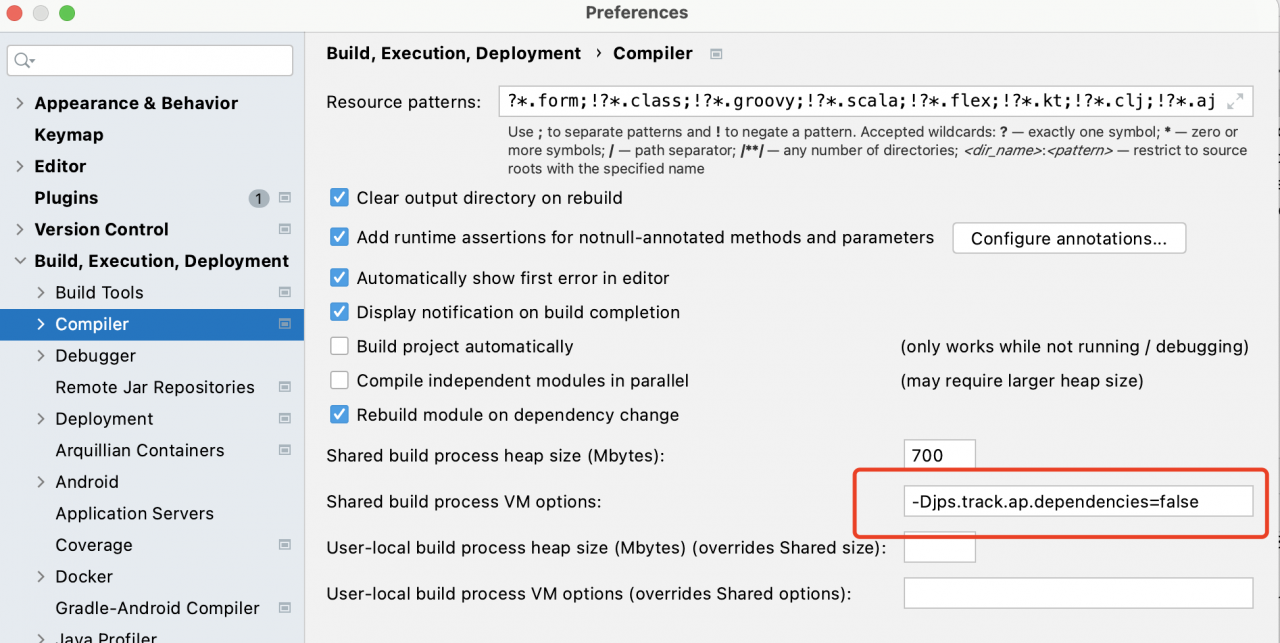
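Because the relevant lines are buried in a long stack trace, it can help to scan the log text for the error signature. The following is a minimal sketch, not part of IDEA or Maven: the function names are hypothetical, and the pattern is taken only from the log excerpt above. It assumes you have already read the contents of idea.log into a string.

```python
import re

# Pattern derived from the log excerpt above:
# "No implementation for org.apache.maven.model.path.PathTranslator was bound."
UNBOUND = re.compile(r"No implementation for (org\.apache\.maven\.[\w.]+) was bound")


def find_unbound_components(log_text):
    """Return the Maven classes reported as unbound, in order, without duplicates."""
    seen = []
    for match in UNBOUND.finditer(log_text):
        name = match.group(1)
        if name not in seen:
            seen.append(name)
    return seen


def looks_like_maven_embedder_failure(log_text):
    """True if the log contains the Guice CreationException plus unbound Maven classes."""
    return ("com.google.inject.CreationException" in log_text
            and bool(find_unbound_components(log_text)))
```

If `looks_like_maven_embedder_failure` returns True for your log, the import failure matches the one described in this post, and the Maven version is worth checking first.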

3. Solution

1. Switch to a lower version of Maven, such as apache-maven-3.5.4 or apache-maven-3.3.9. The likely cause is that IDEA 2019.1 is not compatible with the newer Maven release (apache-maven-3.6.2).

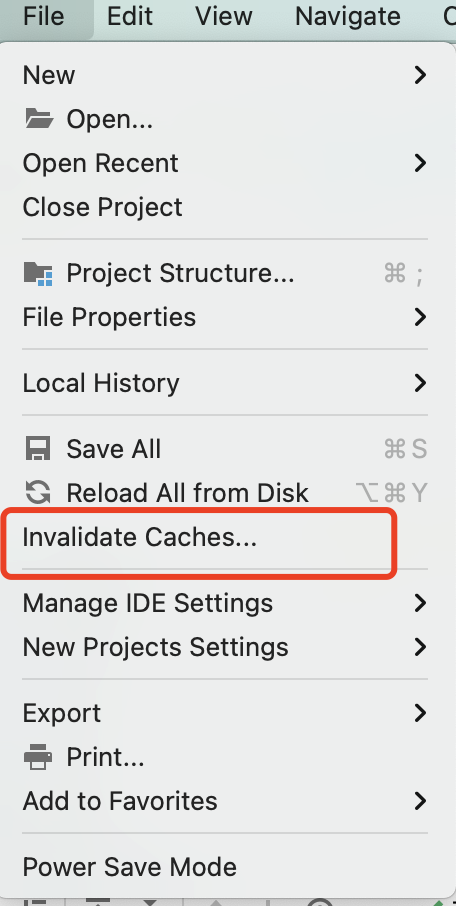
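To check which Maven installations are likely to hit this problem, a small version comparison is enough. This is only a sketch under a stated assumption: the threshold 3.6.2 comes solely from this post (3.6.2 failed, while 3.5.4 and 3.3.9 worked), and the function names are hypothetical. Versions between those were not tested here.

```python
import re

# Assumption from this post only: 3.6.2 failed with IDEA 2019.1,
# while 3.5.4 and 3.3.9 worked. Versions in between are untested.
FIRST_KNOWN_BAD = (3, 6, 2)


def parse_maven_version(text):
    """Extract (major, minor, patch) from e.g. 'apache-maven-3.6.2'."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError("no x.y.z version found in: " + text)
    return tuple(int(part) for part in match.groups())


def may_break_idea_2019_1(text):
    """True if the version is at or above the first version seen to fail."""
    return parse_maven_version(text) >= FIRST_KNOWN_BAD
```

For example, `may_break_idea_2019_1("apache-maven-3.6.2")` is True, while the two versions recommended above both give False.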

2. Clear the IDEA caches (File > Invalidate Caches / Restart) and re-import the project.
