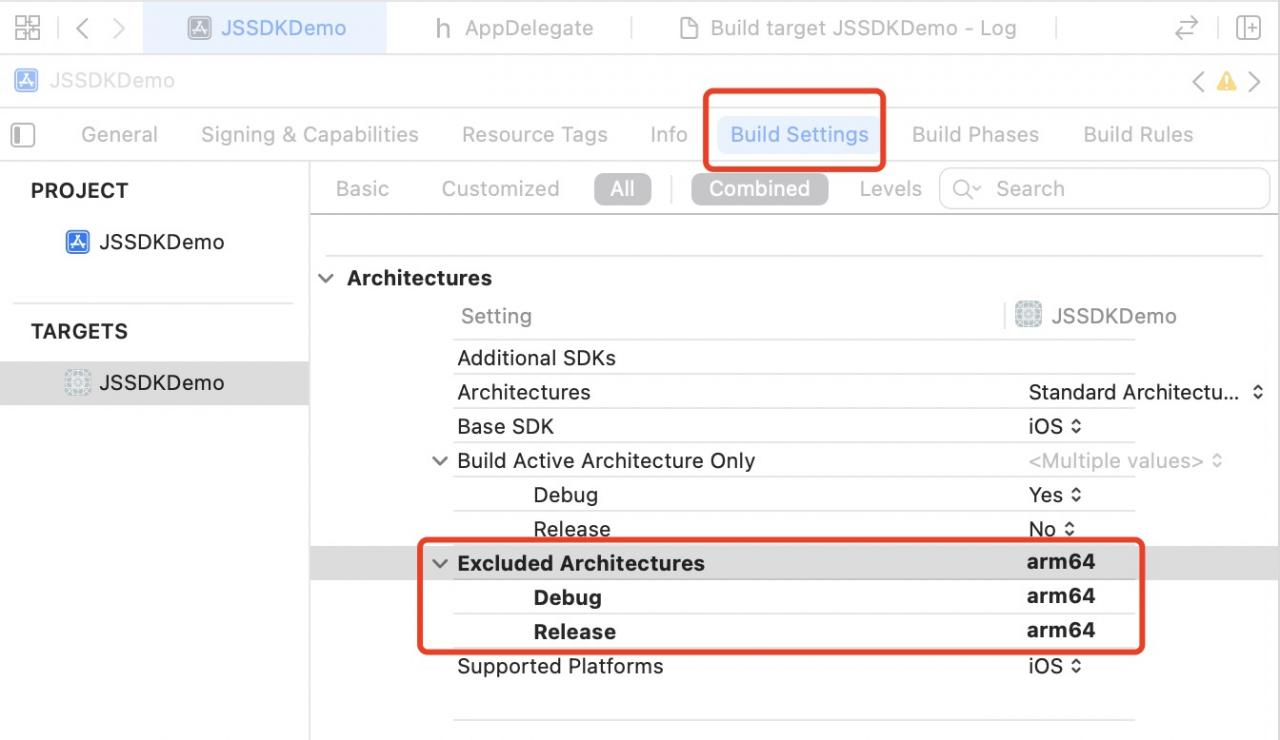

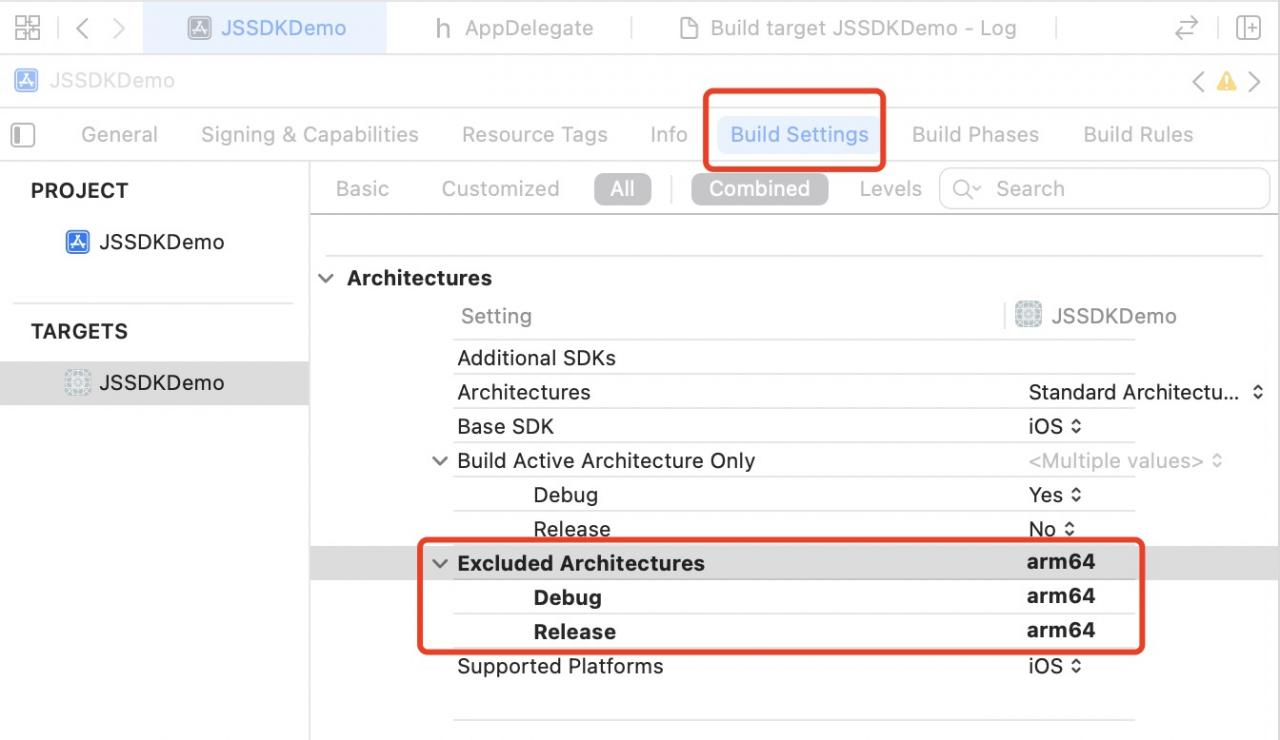

Development environment: iOS 12 + Xcode 12.0

Solution:

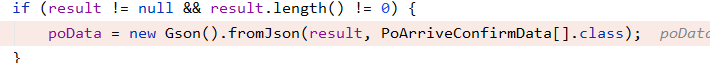

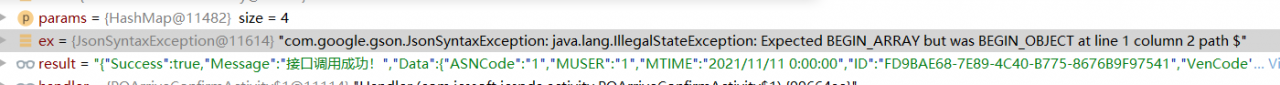

This error occurs when Gson parses a JSON string into an object and the structure of the incoming JSON does not match the target class.

Solution:

Either change the backend method so that the structure of its return value matches the specified class,

or change the structure of the class used for deserialization on the front end. In short, the data being parsed must match the structure of the class it is converted into.

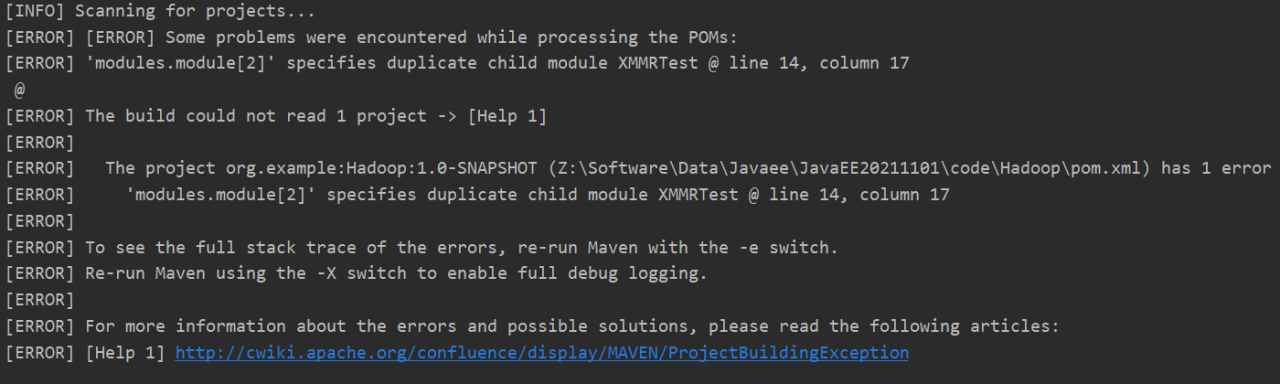

The project reports the following error:

Cause: a duplicate entry in the project's pom.xml.

When the module was removed earlier, only its files were deleted; the project configuration was left in place. Creating a module with the same name again then triggered the error.

Redis version: 6.0 or later

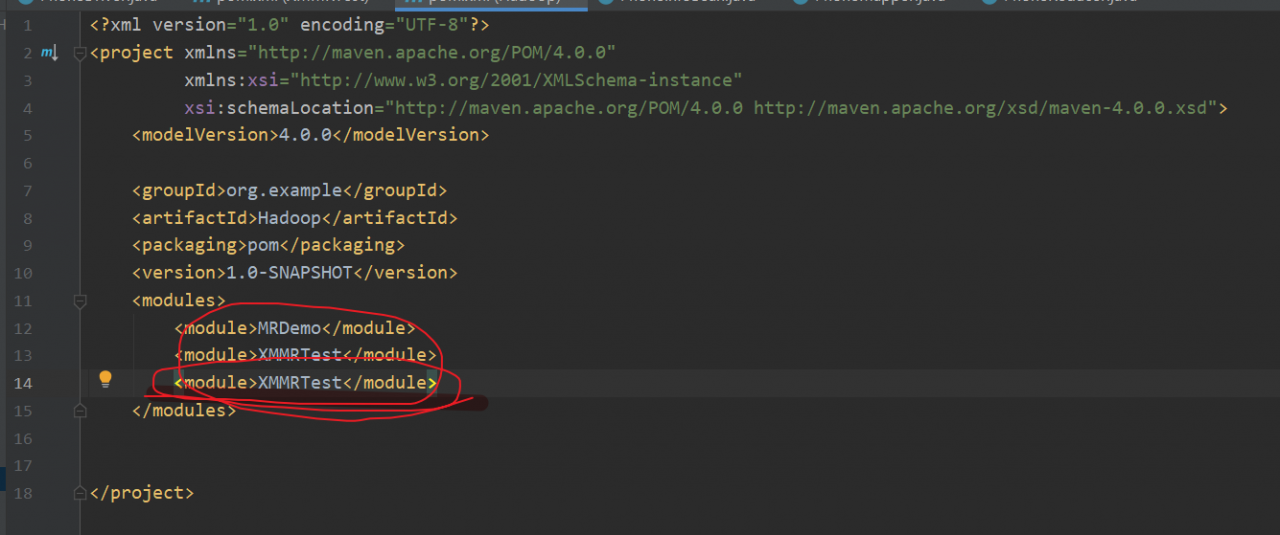

Error record: the Redis instance that failed to start was configured as a replica (slave) node on port 6380.

Error reason:

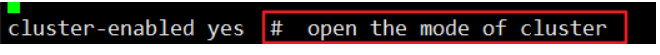

The cause is that some configuration files (such as the .conf files used here) do not allow a comment on the same line as a directive. The trailing comment is parsed as additional arguments to that directive, as in a line like `port 6380 # replica port`. Because the directive takes no such arguments, or the arguments are in the wrong format, an error is reported.

as shown in the figure:

Change the comment to a separate line to solve the problem.

One lesson from this: if Redis fails to start, check the configured log file first.

In OpenCV 3, the CV_FOURCC macro is used to specify the codec, for example:

writer.open("image.jpg", CV_FOURCC('M', 'J', 'P', 'G'), fps, size);

This line names the output file of the cv::VideoWriter object `writer` image.jpg, selects the MJPG codec (short for Motion JPEG), and passes the frame rate in frames per second and the frame size.

Unfortunately, in OpenCV 4 (4.5.4-dev), the CV_FOURCC macro has been replaced by the static function cv::VideoWriter::fourcc. If we continue to use CV_FOURCC, compilation fails with:

error: ‘CV_FOURCC’ was not declared in this scope

Here is a simple use of fourcc in OpenCV 4:

writer.open("image.jpg", cv::VideoWriter::fourcc('M', 'J', 'P', 'G'), fps, size);

To show the standard use of fourcc and how video is written in OpenCV 4, here is a complete example that records video from a camera:

    #include <opencv2/core.hpp>
    #include <opencv2/videoio.hpp>
    #include <opencv2/highgui.hpp>
    #include <iostream>
    #include <stdio.h>

    using namespace cv;
    using namespace std;

    int main(int, char**)
    {
        Mat src;
        // use default camera as video source
        VideoCapture cap(0);
        // check if we succeeded
        if (!cap.isOpened()) {
            cerr << "ERROR! Unable to open camera\n";
            return -1;
        }
        // get one frame from camera to know frame size and type
        cap >> src;
        // check if we succeeded
        if (src.empty()) {
            cerr << "ERROR! blank frame grabbed\n";
            return -1;
        }
        bool isColor = (src.type() == CV_8UC3);

        //--- INITIALIZE VIDEOWRITER
        VideoWriter writer;
        int codec = VideoWriter::fourcc('M', 'J', 'P', 'G'); // select desired codec (must be available at runtime)
        double fps = 25.0;                                   // framerate of the created video stream
        string filename = "./live.avi";                      // name of the output video file
        writer.open(filename, codec, fps, src.size(), isColor);
        // check if we succeeded
        if (!writer.isOpened()) {
            cerr << "Could not open the output video file for write\n";
            return -1;
        }

        //--- GRAB AND WRITE LOOP
        cout << "Writing videofile: " << filename << endl
             << "Press any key to terminate" << endl;
        for (;;)
        {
            // check if we succeeded
            if (!cap.read(src)) {
                cerr << "ERROR! blank frame grabbed\n";
                break;
            }
            // encode the frame into the videofile stream
            writer.write(src);
            // show live and wait for a key with timeout long enough to show images
            imshow("Live", src);
            if (waitKey(5) >= 0)
                break;
        }
        // the videofile will be closed and released automatically in VideoWriter destructor
        return 0;
    }

Preface

Running configure while installing PostgreSQL from source fails with the following error:

    configure: error: readline library not found
    If you have readline already installed, see config.log for details on the
    failure. It is possible the compiler isn't looking in the proper directory.
    Use --without-readline to disable readline support.

Solution:

Check whether the readline package is installed on the system:

    rpm -qa | grep readline

Install the readline-devel package:

    yum -y install readline-devel

Run configure again; it should now succeed.

The official documentation describes the --without-readline option as follows:

--without-readline

Prevents use of the Readline library (and libedit as well). This option disables command-line editing and history in psql, so it is not recommended.

Note: you can pass `--without-readline` to configure to avoid this error, but the PostgreSQL documentation does not recommend it.

problem

MSBUILD : error MSB3428: The Visual C++ component "VCBuild.exe" could not be loaded. To solve this problem, 1) install .NET Framework 2.0 SDK; 2) install Microsoft Visual Stu

Solution:

Run the following command as Administrator

npm install --global --production windows-build-tools

If the command then stops with the following message, rerun it from a PowerShell window opened as Administrator:

Please restart this script from an administrative PowerShell

fatal: unable to connect to gitee.com:

go.com[0: 180.97.125.228]: errno=Unknown error

Solution:

Open your .gitconfig file and delete the following entries; after that, pushes work normally:

    [url "[email protected]"]
        insteadOf = https://github.com/:
    [url "git://"]
        insteadOf = https://
    [url "https://"]
        insteadOf = git://

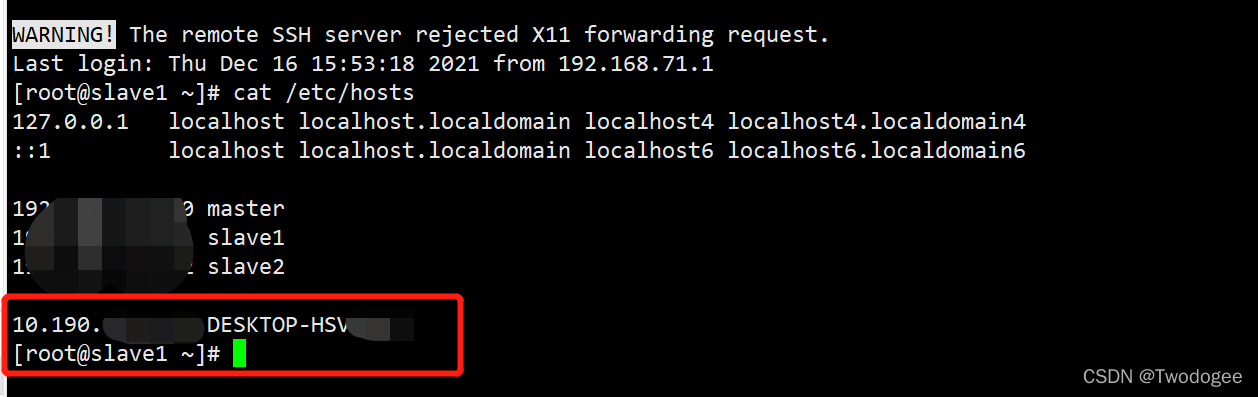

Submitting a Spark job remotely from IDEA fails with java.io.IOException: Failed to connect to DESKTOP-H

1. Error log

    Exception in thread "main" java.lang.reflect.UndeclaredThrowableException
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1713)
        at org.apache.spark.deploy.SparkHadoopUtil.runAsSparkUser(SparkHadoopUtil.scala:63)
        at org.apache.spark.executor.CoarseGrainedExecutorBackend$.run(CoarseGrainedExecutorBackend.scala:188)
        at org.apache.spark.executor.CoarseGrainedExecutorBackend$.main(CoarseGrainedExecutorBackend.scala:293)
        at org.apache.spark.executor.CoarseGrainedExecutorBackend.main(CoarseGrainedExecutorBackend.scala)
    Caused by: org.apache.spark.SparkException: Exception thrown in awaitResult:
        at org.apache.spark.util.ThreadUtils$.awaitResult(ThreadUtils.scala:205)
        at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:75)
        at org.apache.spark.rpc.RpcEnv.setupEndpointRefByURI(RpcEnv.scala:101)
        at org.apache.spark.executor.CoarseGrainedExecutorBackend$$anonfun$run$1.apply$mcV$sp(CoarseGrainedExecutorBackend.scala:201)
        at org.apache.spark.deploy.SparkHadoopUtil$$anon$2.run(SparkHadoopUtil.scala:64)
        at org.apache.spark.deploy.SparkHadoopUtil$$anon$2.run(SparkHadoopUtil.scala:63)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:422)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)
        ... 4 more
    Caused by: java.io.IOException: Failed to connect to DESKTOP-HSVM
        at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:245)
        at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:187)
        at org.apache.spark.rpc.netty.NettyRpcEnv.createClient(NettyRpcEnv.scala:198)
        at org.apache.spark.rpc.netty.Outbox$$anon$1.call(Outbox.scala:194)
        at org.apache.spark.rpc.netty.Outbox$$anon$1.call(Outbox.scala:190)
        at java.util.concurrent.FutureTask.run(FutureTask.java:266)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:748)
    Caused by: io.netty.channel.AbstractChannel$AnnotatedConnectException: Connection refused: DESKTOP-HSVM
        at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
        at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)
        at io.netty.channel.socket.nio.NioSocketChannel.doFinishConnect(NioSocketChannel.java:323)
        at io.netty.channel.nio.AbstractNioChannel$AbstractNioUnsafe.finishConnect(AbstractNioChannel.java:340)
        at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:633)
        at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:580)
        at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:497)
        at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:459)
        at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)
        at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)
        ... 1 more
    Caused by: java.net.ConnectException: Connection refused
        ... 11 more

2. Cause analysis

When IDEA submits a Spark job to the remote cluster, the executors on the cluster must connect back to the driver on the local machine to return results. The cluster cannot reach the local machine by its hostname, so the connection fails:

Caused by: java.io.IOException: Failed to connect to DESKTOP-HSVM

3. Solution

Add the local machine's hostname (DESKTOP-HSVM) and IP address to the /etc/hosts file on the remote cluster, as shown in the following figure