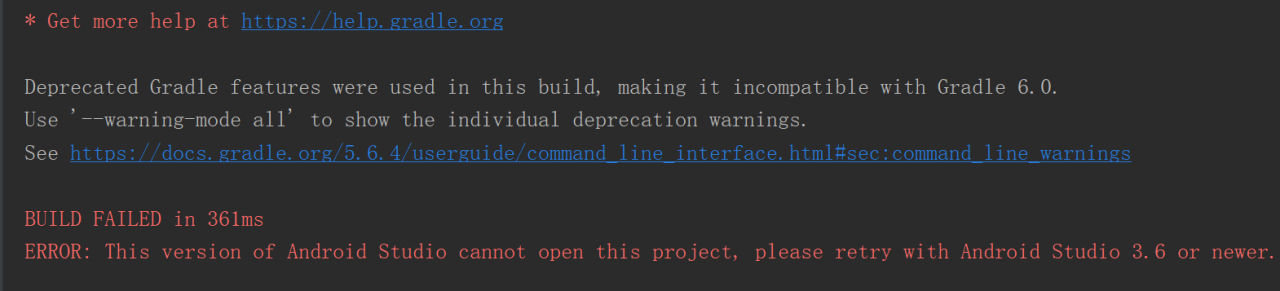

The project is built with React and uses bpmn-js for its process designer. Everything had been working fine, but after the project was updated, the process design page suddenly started reporting these errors:

unhandled error in event listener TypeError: bo.get is not a function

unhandled error in event listener Error: plane base already exists

The code itself had not been changed.

Here are the troubleshooting steps I followed:

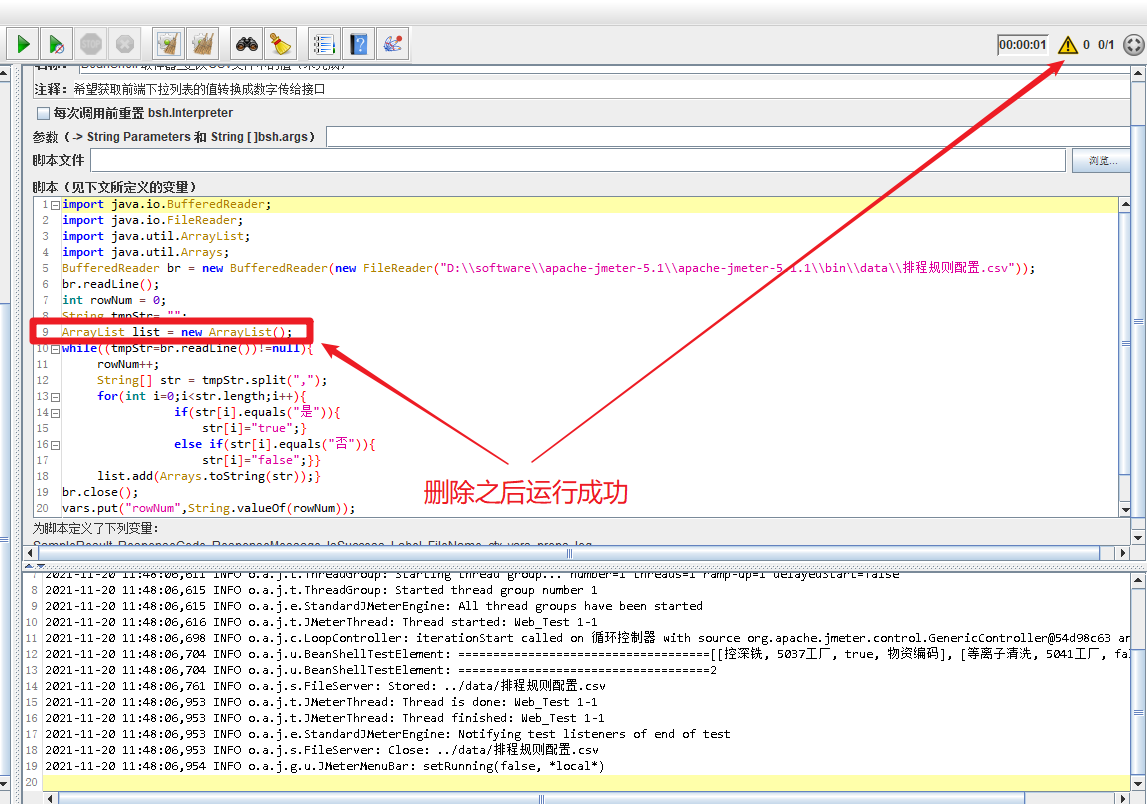

1. Based on the error messages, locate the code that throws the error, comment it out, and check whether the page then runs. If it does, the problem lies in the commented-out code.

2. Following step 1, I commented out the offending code. The page then ran, but the process diagram did not render. The commented-out code called the process component's own methods, so my preliminary conclusion was that the problem lay in the imported process component.

3. To check whether the component itself was at fault, I downloaded the official bpmn-js example project and ran it: everything worked. When I copied the official code into my own project, however, the same error appeared. At that point it was clear that the problem lay in the installed component packages rather than in my code.

4. Comparing the package versions in my package.json with those in the official project showed that my project used newer versions of the bpmn packages. I changed them to the version numbers used by the official project.

5. Delete the node_modules folder, reinstall the dependencies, and run the project again: everything works.
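Step 4 is easy to get wrong by eye when a project has many dependencies. The TypeScript sketch that follows compares two dependency maps and lists every package whose version spec differs from the reference project. The function name `diffDependencies` and the versions in `myDeps` are hypothetical illustrations; only the `officialDeps` values come from the official project versions listed at the end of this post.

```typescript
// A dependency map as found under "dependencies" in package.json.
type DependencyMap = Record<string, string>;

interface VersionMismatch {
  name: string;
  mine: string | undefined; // undefined if the package is missing from my project
  reference: string;
}

// Report every package declared by the reference project whose version spec
// differs from (or is missing in) my project. Extra packages in my project are ignored.
function diffDependencies(mine: DependencyMap, reference: DependencyMap): VersionMismatch[] {
  const mismatches: VersionMismatch[] = [];
  for (const [name, refSpec] of Object.entries(reference)) {
    const mySpec = mine[name];
    if (mySpec !== refSpec) {
      mismatches.push({ name, mine: mySpec, reference: refSpec });
    }
  }
  return mismatches;
}

// Versions used by the official example project.
const officialDeps: DependencyMap = {
  "bpmn-js": "^6.3.4",
  "bpmn-js-properties-panel": "^0.33.1",
  "bpmn-moddle": "^6.0.0",
};

// Hypothetical versions standing in for "my" project; replace with your own package.json.
const myDeps: DependencyMap = {
  "bpmn-js": "^8.7.0",
  "bpmn-js-properties-panel": "^0.33.1",
  "bpmn-moddle": "^7.0.0",
  react: "^17.0.2",
};

for (const m of diffDependencies(myDeps, officialDeps)) {
  console.log(`${m.name}: mine=${m.mine ?? "(missing)"} official=${m.reference}`);
}
// prints:
// bpmn-js: mine=^8.7.0 official=^6.3.4
// bpmn-moddle: mine=^7.0.0 official=^6.0.0
```

This compares the version specs as written in package.json, not the versions actually resolved into node_modules; for those, check the lockfile or the `version` field in each package's own package.json under node_modules.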

If an imported component throws errors even though you are using it exactly as the official documentation describes, check whether the installed package version is the cause. Package versions are a common source of pitfalls.

These are the package versions used by the official bpmn-js project, with which my own project now works normally:

"bpmn-js": "^6.3.4",

"bpmn-js-properties-panel": "^0.33.1",

"bpmn-moddle": "^6.0.0"
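These versions use npm caret ranges, so a reinstall does not necessarily fetch exactly the listed version. As a sketch of what the ranges permit, the TypeScript function that follows checks whether a concrete version satisfies a caret range, following npm's caret rule. `satisfiesCaret` is a hypothetical helper written for illustration and ignores pre-release tags; in a real project the `semver` package's `satisfies()` does this job.

```typescript
// Parse "MAJOR.MINOR.PATCH" into numbers; pre-release tags are not handled in this sketch.
function parseVersion(v: string): [number, number, number] {
  const parts = v.split(".").map(Number);
  if (parts.length !== 3 || parts.some((n) => !Number.isInteger(n) || n < 0)) {
    throw new Error(`unsupported version: ${v}`);
  }
  return [parts[0], parts[1], parts[2]];
}

// Negative if a < b, 0 if equal, positive if a > b.
function compare(a: [number, number, number], b: [number, number, number]): number {
  for (let i = 0; i < 3; i++) {
    if (a[i] !== b[i]) return a[i] - b[i];
  }
  return 0;
}

// True if `version` satisfies the caret range `range` (e.g. "^6.3.4").
// The caret allows changes that do not modify the left-most non-zero component:
// ^6.3.4 means >=6.3.4 <7.0.0, and ^0.33.1 means >=0.33.1 <0.34.0.
function satisfiesCaret(version: string, range: string): boolean {
  if (!range.startsWith("^")) throw new Error(`not a caret range: ${range}`);
  const lower = parseVersion(range.slice(1));
  const [major, minor, patch] = lower;
  let upper: [number, number, number];
  if (major > 0) upper = [major + 1, 0, 0];
  else if (minor > 0) upper = [0, minor + 1, 0];
  else upper = [0, 0, patch + 1];
  const v = parseVersion(version);
  return compare(v, lower) >= 0 && compare(v, upper) < 0;
}

console.log(satisfiesCaret("6.9.0", "^6.3.4"));   // true
console.log(satisfiesCaret("8.7.0", "^6.3.4"));   // false
console.log(satisfiesCaret("0.34.0", "^0.33.1")); // false
```

Under these rules, `^6.3.4` can pull in a newer 6.x release of bpmn-js but never 7.0.0 or later, and `^0.33.1` keeps bpmn-js-properties-panel on 0.33.x. A version outside the declared range only goes away once the spec in package.json is changed, which is why step 4 was needed before reinstalling.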