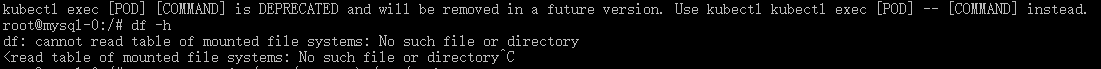

Symptom

df -h cannot list the mounted file systems and fails with:

df: cannot read table of mounted file systems: No such file or directory

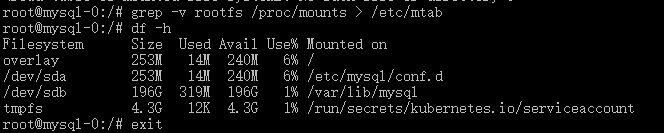

Solution: rebuild /etc/mtab from /proc/mounts, leaving out the rootfs entry:

grep -v rootfs /proc/mounts > /etc/mtab
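To make clear what the command above writes into /etc/mtab, here is a minimal Python sketch of the same filtering step. The function name `filter_mounts` and the sample mount table are hypothetical and only illustrate the behaviour of `grep -v rootfs`; the sketch does not touch any real system file.

```python
def filter_mounts(mounts_text: str, exclude: str = "rootfs") -> str:
    """Drop every line that contains `exclude`, like `grep -v rootfs`."""
    kept = [line for line in mounts_text.splitlines() if exclude not in line]
    # Keep a trailing newline when anything is left, as grep output would have.
    return "\n".join(kept) + ("\n" if kept else "")


# Hypothetical contents of /proc/mounts, for illustration only.
sample_mounts = (
    "rootfs / rootfs rw 0 0\n"
    "/dev/sda1 / ext4 rw,relatime 0 0\n"
    "proc /proc proc rw 0 0\n"
)

filtered = filter_mounts(sample_mounts)
print(filtered, end="")
```

Only the lines that do not mention rootfs survive, which is the content that ends up in /etc/mtab.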

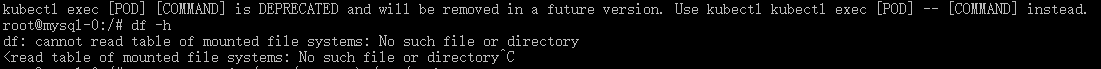

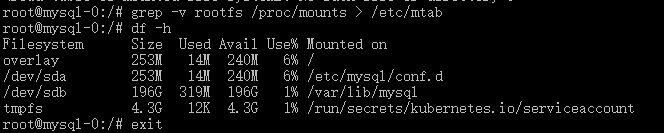

The data comes from two sources, NumPy and torch, so some tensors end up on the CPU while others are on the GPU.

z = mean.clone().detach() + eps * torch.exp(logstd)

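The line above raises an error in the original source code. It is modified as follows:

eps = eps.cuda()

z = mean.cuda() + eps * torch.exp(logstd).cuda()

In other words, call .cuda() on every tensor that was created from NumPy data (for example after a reshape), so that all operands are on the same device.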
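As a hedged illustration of the same fix, the sketch below moves every operand to one device before computing z. The tensor shapes and values are made up, and it uses `.to(device)` instead of `.cuda()` so that it also runs on a machine without a GPU; that substitution is an assumption of this example, not part of the original code.

```python
import numpy as np
import torch

# Pick the GPU when one is available, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Hypothetical inputs: mean and logstd already live on the target device,
# while eps starts out as a NumPy array on the CPU.
mean = torch.zeros(4, device=device)
logstd = torch.zeros(4, device=device)
eps_np = np.random.randn(4).astype(np.float32)

# Convert the NumPy array to a tensor and move it to the same device.
eps = torch.from_numpy(eps_np).to(device)

# All operands now share one device, so the expression is valid.
z = mean + eps * torch.exp(logstd)
print(z.device)
```

With mean and logstd set to zero, z simply equals eps, which makes the result easy to check.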

Harbor's prepare step reports the following error:

prepare base dir is set to /root/harbor

no config file: /root/harbor/harbor.yml

Reason: the script looks for a configuration file named harbor.yml, but only the template harbor.yml.tmpl is present.

Solution:

Rename harbor.yml.tmpl to harbor.yml:

mv harbor.yml.tmpl harbor.yml

npm i @dcloudio/uni-cli-i18n

The command fails because of the PowerShell execution policy, which has to be changed manually:

1. Run VS Code as administrator.
2. Run Get-ExecutionPolicy. It prints Restricted, which means script execution is disabled.
3. Run Set-ExecutionPolicy RemoteSigned.
4. Run Get-ExecutionPolicy again. It should now print RemoteSigned.

After that, the command runs without the error.

The SSL connection could not be established, see inner exception.

Solution: update the system CA certificates:

sudo yum update ca-certificates

criterion = nn.MSELoss()

criterion(a, b)

Here a has dtype=torch.float and b has dtype=torch.int64, so the two dtypes do not match.

Convert both tensors to float, for example with b.float().
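A minimal sketch of the dtype fix, with made-up tensor values: the integer target is converted with `.float()` before it is passed to the loss.

```python
import torch
import torch.nn as nn

criterion = nn.MSELoss()

# Hypothetical data: a is a float prediction, b is an int64 target.
a = torch.tensor([0.5, 1.5, 2.5], dtype=torch.float, requires_grad=True)
b = torch.tensor([1, 2, 3], dtype=torch.int64)

# Convert the target to float so both arguments share the same dtype.
loss = criterion(a, b.float())
loss.backward()

print(loss.item())
```

Converting at the call site leaves b itself unchanged, which is convenient when the integer values are still needed elsewhere.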

Error when adding data to a DataTable: column 1 cannot be found

The code is as follows:

    StreamReader sr = new StreamReader(fs, encoding);
    string strLine = "";
    string[] DataLine = null;
    // Only one column (index 0) is created here.
    dt.Columns.Add(new DataColumn());
    while ((strLine = sr.ReadLine()) != null)
    {
        DataLine = strLine.Split(',');
        DataRow dr = dt.NewRow();
        for (int i = 0; i < DataLine.Length; i++)
        {
            // Fails for i >= 1 because column i does not exist.
            dr[i] = DataLine[i];
        }
        dt.Rows.Add(dr);
    }

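Reason: columns must be created before rows are added. The code above creates only one column but writes to index i for every field, so any line with more than one field fails with "column 1 cannot be found". Because the number of columns is not known in advance, the matching columns cannot be created up front. The workaround below writes each line into the DataTable as a single string, so one column is enough.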

    StreamReader sr = new StreamReader(fs, encoding);
    string strLine = "";
    dt.Columns.Add(new DataColumn());
    while ((strLine = sr.ReadLine()) != null)
    {
        DataRow dr = dt.NewRow();
        dr[0] = strLine;
        dt.Rows.Add(dr);
    }

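This is a compromise rather than a complete fix: each line is stored unsplit in a single column, so the fields still have to be split when the data is read back.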
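One possible alternative to the single-column compromise is to size the table to the widest row before filling it. The sketch below shows that idea in Python rather than C#, using plain lists in place of a DataTable. The function name `rows_to_table` and the sample lines are hypothetical, and this is not the approach taken in the original code.

```python
from typing import Iterable, List


def rows_to_table(lines: Iterable[str], sep: str = ",") -> List[List[str]]:
    """Split each line on sep and pad short rows so every row has the same width."""
    rows = [line.rstrip("\r\n").split(sep) for line in lines]
    # The widest row decides how many columns the table needs.
    width = max((len(row) for row in rows), default=0)
    return [row + [""] * (width - len(row)) for row in rows]


# Hypothetical input with a varying number of fields per line.
sample_lines = ["a,b,c", "d", "e,f"]
table = rows_to_table(sample_lines)
for row in table:
    print(row)
```

The trade-off is that the whole input has to be read once before any row is stored, which may not suit very large files.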

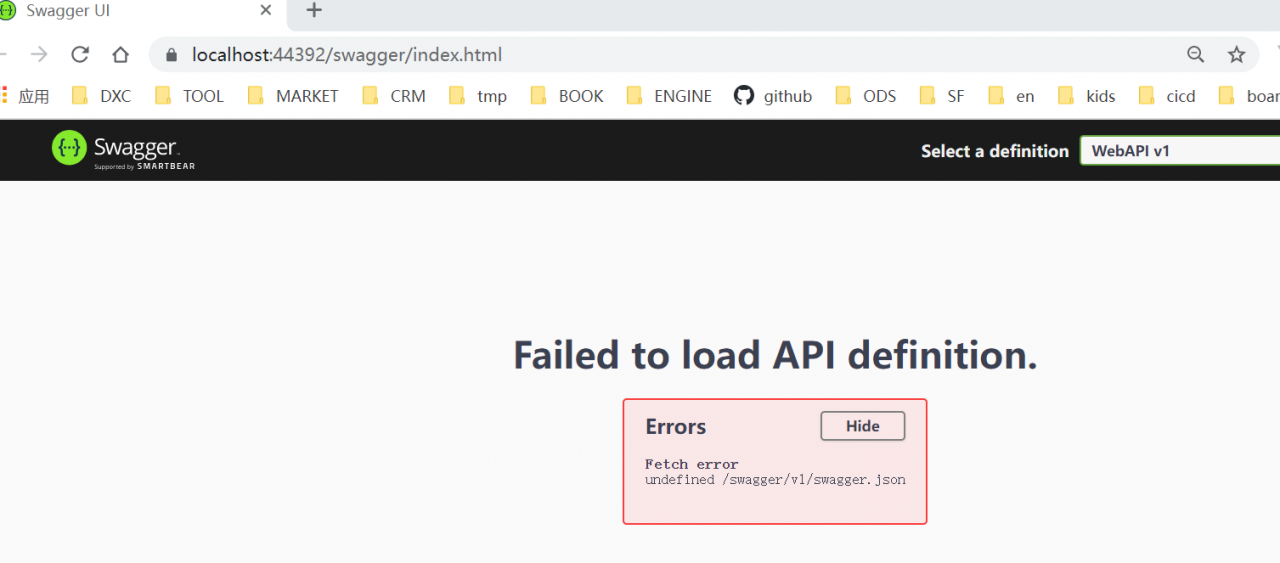

Troubleshooting: open the Swagger JSON endpoint directly in the browser to see the underlying error:

https://localhost:44392/swagger/v1/swagger.json

TensorFlow reports the following error when training a model on the GPU:

InternalError: Failed copying input tensor from /job:localhost/replica:0/task:0/device:CPU:0 to /job:localhost/replica:0/task:0/device:GPU:0 in order to run _EagerConst: Dst tensor is not initialized.

Solution:

Link: https://stackoverflow.com/questions/37313818/tensorflow-dst-tensor-is-not-initialized

The main cause is that batch_size is too large for the data to fit in GPU memory. Reducing batch_size usually lets training run normally.

By default, TensorFlow allocates as much GPU memory as it can. The GPU configuration can be changed so that memory is allocated on demand instead.
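One way to request on-demand allocation in TensorFlow 2 is the memory growth setting. The sketch below assumes a TensorFlow 2 installation; the helper name `enable_memory_growth` is hypothetical. It must run before any operation touches the GPU, otherwise TensorFlow raises a RuntimeError.

```python
import tensorflow as tf


def enable_memory_growth() -> int:
    """Ask TensorFlow to grow GPU memory on demand; return the number of GPUs configured."""
    gpus = tf.config.list_physical_devices("GPU")
    for gpu in gpus:
        # Allocate GPU memory as needed instead of reserving it all at startup.
        tf.config.experimental.set_memory_growth(gpu, True)
    return len(gpus)


configured = enable_memory_growth()
print(f"memory growth enabled on {configured} GPU(s)")
```

On a machine without a GPU the function configures nothing and returns 0, so the same script can run in both environments.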

During long training sessions in Jupyter Notebook, this error can also occur because GPU memory is not released in time. One fix is to define the following function:

    import gc

    import tensorflow as tf
    # These imports assume a Keras version that still exposes the session API.
    from keras.backend import clear_session, get_session, set_session

    # Reset the Keras session to release GPU memory
    def reset_keras():
        # The model lives in the global scope; change the name as you need.
        global classifier

        sess = get_session()
        clear_session()
        sess.close()
        sess = get_session()

        try:
            del classifier
        except NameError:
            pass  # no global model to delete

        print(gc.collect())  # if it does something you should see a number as output

        # Use the same config as you used to create the session
        config = tf.compat.v1.ConfigProto()
        config.gpu_options.per_process_gpu_memory_fraction = 1
        config.gpu_options.visible_device_list = "0"
        set_session(tf.compat.v1.Session(config=config))

Call reset_keras() whenever GPU memory needs to be released. For example:

    dense_layers = [0, 1, 2]
    layer_sizes = [32, 64, 128]
    conv_layers = [1, 2, 3]

    for dense_layer in dense_layers:
        for layer_size in layer_sizes:
            for conv_layer in conv_layers:
                reset_keras()
                # train your model here