Vsftpd is an FTP server program, and SELinux is the mandatory access control (MAC) security module that CentOS enables by default. Because SELinux restricts vsftpd by default, you may run into the following FTP errors:

226 transfer done (but failed to open directory)

550 failed to change directory

550 create directory operation failed

553 Could not create file.

Or the server stops responding after the client sends the LIST command, then drops the connection after a timeout (500 OOPS: vsftpd: chroot).

These errors usually mean that vsftpd lacks the permissions it needs, most likely because SELinux is blocking it. A common suggestion online is to disable SELinux entirely, but that weakens the system's security. A better approach is to enable only the SELinux permissions that vsftpd needs.

To confirm that SELinux is the cause, temporarily switch it to permissive mode:

setenforce 0   # temporarily put SELinux into permissive mode

Then retry the FTP operation. If the client can now list directories and upload and download files, SELinux is the cause.
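You can also wrap the permissive-mode test in a small helper that records the current mode and restores it afterwards, so SELinux is not accidentally left permissive. This is a minimal bash sketch. It assumes it runs as root on a system with the standard `getenforce`/`setenforce` tools, and the function name `selinux_diag` is hypothetical.

```shell
#!/usr/bin/env bash
# Minimal sketch (assumes bash, root privileges, and the standard SELinux
# tools getenforce/setenforce). selinux_diag is a hypothetical helper name.

selinux_diag() {
    local prev
    prev=$(getenforce)            # prints Enforcing, Permissive or Disabled
    if [ "$prev" = "Disabled" ]; then
        echo "SELinux is disabled, so it cannot be blocking vsftpd" >&2
        return 1
    fi
    setenforce 0                  # permissive: denials are logged, not enforced
    echo "SELinux is now $(getenforce). Retry the FTP client, then press Enter."
    read -r _                     # wait for the operator to finish testing
    setenforce "$prev"            # restore the mode we started with
}
```

Run `selinux_diag` as root, test the FTP client while it waits, then press Enter to return SELinux to its previous mode.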

Solution: run getsebool -a | grep ftp to list the FTP-related SELinux booleans and see which permissions are enabled:

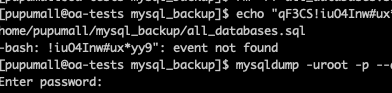

getsebool -a | grep ftp

In the output below, on means the boolean is enabled and off means it is disabled. Some booleans have already been enabled on this system. On an unmodified system they are typically all off.

ftpd_anon_write --> off

ftpd_connect_all_unreserved --> off

ftpd_connect_db --> on

ftpd_full_access --> on

ftpd_use_cifs --> off

ftpd_use_fusefs --> off

ftpd_use_nfs --> off

ftpd_use_passive_mode --> off

httpd_can_connect_ftp --> off

httpd_enable_ftp_server --> off

tftp_anon_write --> off

tftp_home_dir --> on

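Of these, ftp_home_dir and allow_ftpd_full_access must both be on for vsftpd to access the FTP root directory and transfer files. On newer policies, allow_ftpd_full_access is an alias for ftpd_full_access, which is the name shown in the output above.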
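Before changing anything, you can check which of the required booleans are still off. The bash sketch below uses a hypothetical helper named `missing_bools`. It reads `getsebool -a` output on stdin and prints every named boolean that is off or missing from the listing, so pass the names exactly as the listing shows them.

```shell
#!/usr/bin/env bash
# Sketch: report SELinux booleans that are not "on" in `getsebool -a` output.
# missing_bools is a hypothetical helper; input lines look like "name --> on".

missing_bools() {
    local out b state
    out=$(cat)                    # capture the whole listing from stdin
    for b in "$@"; do
        # exact match on the first field, then take the value after "-->"
        state=$(printf '%s\n' "$out" | awk -v name="$b" '$1 == name && $2 == "-->" { print $3 }')
        if [ "$state" != "on" ]; then
            echo "$b"             # off, or not present in the listing at all
        fi
    done
}
```

A typical call is `getsebool -a | missing_bools ftpd_full_access ftp_home_dir`. Empty output means every named boolean is already on.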

Run the following command:

setsebool -P ftp_home_dir 1

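setsebool -P allow_ftpd_full_access 1

Note that each of these commands usually takes ten seconds or more to run. The -P flag makes the change persist across reboots, which requires rewriting the policy on disk.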
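`setsebool` also accepts several `name=value` pairs in a single call. This means you can apply both changes together and skip any boolean that is already on. Here is a hedged bash sketch. The function name `enable_bools` is hypothetical, and the sketch assumes it runs as root.

```shell
#!/usr/bin/env bash
# Sketch: persistently enable the named SELinux booleans in one setsebool call,
# skipping those already on. enable_bools is a hypothetical helper name.

enable_bools() {
    local b args=()
    for b in "$@"; do
        # getsebool NAME prints a line such as "NAME --> on" or "NAME --> off"
        if ! getsebool "$b" | grep -q -- '--> on$'; then
            args+=("$b=1")
        fi
    done
    if [ "${#args[@]}" -eq 0 ]; then
        echo "all booleans already on"
        return 0
    fi
    setsebool -P "${args[@]}"     # one persistent change covering every pair
}
```

For example: `enable_bools ftp_home_dir allow_ftpd_full_access`. If a name is not defined in your policy, `setsebool` reports an error instead of changing anything silently.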

When the commands finish, switch SELinux back to enforcing mode:

setenforce 1   # return SELinux to enforcing mode

If all went well, vsftpd can now access the FTP directory and upload and download files normally.
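`setenforce` changes only the running mode. The mode used at boot comes from the `SELINUX=` line in `/etc/selinux/config`. The sketch below reports both values and fails unless SELinux is currently enforcing. The function name `check_selinux_mode` is hypothetical, and the config path is an optional argument so you can point it at another file.

```shell
#!/usr/bin/env bash
# Sketch: show runtime and boot-time SELinux mode; succeed only if enforcing.
# check_selinux_mode is a hypothetical helper; default config path as on CentOS.

check_selinux_mode() {
    local cfg=${1:-/etc/selinux/config} mode boot
    mode=$(getenforce)                           # Enforcing, Permissive or Disabled
    boot=$(sed -n 's/^SELINUX=//p' "$cfg")       # value of the SELINUX= line only
    echo "runtime: $mode, at boot: $boot"
    [ "$mode" = "Enforcing" ]                    # exit status reflects runtime mode
}
```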

If the problem persists, the FTP directory's file permissions may be insufficient. Running chmod -R 777 path (where path is the FTP directory) grants read, write and execute permission to all users on that directory tree, and then retrying usually solves the problem.
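`chmod -R 777` gives every user full access to the whole tree. Before running it, it can help to see which directory is actually missing permissions. The FTP user needs the execute (`x`) bit on every parent directory to reach the target, not only on the target itself. This bash sketch uses a hypothetical helper named `show_path_perms` and assumes GNU `stat` and `realpath`. It prints the octal mode and owner of a directory and each of its parents up to `/`.

```shell
#!/usr/bin/env bash
# Sketch: list octal permissions and owner for a path and all its parents.
# show_path_perms is a hypothetical helper; relies on GNU stat/realpath.

show_path_perms() {
    local d
    d=$(realpath -- "$1") || return 1   # resolve to an absolute path
    while :; do
        stat -c '%a %U:%G %n' -- "$d"   # e.g. "755 root:root /var/ftp"
        [ "$d" = "/" ] && break
        d=$(dirname -- "$d")            # move one level up
    done
}
```

A line whose mode lacks the `x` bit for the FTP user's class (owner, group or other) points to the directory that blocks access.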