Please check the logs or run fsck in order to identify the missing blocks. See the Hadoop FAQ for common causes and potential solutions.

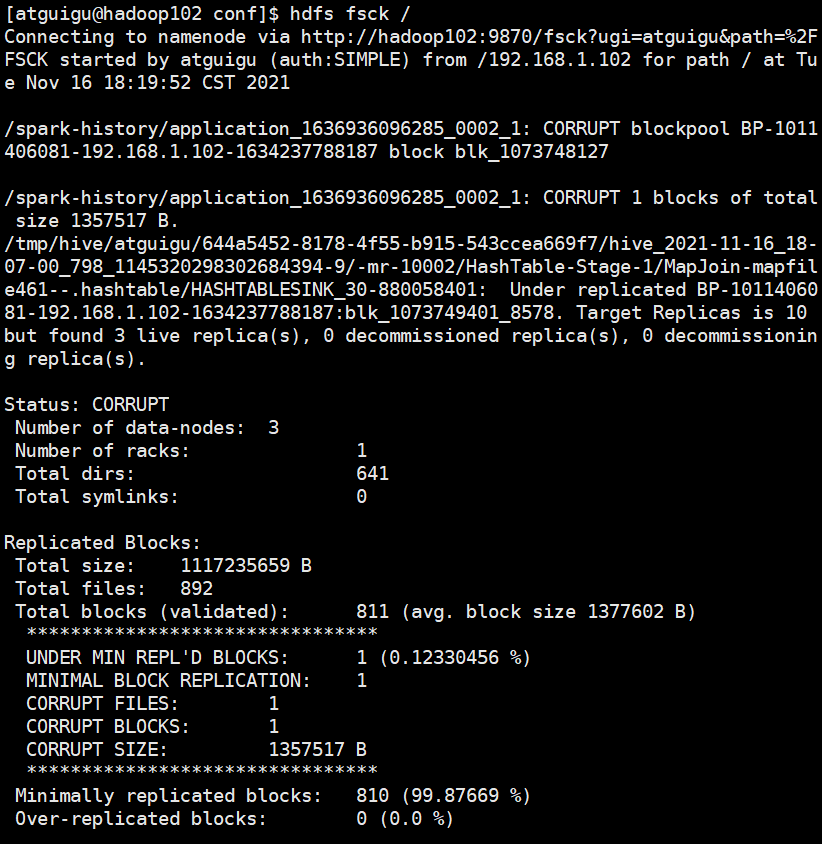

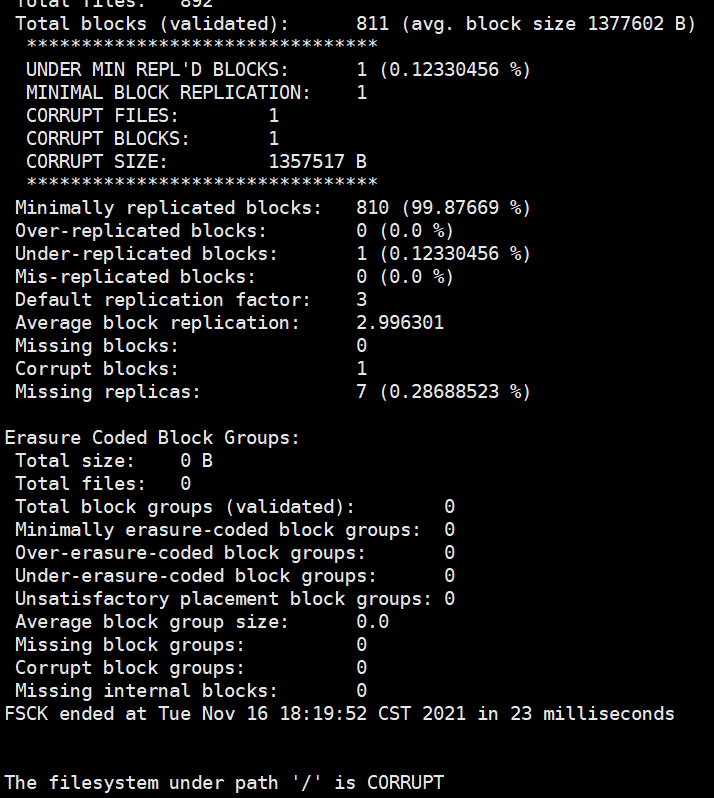

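```shell
# fsck needs a path; "/" checks the entire namespace (on Hadoop 2+, "hdfs fsck /" is the preferred form)
hadoop fsck /
```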
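To see which files the missing blocks belong to, `fsck` also accepts `-list-corruptfileblocks` (for example `hadoop fsck / -list-corruptfileblocks`), which prints one block ID and file path per line. Below is a minimal Python sketch that turns such output into a file-to-blocks mapping. It assumes a tab-separated `blk_<id>`, path line layout, which may differ between Hadoop versions. The `parse_corrupt_blocks` helper and the sample text are hypothetical.

```python
import re
from typing import Dict, List

# Assumed line format: "blk_<id><TAB><file path>"; header and summary lines are skipped.
_LINE = re.compile(r"^(blk_\d+)\s+(/\S.*)$")


def parse_corrupt_blocks(fsck_output: str) -> Dict[str, List[str]]:
    """Map each corrupt file path to the block IDs fsck reported for it."""
    files: Dict[str, List[str]] = {}
    for line in fsck_output.splitlines():
        match = _LINE.match(line.strip())
        if match:
            block_id, path = match.groups()
            files.setdefault(path, []).append(block_id)
    return files


if __name__ == "__main__":
    # Hypothetical output captured from: hadoop fsck / -list-corruptfileblocks
    sample = (
        "The list of corrupt files under path '/' are:\n"
        "blk_1073748128\t/user/demo/part-00000\n"
        "The filesystem under path '/' has 1 CORRUPT files\n"
    )
    for path, blocks in parse_corrupt_blocks(sample).items():
        print(path, blocks)
```

Lines that do not start with a block ID are ignored, so the header and summary lines that surround the list do not need to be stripped beforehand.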

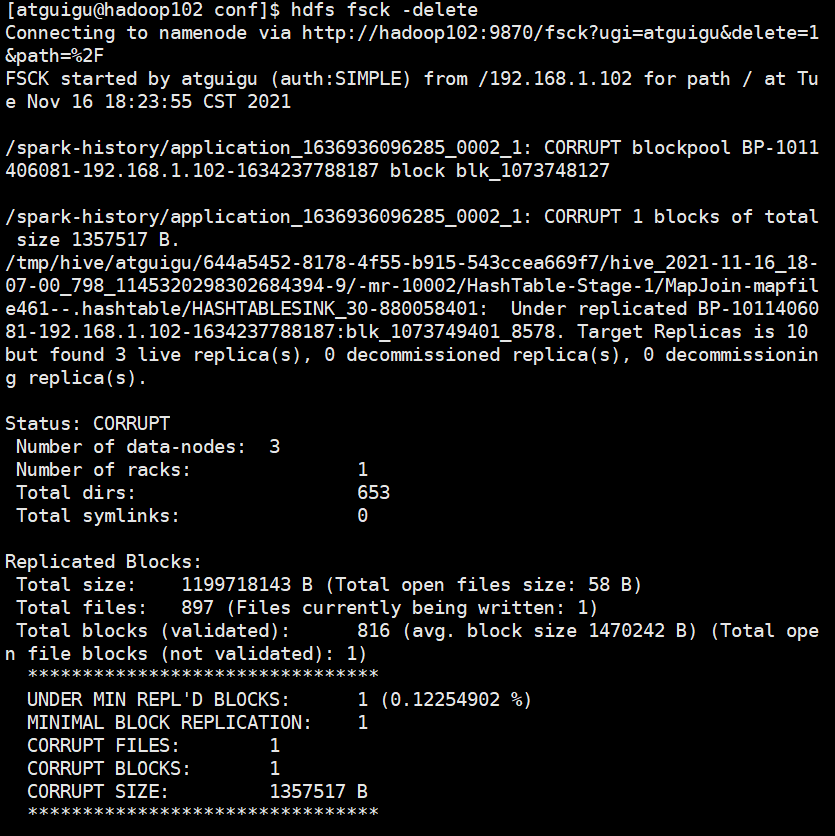

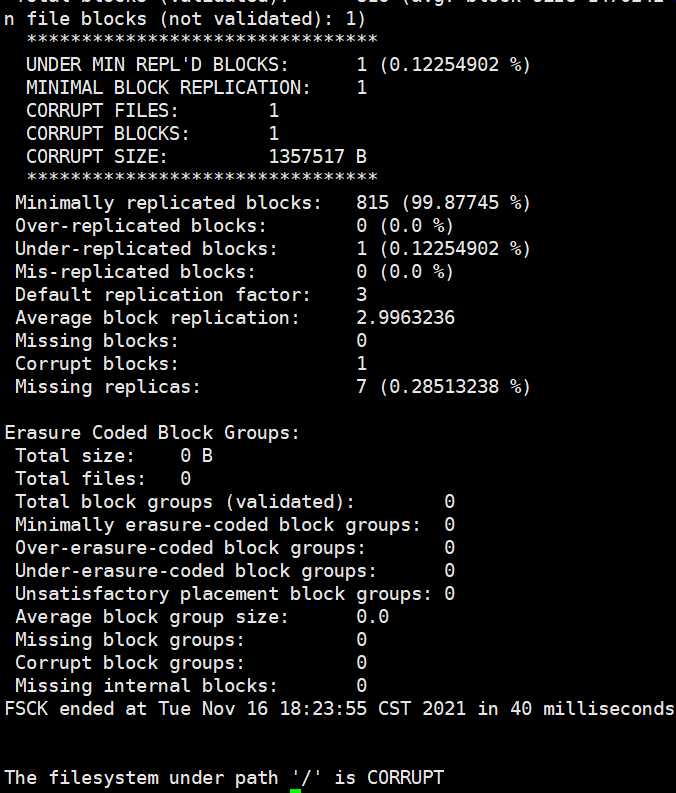

```shell
# Permanently delete the corrupted files that fsck reports under "/"
hadoop fsck / -delete
```
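Running `-delete` on `/` removes every corrupted file in the namespace at once. The more cautious variant below is a sketch that assumes the affected paths are already known, for example from `-list-corruptfileblocks`. It builds one `fsck <path> -delete` command per file so that each deletion can be reviewed before it runs. The `build_delete_commands` helper is hypothetical, and the sketch only prints the commands rather than executing them.

```python
import shlex
from typing import Iterable, List


def build_delete_commands(paths: Iterable[str], binary: str = "hadoop") -> List[List[str]]:
    """Return one 'fsck <path> -delete' argv per unique path, in sorted order."""
    return [[binary, "fsck", path, "-delete"] for path in sorted(set(paths))]


if __name__ == "__main__":
    # Hypothetical paths of files whose blocks are missing (duplicates are collapsed)
    corrupt = ["/user/demo/part-00001", "/user/demo/part-00000", "/user/demo/part-00000"]
    for argv in build_delete_commands(corrupt):
        # Review each printed command before running it, e.g. with subprocess.run(argv, check=True)
        print(shlex.join(argv))
```

Pass `binary="hdfs"` to generate `hdfs fsck ...` commands on Hadoop 2 and later.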

Cause analysis:

This problem appeared after some data was deleted on HDFS.

HDFS stores file data as blocks; blk_1073748128 is the ID of the affected block. After blk_1073748128 was deleted, the metadata still referenced it, but the block data itself was gone, so this error is reported. Because this data was no longer needed, the files with missing blocks, and with them their metadata, can simply be deleted.
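The mismatch described above, where metadata still points at a block whose data is gone, can be shown with a toy model. This simplified Python sketch is not how the NameNode is implemented. `namespace` stands in for the file-to-block metadata, and `stored_blocks` stands in for the blocks that DataNodes still hold. The file names and the second block ID are hypothetical.

```python
from typing import Dict, List, Set


def find_missing(namespace: Dict[str, List[str]], stored_blocks: Set[str]) -> Dict[str, List[str]]:
    """Return files whose metadata references blocks that no DataNode stores."""
    missing: Dict[str, List[str]] = {}
    for path, blocks in namespace.items():
        gone = [b for b in blocks if b not in stored_blocks]
        if gone:
            missing[path] = gone
    return missing


def delete_corrupt_files(namespace: Dict[str, List[str]], stored_blocks: Set[str]) -> List[str]:
    """Drop metadata for files with missing blocks (roughly the effect of fsck -delete)."""
    corrupt = sorted(find_missing(namespace, stored_blocks))
    for path in corrupt:
        del namespace[path]
    return corrupt


if __name__ == "__main__":
    namespace = {
        "/data/a.log": ["blk_1073748100"],  # hypothetical healthy file
        "/data/b.log": ["blk_1073748128"],  # its block data was deleted
    }
    stored = {"blk_1073748100"}
    print(find_missing(namespace, stored))          # {'/data/b.log': ['blk_1073748128']}
    print(delete_corrupt_files(namespace, stored))  # ['/data/b.log']
    print(find_missing(namespace, stored))          # {}
```

After the stale metadata is removed, nothing references the lost block, so the missing-block report clears.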

Similar Posts:

- [Solved] hadoop:hdfs.DFSClient: Exception in createBlockOutputStream

- [Solved] Hadoop Error: The directory item limit is exceeded: limit=1048576 items=1048576

- HDFS problem set (1): command reports error com.google.protobuf.ServiceException: java.lang.OutOfMemoryError: Java heap space

- [Solved] hadoop Configuration Modify Error: hive.ql.metadata.HiveException

- [Solved] HDFS Failed to Start NameNode Error: Premature EOF from inputStream; Failed to load FSImage file, see error(s) above for more info

- [Solved] Hbase Startup Normally but Execute Error: Server is not running yet

- [Solved] Exception in thread "main" java.net.ConnectException: Call From

- "Execution error, return code 1 from org.apache.hadoop.hive.ql.exec.MoveTask" error occurred when Hive imported local data

- [Solved] hbase ERROR: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet

- Namenode Initialize Error: java.lang.IllegalArgumentException: URI has an authority component