Spark version: 1.6.1

Scala version: 2.10

Problem scenario:

While debugging a local program in IntelliJ IDEA, an error was thrown when creating the `HiveContext`. Everything had worked in the morning; the problem appeared in the afternoon, after I wrote a small demo in the project. Here is my code:

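```scala
import cn.com.xxx.common.config.SparkConfig // project-specific helper that builds the SparkContext
import org.apache.spark.sql.hive.HiveContext

object test {

  def main(args: Array[String]): Unit = {
    // Initialise Spark in local mode with 2 worker threads
    SparkConfig.init("local[2]", this.getClass.getName)
    val sc = SparkConfig.getSparkContext()
    sc.setLogLevel("WARN")

    // The error is thrown here, when the HiveContext is created
    val hqlContext = new HiveContext(sc)

    println("hello")
    sc.stop()
  }
}
```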

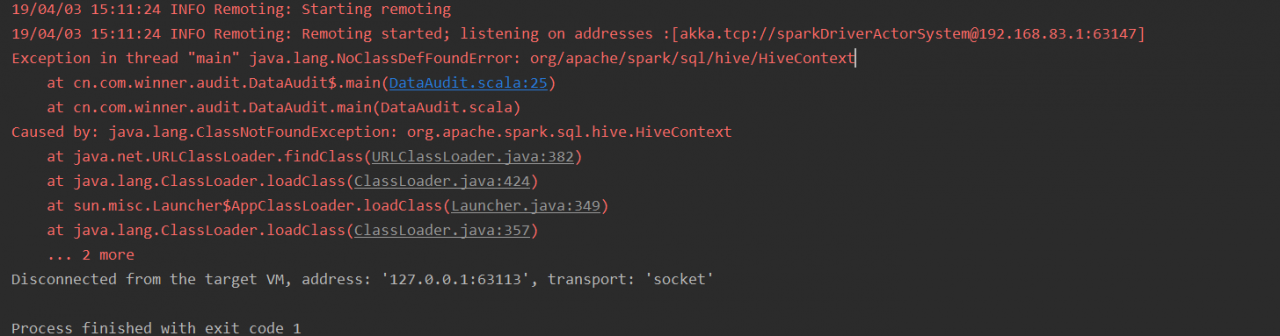

The error:

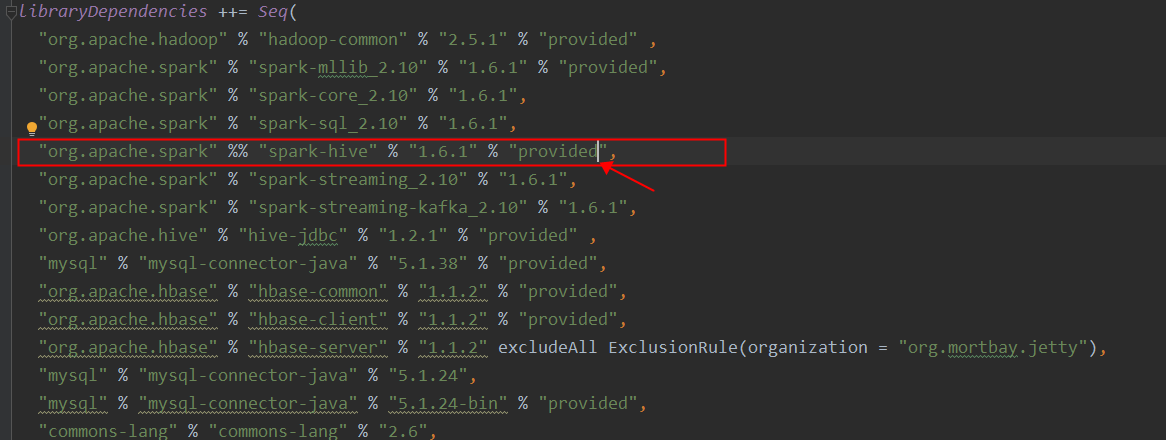

After struggling with it for a long while, I first suspected that the dependency had not been downloaded, but the package was declared in my project's build.sbt. I traced through the source code, searched Baidu, and so on, and finally located the cause: the scope setting of the spark-hive dependency was wrong:

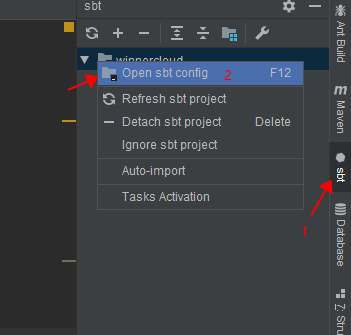
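A quick way to test the "not downloaded" suspicion, before digging into the source, is to ask the JVM whether the class can actually be loaded at run time. The sketch below is a minimal illustration, not part of the original project; the `ClasspathCheck` object and `isOnClasspath` method are hypothetical names. Run it from the same IDEA run configuration: if `spark-hive` is listed in build.sbt but excluded from the run-time classpath, the lookup for `org.apache.spark.sql.hive.HiveContext` reports `false`.

```scala
// Hypothetical diagnostic helper: is a class loadable from the current run-time classpath?
object ClasspathCheck {

  /** Returns true if `className` can be loaded by this object's class loader. */
  def isOnClasspath(className: String): Boolean =
    try {
      // initialize = false: only locate and load the class, do not run its static initializers
      Class.forName(className, false, getClass.getClassLoader)
      true
    } catch {
      case _: ClassNotFoundException => false
      case _: LinkageError           => false // e.g. NoClassDefFoundError for a missing transitive dependency
    }

  def main(args: Array[String]): Unit = {
    val target = "org.apache.spark.sql.hive.HiveContext"
    println(s"$target on classpath: ${isOnClasspath(target)}")
  }
}
```

If this prints `false` while build.sbt clearly lists spark-hive, the package was downloaded; it is simply not on the classpath used to launch `main`.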

By the way, here is where to find the build.sbt file in IDEA:

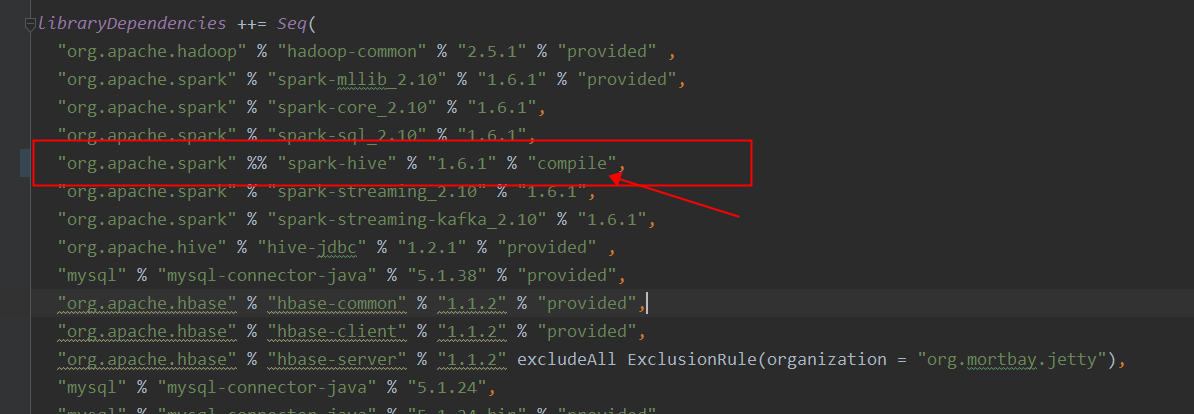

The culprit is this word; it needs to be changed to `compile`, as shown in the following figure:

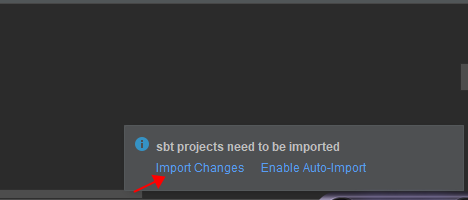
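The screenshots are not reproduced in text, so here is a sketch of what the relevant build.sbt lines typically look like. It assumes the wrong setting was `provided`, which is the most common cause of this symptom; the original screenshot may show something else. A `provided` dependency is available when compiling, but IDEA leaves it off the classpath it uses to launch `main` by default, so the code compiles and then fails when `HiveContext` is created. Omitting the configuration is equivalent to `compile`.

```scala
// build.sbt (sketch): Spark 1.6.1 built for Scala 2.10, as in this post
scalaVersion := "2.10.5" // assumption: any 2.10.x release matches the _2.10 Spark artifacts

libraryDependencies ++= Seq(
  "org.apache.spark" %% "spark-core" % "1.6.1",
  // Before (assumed): "provided" kept spark-hive off the run-time classpath in IDEA
  // "org.apache.spark" %% "spark-hive" % "1.6.1" % "provided",
  // After: explicit compile scope (the same as omitting the configuration entirely)
  "org.apache.spark" %% "spark-hive" % "1.6.1" % "compile"
)
```

If the jar is later submitted to a cluster with spark-submit, Spark's own jars are already on the cluster's classpath, which is why `provided` is a common choice for packaging; switching to `compile` mainly matters for running locally from the IDE.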

Then click the import prompt in the lower-right corner of IDEA.

Wait until the project finishes updating, then compile and run again. This time it succeeds.

A nasty pitfall; I'm recording it here so I don't fall into it again in the future.

Similar Posts:

- [Solved] SparkSQL Error: org.apache.hadoop.security.HadoopKerberosName.setRuleMechanism

- SparkSQL Use DataSet to Operate createOrReplaceGlobalTempView Error

- [Solved] Spark Programmer Compile error: object apache is not a member of package org

- [Solved] Unable to import Maven project: see logs for details when importing Maven dependent package of Spark Program

- org.apache.thrift.TApplicationException: Required field ‘client_protocol’ is unset!

- Spark Program Compilation error: object apache is not a member of package org

- Hive1.1.0 startup error reporting Missing Hive Execution Jar: lib/hive-exec-*.jar

- [Solved] Spark-HBase Error: java.lang.NoClassDefFoundError: org/htrace/Trace

- [Solved] import spark.implicits._ Red, unable to import

- Idea Run Scala Error: Exception in thread “main” java.lang.NoSuchMethodError:com.google.common.base.Preconditions.checkArgument(ZLjava/lang/String;Ljava/lang/Object;)V