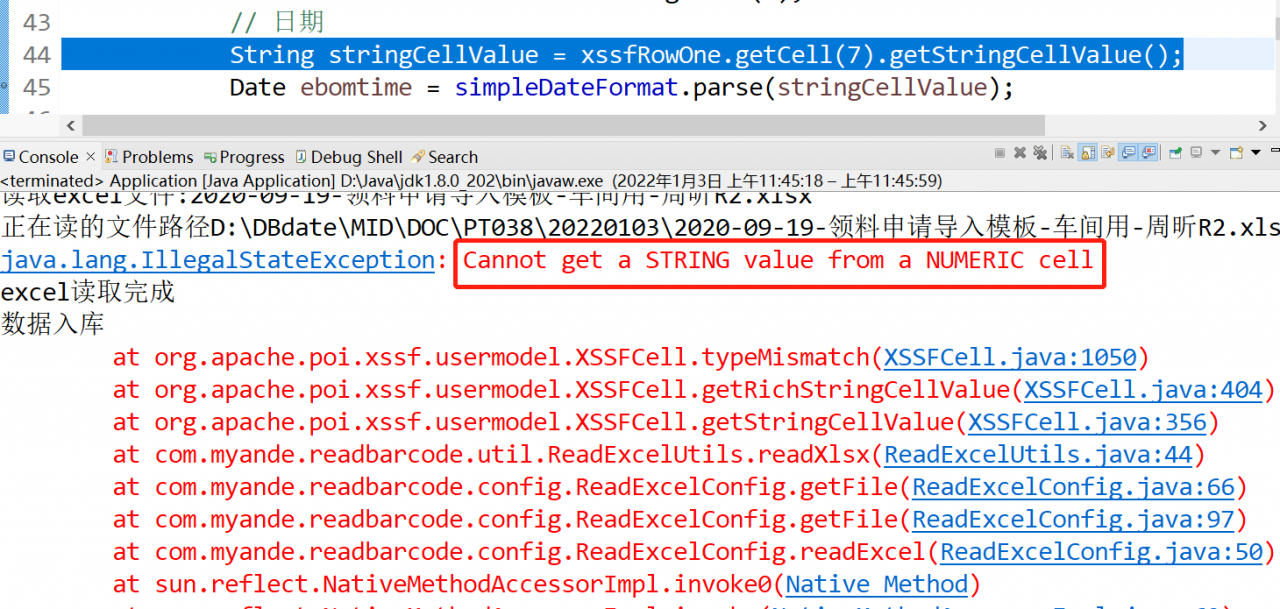

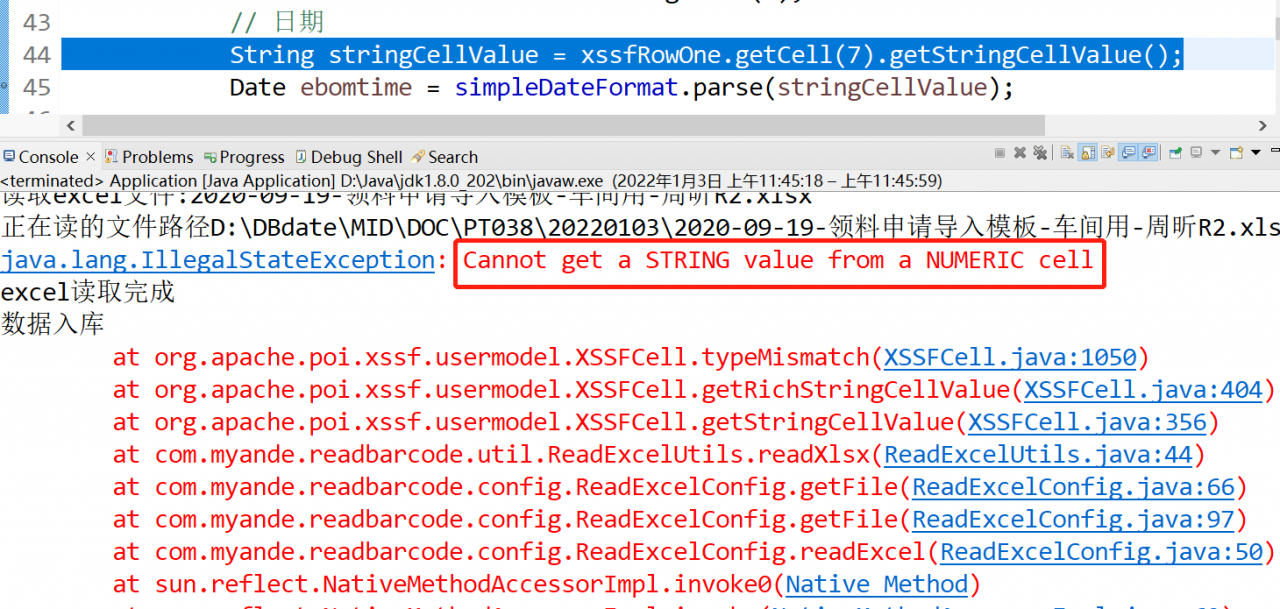

1. Error information

2. Solutions

Declare the cell type as string before calling the getStringCellValue() method:

xssfRowOne.getCell(7).setCellType(CellType.STRING);

Reason: once the file is modified on disk after it has been selected, the browser's reference to the original local file is no longer valid.

Solution:

1. In the beforeUpload hook, convert the file to Base64 (the Base64 string lives in memory, so it is unaffected by later changes to the local file), then convert it back to a File before uploading. This is cumbersome; it is worth asking the back-end team whether the endpoint could accept the data as a Blob or ArrayBuffer instead.
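For the Blob/ArrayBuffer alternative mentioned in parentheses, a minimal sketch might look like the following. It assumes the back end accepts an ordinary multipart upload and that the runtime supports `Blob.prototype.arrayBuffer()`; `snapshotFile` is a hypothetical helper name, not something from the original code. The copy has to be taken when the file is selected, before it changes on disk.

```javascript
// Sketch only: snapshotFile is a hypothetical helper, not part of any library.
// It copies the file's bytes into memory so the upload no longer depends on the file on disk.
async function snapshotFile(file) {
  // Reads the whole file into memory; the promise rejects if the file cannot be read.
  const buffer = await file.arrayBuffer();
  // Wrap the bytes in a new Blob that keeps the original MIME type.
  return new Blob([buffer], { type: file.type });
}

// Possible usage (field name 'file' assumed, matching the FormData code in this article):
// const copy = await snapshotFile(this.excelFile);
// const excel = new FormData();
// excel.append('file', copy, this.excelFile.name);
```

The trade-off is memory: the whole file is held in RAM until the upload finishes, which is the same cost the Base64 approach pays, but without the encoding overhead.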

Save the file in Base64 format:

const reader1 = new FileReader();

// Register the handler before starting the read

reader1.onload = e => {

this.base64Excel = e.target.result;

};

reader1.readAsDataURL(file);

Base64 to File method:

base64ConvertFile (urlData, filename) { // Convert a Base64 data URL back into a File

var arr = urlData.split(',');

var type = arr[0].match(/:(.*?);/)[1];

var fileExt = type.split('/')[1];

var bstr = atob(arr[1]);

var n = bstr.length;

var u8arr = new Uint8Array(n);

while (n--) {

u8arr[n] = bstr.charCodeAt(n);

}

return new File([ u8arr ], filename, {

type: type

});

},

Finally, upload the converted file through FormData:

let excel = new FormData();

let form = this.base64ConvertFile(this.base64Excel, this.excelFile.name);

excel.append('file', form);

2. When the user clicks upload, check whether the file can still be read; if it cannot, tell the user that the file has been modified and must be selected again:

this.file.slice( 0, 1 ) // only the first byte

.arrayBuffer() // try to read

.then( () => {

// The file hasn't changed, and you can send requests here

console.log( 'should be fine' );

axios({.........})

} )

.catch( (err) => {

// There is a problem with the file, terminate it here

console.log( 'failed to read' );

this.file = null; // Empty the cached file

} );

Contents

The text is truncated, or one or more characters do not match in the target code page

Cause

Solution

The value violates the integrity constraint of the column

Blank line

Not set to allow null

I had imported this data before without any errors. When importing it again today, I ran into two errors: text truncation, and a value violating a column's integrity constraint.

The first error is easy to understand: a value is longer than the column size you set. There is a catch, though.

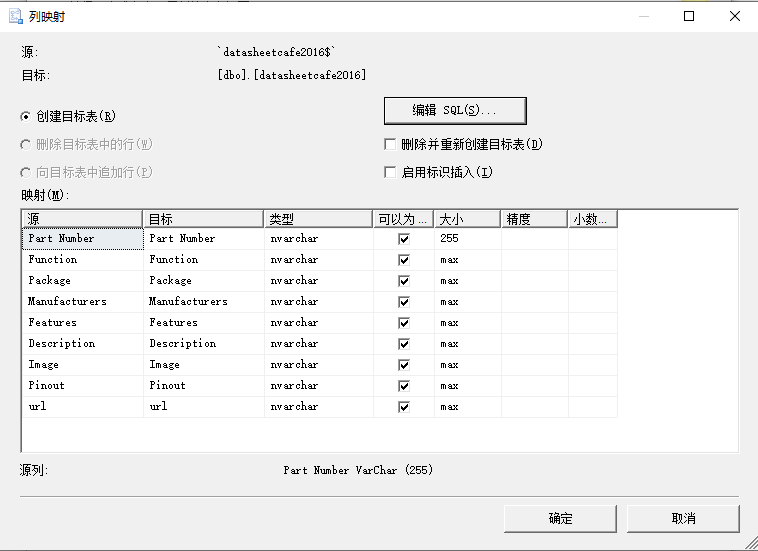

As shown in the figure, the column size can be set in the import wizard, yet the error is still reported after increasing it.

This is because the SQL Server import and export tool samples the first 8 rows of the Excel file to determine the column types of the target table. If the strings in those first 8 rows are all shorter than 255 characters, the column is set to nvarchar(255). If a later row in the Excel file is longer than 255 characters, the import fails, even if the column length was increased in the mapping settings.

As a workaround, I inserted a row at the top of the Excel data and filled every field with a long string, so that SQL Server picks the maximum size when it samples the first 8 rows.
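Before importing, it can help to find out which columns will trip over this sampling. The sketch below is a hypothetical pre-check, not part of the SQL Server tooling: it assumes the sheet has already been loaded as an array of rows (each row an array of cell values), and it takes the 8-row sample and the 255-character limit from the description above as defaults.

```javascript
// Hypothetical pre-check: report columns whose first `sampleSize` rows fit within `limit`
// characters while some later row does not, i.e. columns likely to fail on import.
function findRiskyColumns(header, rows, sampleSize = 8, limit = 255) {
  const risky = [];
  header.forEach((name, col) => {
    let sampleMax = 0; // longest value among the sampled rows
    let restMax = 0;   // longest value among the remaining rows
    rows.forEach((row, i) => {
      const len = String(row[col] ?? '').length; // empty or missing cells count as length 0
      if (i < sampleSize) {
        sampleMax = Math.max(sampleMax, len);
      } else {
        restMax = Math.max(restMax, len);
      }
    });
    if (sampleMax <= limit && restMax > limit) {
      risky.push({ column: name, sampleMax, restMax });
    }
  });
  return risky;
}
```

Any column it reports is a candidate for the long-value first row described above.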

The second error occurs because the columns after the last field contain blanks and are treated as data. Select the columns after the last field and delete them to rule out stray spaces. The import is fine as long as no auto-named columns starting with F, such as F10, show up during import.

There is another possible cause. The column had been set to allow NULL; I unchecked that setting so that NULL was not allowed, and the import then reported that a column violated data integrity.

If the column is set back to allow NULL, the problem goes away.

This is an Excel-to-text conversion script for Beyond Compare. It addresses three problems: special characters that cannot be output, workbooks with multiple sheets that cannot be compared, and files too large to fit in system memory.

' XLS_to_CSV.vbs

'

' Converts an Excel workbook to a comma-separated text file. Requires Microsoft Excel.

' Usage:

' WScript XLS_to_CSV.vbs <input file> <output file>

Option Explicit

' MsoAutomationSecurity

Const msoAutomationSecurityForceDisable = 3

' OpenTextFile iomode

Const ForReading = 1

Const ForAppending = 8

Const TristateTrue = -1

' XlFileFormat

Const xlCSV = 6 ' Comma-separated values

Const xlUnicodeText = 42

' XlSheetVisibility

Const xlSheetVisible = -1

Dim App, AutoSec, Doc, FileSys, AppProtect

Set FileSys = CreateObject("Scripting.FileSystemObject")

If FileSys.FileExists(WScript.Arguments(1)) Then

FileSys.DeleteFile WScript.Arguments(1)

End If

Set App = CreateObject("Excel.Application")

'Set AppProtect = CreateObject("Excel.Application")

On Error Resume Next

App.DisplayAlerts = False

AutoSec = App.AutomationSecurity

App.AutomationSecurity = msoAutomationSecurityForceDisable

Err.Clear

Dim I, J, SheetName, TgtFile, TmpFile, TmpFilenames(), Content

Set Doc = App.Workbooks.Open(WScript.Arguments(0), False, True)

If Err = 0 Then

I = 0

For J = 1 To Doc.Sheets.Count

If Doc.Sheets(J).Visible = xlSheetVisible Then

I = I + 1

End If

Next

ReDim TmpFilenames(I - 1)

Set TgtFile = FileSys.OpenTextFile(WScript.Arguments(1), ForAppending, True, TristateTrue)

I = 0

For J = 1 To Doc.Sheets.Count

If Doc.Sheets(J).Visible = xlSheetVisible Then

SheetName = Doc.Sheets(J).Name

TgtFile.WriteLine """SHEET " & SheetName & """"

Doc.Sheets(J).Activate

TmpFilenames(I) = FileSys.GetSpecialFolder(2) & "\" & FileSys.GetTempName

Doc.SaveAs TmpFilenames(I), xlUnicodeText

Set TmpFile = FileSys.OpenTextFile(TmpFilenames(I), ForReading, False, TristateTrue)

' Copy line by line: writing the whole file at once would lose all of its contents if the write failed.

' Line-by-line copying also avoids running out of memory on very large files.

while not TmpFile.AtEndOfStream

TgtFile.WriteLine TmpFile.ReadLine

Wend

'TgtFile.Write TmpFile.ReadAll

TmpFile.Close

If I <> UBound(TmpFilenames) Then

TgtFile.WriteLine

End If

Doc.Sheets(J).Name = SheetName

I = I + 1

End If

Next

TgtFile.Close

Doc.Close False

End If

App.AutomationSecurity = AutoSec

App.Quit

Set App = Nothing

For I = 0 To UBound(TmpFilenames)

If FileSys.FileExists(TmpFilenames(I)) Then

FileSys.DeleteFile TmpFilenames(I)

End If

Next

WScript.Sleep(1000)

'This step is to expose the failed window to the foreground for the user to close manually, which should be ignored by the On Error Resume Next catch above

App.Visible = true

'If AppProtect.Workbooks.Count = 0 Then

' 'Protected processes can not just exit, the user may be using

' AppProtect.Quit

'End If

'AppProtect.Visible = true

'Set AppProtect = Nothing

Today a developer colleague was reading data from PostgreSQL and writing it to Excel. Because of the large data volume and code that was not efficient enough, the export was too slow and the request failed. I found the following information and solved the problem by modifying the nginx configuration. The full error page is as follows:

An error occurred.

Sorry, the page you are looking for is currently unavailable.

Please try again later.

If you are the system administrator of this resource then you should check the error log for details.

Faithfully yours, nginx.

Solution:

Change localhost in the nginx configuration file to the IP address

Or

Modify the hosts file and add 127.0.0.1 localhost

location / {

proxy_pass http://localhost:8080; # change localhost to 127.0.0.1

}

Set the timeouts:

proxy_connect_timeout 300; # Timeout for establishing a connection between nginx and the back-end server

proxy_send_timeout 300; # Timeout for sending the request to the back-end server

proxy_read_timeout 600; # Timeout for reading the response from the back-end server after a successful connection

After modifying the configuration file, reload the configuration file

nginx -s reload

Error:

jxl.read.biff.BiffException: Unable to recognize OLE stream

at jxl.read.biff.CompoundFile.<init>(CompoundFile.java:116)

at jxl.read.biff.File.<init>(File.java:127)

at jxl.Workbook.getWorkbook(Workbook.java:268)

at jxl.Workbook.getWorkbook(Workbook.java:253)

at test1.main(test1.java:25)

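Cause: jxl does not support reading Excel 2007 files (*.xlsx); only Excel 2003 (*.xls) files are supported.

Solution: save the workbook in *.xls format, or read it with a library that supports *.xlsx, such as Apache POI.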
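One way to tell the two formats apart before handing a file to a reader is to look at its first bytes: legacy *.xls files are OLE2 compound documents, while *.xlsx files are ZIP archives. The sketch below is an illustration under that assumption; `detectExcelFormat` is a hypothetical helper, and a matching signature only identifies the container (a ZIP could equally be a *.docx), not a valid workbook.

```javascript
// Hypothetical helper: guess the Excel container format from the leading bytes of a file.
// `bytes` is expected to be a Uint8Array (or array of numbers) holding the start of the file.
function detectExcelFormat(bytes) {
  const OLE2 = [0xd0, 0xcf, 0x11, 0xe0, 0xa1, 0xb1, 0x1a, 0xe1]; // OLE2 compound file header
  const ZIP = [0x50, 0x4b, 0x03, 0x04];                          // "PK\x03\x04" local file header
  const startsWith = sig =>
    bytes.length >= sig.length && sig.every((b, i) => bytes[i] === b);

  if (startsWith(OLE2)) return 'xls';   // OLE2 container, as used by Excel 97-2003
  if (startsWith(ZIP)) return 'xlsx';   // ZIP container, as used by Excel 2007+
  return 'unknown';
}
```

A file named *.xls that reports 'xlsx' here is a likely candidate for the BiffException above.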

UDataTable is a component provided by UE4 for reading and writing tabular data files. Its advantage is that you do not need to write file-handling logic yourself with C++ standard library APIs such as fopen and fclose, which avoids differences between platforms. Its disadvantage is that if a feature you need is not implemented by DataTable, you have to fall back on fopen and handle it yourself.

To read Excel data, export the sheet as a CSV file first. The *.xls format is not currently supported.

The official documents linked further down do not describe in detail how to define the row structure in C++ code.

If a DataTable is created from a blueprint, the row structure can use the structure component provided by UE4. To create it, choose Add New > Blueprints > Structure, then define the row fields in that structure.

If you create a DataTable in C++ code, you can create a new C++ class and choose to inherit from DataTable. In addition, a struct derived from FTableRowBase can be defined directly in the header file of the custom DataTable, for example:

#pragma once

#include "Engine/DataTable.h"

#include "CharactersDT.generated.h"

USTRUCT(BlueprintType)

struct FLevelUpData : public FTableRowBase

{

GENERATED_USTRUCT_BODY()

public:

FLevelUpData()

: XPtoLvl(0)

, AdditionalHP(0)

{}

/** The 'Name' column is the same as the XP Level */

/** XP to get to the given level from the previous level */

UPROPERTY(EditAnywhere, BlueprintReadWrite, Category = LevelUp)

int32 XPtoLvl;

/** Extra HitPoints gained at this level */

UPROPERTY(EditAnywhere, BlueprintReadWrite, Category = LevelUp)

int32 AdditionalHP;

/** Icon to use for Achievement */

UPROPERTY(EditAnywhere, BlueprintReadWrite, Category = LevelUp)

TAssetPtr<UTexture> AchievementIcon;

};

Using excel to store gameplay data - DataTables

https://wiki.unrealengine.com/Using_excel_to_store_gameplay_data_-_DataTables

Data Driven Gameplay Elements

https://docs.unrealengine.com/latest/INT/Gameplay/DataDriven/index.html

Driving Gameplay with Data from Excel

https://forums.unrealengine.com/showthread.php?12572-Driving-Gameplay-with-Data-from-Excel

Methods for manipulating DataTable with blueprints.

Unreal Engine, Datatables for Blueprints (build & Use)

Excel to Unreal

https://www.youtube.com/watch?v=WLv67ddnzN0

How to load *.CSV dynamically with C++ code

If you have only a few tables, the built-in DataTable asset is enough. If you have many tables that change frequently, every change has to be re-imported by hand, one table at a time, in the UE editor, so it is recommended to load the *.csv files dynamically in C++:

FString csvFile = FPaths::GameContentDir() + "Downloads\\DownloadedFile.csv";

if (FPaths::FileExists(csvFile ))

{

FString FileContent;

//Read the csv file

FFileHelper::LoadFileToString(FileContent, *csvFile );

TArray<FString> problems = YourDataTable->CreateTableFromCSVString(FileContent);

if (problems.Num() > 0)

{

for (int32 ProbIdx = 0; ProbIdx < problems.Num(); ProbIdx++)

{

//Log the errors

}

}

else

{

//Updated Successfully

}

}