[root@k8smain01 ~]# kubectl exec -it deploy-nginx-8458f6dbbb-vc2jd -- bash

error: unable to upgrade connection: pod does not exist

[root@k8smain01 ~]# kubectl logs nginx-test

Error from server (NotFound): the server could not find the requested resource ( pods/log nginx-test)

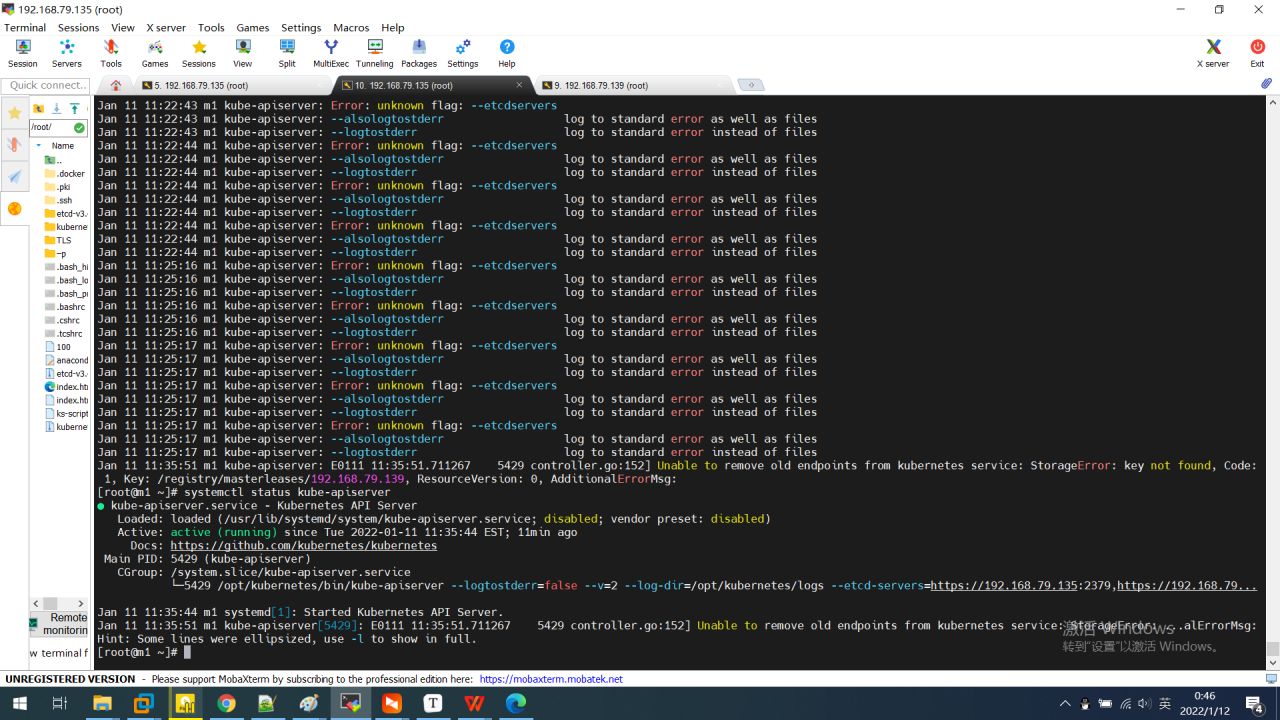

Cause: the virtual machine has two network cards, and the kubelet registered the node with the address of the wrong one.

# Add -v=9 to the command to raise the log verbosity

[root@k8smain01 ~]# kubectl logs nginx-test -v=9

I1022 16:45:22.676030 418422 loader.go:372] Config loaded from file: /etc/kubernetes/admin.conf

I1022 16:45:22.684784 418422 round_trippers.go:435] curl -v -XGET -H "Accept: application/json, */*" -H "User-Agent: kubectl/v1.22.1 (linux/amd64) kubernetes/632ed30" 'https://192.168.56.108:6443/api/v1/namespaces/default/pods/nginx-test'

I1022 16:45:22.701372 418422 round_trippers.go:454] GET https://192.168.56.108:6443/api/v1/namespaces/default/pods/nginx-test 200 OK in 16 milliseconds

I1022 16:45:22.701423 418422 round_trippers.go:460] Response Headers:

I1022 16:45:22.701448 418422 round_trippers.go:463] Audit-Id: 3617bb1d-11dd-49ca-8e9d-fc2e048e9db1

I1022 16:45:22.701459 418422 round_trippers.go:463] Cache-Control: no-cache, private

I1022 16:45:22.701472 418422 round_trippers.go:463] Content-Type: application/json

I1022 16:45:22.701480 418422 round_trippers.go:463] X-Kubernetes-Pf-Flowschema-Uid: b85220fe-e7c3-47df-a746-6624f4a44353

I1022 16:45:22.701486 418422 round_trippers.go:463] X-Kubernetes-Pf-Prioritylevel-Uid: 46ccf519-799d-40e5-8bdf-1a4250479d75

I1022 16:45:22.701493 418422 round_trippers.go:463] Date: Fri, 22 Oct 2021 08:45:22 GMT

I1022 16:45:22.702103 418422 request.go:1181] Response Body: {"kind":"Pod","apiVersion":"v1","metadata":{"name":"nginx-test","namespace":"default","uid":"ab3ccffc-f477-4556-8db8-54fc2a2f3632","resourceVersion":"10005","creationTimestamp":"2021-10-21T11:11:40Z","labels":{"run":"nginx-test"},"annotations":{"cni.projectcalico.org/containerID":"c136174460e3d55deb4ef60e14e5f1af000ee6ea500a03e2e96f517fce22da4f","cni.projectcalico.org/podIP":"10.50.249.65/32","cni.projectcalico.org/podIPs":"10.50.249.65/32"},"managedFields":[{"manager":"kubectl-run","operation":"Update","apiVersion":"v1","time":"2021-10-21T11:11:40Z","fieldsType":"FieldsV1","fieldsV1":{"f:metadata":{"f:labels":{".":{},"f:run":{}}},"f:spec":{"f:containers":{"k:{\"name\":\"nginx-test\"}":{".":{},"f:image":{},"f:imagePullPolicy":{},"f:name":{},"f:ports":{".":{},"k:{\"containerPort\":80,\"protocol\":\"TCP\"}":{".":{},"f:containerPort":{},"f:protocol":{}}},"f:resources":{},"f:terminationMessagePath":{},"f:terminationMessagePolicy":{}}},"f:dnsPolicy":{},"f:enableServiceLinks":{},"f:restartPolicy":{},"f:schedulerName":{},"f:securityContext":{},"f:terminationGracePeriodSeconds":{}}}},{"manager":"calico","operation":"Update","apiVersion":"v1","time":"2021-10-21T11:11:41Z","fieldsType":"FieldsV1","fieldsV1":{"f:metadata":{"f:annotations":{".":{},"f:cni.projectcalico.org/containerID":{},"f:cni.projectcalico.org/podIP":{},"f:cni.projectcalico.org/podIPs":{}}}},"subresource":"status"},{"manager":"kubelet","operation":"Update","apiVersion":"v1","time":"2021-10-21T11:50:42Z","fieldsType":"FieldsV1","fieldsV1":{"f:status":{"f:conditions":{"k:{\"type\":\"ContainersReady\"}":{".":{},"f:lastProbeTime":{},"f:lastTransitionTime":{},"f:status":{},"f:type":{}},"k:{\"type\":\"Initialized\"}":{".":{},"f:lastProbeTime":{},"f:lastTransitionTime":{},"f:status":{},"f:type":{}},"k:{\"type\":\"Ready\"}":{".":{},"f:lastProbeTime":{},"f:lastTransitionTime":{},"f:status":{},"f:type":{}}},"f:containerStatuses":{},"f:hostIP":{},"f:phase":{},
"f:podIP":{},"f:podIPs":{".":{},"k:{\"ip\":\"10.50.249.65\"}":{".":{},"f:ip":{}}},"f:startTime":{}}},"subresource":"status"}]},"spec":{"volumes":[{"name":"kube-api-access-4kv5q","projected":{"sources":[{"serviceAccountToken":{"expirationSeconds":3607,"path":"token"}},{"configMap":{"name":"kube-root-ca.crt","items":[{"key":"ca.crt","path":"ca.crt"}]}},{"downwardAPI":{"items":[{"path":"namespace","fieldRef":{"apiVersion":"v1","fieldPath":"metadata.namespace"}}]}}],"defaultMode":420}}],"containers":[{"name":"nginx-test","image":"nginx","ports":[{"containerPort":80,"protocol":"TCP"}],"resources":{},"volumeMounts":[{"name":"kube-api-access-4kv5q","readOnly":true,"mountPath":"/var/run/secrets/kubernetes.io/serviceaccount"}],"terminationMessagePath":"/dev/termination-log","terminationMessagePolicy":"File","imagePullPolicy":"Always"}],"restartPolicy":"Always","terminationGracePeriodSeconds":30,"dnsPolicy":"ClusterFirst","serviceAccountName":"default","serviceAccount":"default","nodeName":"k8snode01","securityContext":{},"schedulerName":"default-scheduler","tolerations":[{"key":"node.kubernetes.io/not-ready","operator":"Exists","effect":"NoExecute","tolerationSeconds":300},{"key":"node.kubernetes.io/unreachable","operator":"Exists","effect":"NoExecute","tolerationSeconds":300}],"priority":0,"enableServiceLinks":true,"preemptionPolicy":"PreemptLowerPriority"},"status":{"phase":"Running","conditions":[{"type":"Initialized","status":"True","lastProbeTime":null,"lastTransitionTime":"2021-10-21T11:11:40Z"},{"type":"Ready","status":"True","lastProbeTime":null,"lastTransitionTime":"2021-10-21T11:50:42Z"},{"type":"ContainersReady","status":"True","lastProbeTime":null,"lastTransitionTime":"2021-10-21T11:50:42Z"},{"type":"PodScheduled","status":"True","lastProbeTime":null,"lastTransitionTime":"2021-10-21T11:11:40Z"}],"hostIP":"10.0.2.15","podIP":"10.50.249.65"

The output shows "hostIP":"10.0.2.15", which is not the address the virtual machines use to reach each other (192.168.56.x). The API server connects to the kubelet at this address to serve exec and logs requests, so those requests fail.
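The same check can be scripted instead of reading the response body by eye. This is only a minimal sketch: `extract_host_ip` and `classify_host_ip` are hypothetical helper names, and the `192.168.56.` prefix is an assumption taken from the addresses in the log above.

```shell
# Hypothetical helper: print the value of "hostIP" found in a pod's JSON text.
# Works on both compact and pretty-printed JSON (optional space after the colon).
extract_host_ip() {
  printf '%s\n' "$1" | sed -n 's/.*"hostIP": *"\([^"]*\)".*/\1/p'
}

# Hypothetical helper: report whether an address is on the network the
# virtual machines use to reach each other (assumed prefix: 192.168.56.).
classify_host_ip() {
  case "$1" in
    192.168.56.*) echo "ok" ;;
    *)            echo "wrong-nic" ;;
  esac
}
```

Against a live cluster, `kubectl get pod nginx-test -o jsonpath='{.status.hostIP}'` prints the same field directly, and its output can be passed to `classify_host_ip`.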

Solution:

# CentOS: set --node-ip to this node's own 192.168.56.x address

vi /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS=--node-ip=192.168.56.xxx

systemctl restart kubelet
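The edit to /etc/sysconfig/kubelet can also be scripted so that it is repeatable across nodes. The following is a rough sketch, not a definitive procedure: `set_node_ip` is a hypothetical helper, and it assumes that any existing KUBELET_EXTRA_ARGS line in the file can be discarded.

```shell
# Hypothetical helper: write KUBELET_EXTRA_ARGS=--node-ip=<ip> into <file>.
# Assumption: an existing KUBELET_EXTRA_ARGS line holds nothing worth keeping.
set_node_ip() {
  file=$1
  ip=$2
  touch "$file"
  # Keep every line except an old KUBELET_EXTRA_ARGS assignment.
  # grep exits non-zero when nothing is left to print, hence "|| true".
  grep -v '^KUBELET_EXTRA_ARGS=' "$file" > "$file.tmp" || true
  printf 'KUBELET_EXTRA_ARGS=--node-ip=%s\n' "$ip" >> "$file.tmp"
  # Write back in place so the original file keeps its owner and mode.
  cat "$file.tmp" > "$file"
  rm -f "$file.tmp"
}
```

On a node it would be called as `set_node_ip /etc/sysconfig/kubelet 192.168.56.xxx`, with that node's own address in place of the placeholder, followed by `systemctl restart kubelet`.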

# Ubuntu: add the Environment line before ExecStart

vi /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

Environment="KUBELET_EXTRA_ARGS=--node-ip=192.168.56.xxx"

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS

systemctl daemon-reload

systemctl restart kubelet
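Once the kubelet has restarted, every node should report an internal address on the 192.168.56.x network; `kubectl get nodes -o wide` shows it in the INTERNAL-IP column. As a small sketch for checking this in a script, the hypothetical helper `nodes_on_wrong_nic` below reads lines of the form "name, whitespace, internal IP" on standard input and prints the nodes whose address does not start with the given prefix.

```shell
# Hypothetical helper: print the names of nodes whose internal IP does not
# start with the expected prefix. Input: one "<name> <internal-ip>" per line.
nodes_on_wrong_nic() {
  awk -v prefix="$1" 'NF >= 2 && index($2, prefix) != 1 { print $1 }'
}

# Usage against a live cluster (not run here):
#   kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.addresses[?(@.type=="InternalIP")].address}{"\n"}{end}' \
#     | nodes_on_wrong_nic 192.168.56.
```

Empty output means all nodes are registered on the expected network.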