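First, configure the `capacity-scheduler.xml` file in Hadoop's configuration directory. In a standard installation this is `etc/hadoop/` under the Hadoop installation directory.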

```xml
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<configuration>

  <!-- Maximum number of applications (pending plus running) the scheduler will hold -->
  <property>
    <name>yarn.scheduler.capacity.maximum-applications</name>
    <value>10000</value>
    <description>
      Maximum number of applications that can be pending and running.
    </description>
  </property>

  <!-- Maximum fraction of resources that ApplicationMasters (such as MRAppMaster) may use;
       each queue's limit is proportional to its capacity. Because every running job
       needs an AM, this indirectly limits how many jobs can run at the same time. -->
  <property>
    <name>yarn.scheduler.capacity.maximum-am-resource-percent</name>
    <value>0.1</value>
    <description>
      Maximum percent of resources in the cluster which can be used to run
      application masters i.e. controls number of concurrent running
      applications.
    </description>
  </property>

  <!-- How resources are compared when allocating them to applications -->
  <property>
    <name>yarn.scheduler.capacity.resource-calculator</name>
    <value>org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator</value>
    <description>
      The ResourceCalculator implementation to be used to compare
      Resources in the scheduler.
      The default i.e. DefaultResourceCalculator only uses Memory while
      DominantResourceCalculator uses dominant-resource to compare
      multi-dimensional resources such as Memory, CPU etc.
    </description>
  </property>

  <!-- Child queues of the root queue: the new queues a and b are added next to default -->
  <property>
    <name>yarn.scheduler.capacity.root.queues</name>
    <value>default,a,b</value>
    <description>
      The queues at this level (root is the root queue).
    </description>
  </property>

  <!-- Percentage of the parent (root) queue guaranteed to each child queue.
       The capacities of all child queues of the same parent must add up to 100
       (here 40 + 30 + 30). -->
  <property>
    <name>yarn.scheduler.capacity.root.default.capacity</name>
    <value>40</value>
    <description>Default queue target capacity.</description>
  </property>
  <property>
    <name>yarn.scheduler.capacity.root.a.capacity</name>
    <value>30</value>
    <description>Queue a target capacity.</description>
  </property>
  <property>
    <name>yarn.scheduler.capacity.root.b.capacity</name>
    <value>30</value>
    <description>Queue b target capacity.</description>
  </property>

  <!-- Multiple of the queue's capacity that a single user may consume;
       1 means one user can never take more than the queue's configured capacity -->
  <property>
    <name>yarn.scheduler.capacity.root.default.user-limit-factor</name>
    <value>1</value>
    <description>
      User limit factor of the default queue.
    </description>
  </property>
  <property>
    <name>yarn.scheduler.capacity.root.a.user-limit-factor</name>
    <value>1</value>
    <description>
      User limit factor of queue a.
    </description>
  </property>
  <property>
    <name>yarn.scheduler.capacity.root.b.user-limit-factor</name>
    <value>1</value>
    <description>
      User limit factor of queue b.
    </description>
  </property>

  <!-- Upper limit (percent of the parent) each queue can grow to when other queues are idle -->
  <property>
    <name>yarn.scheduler.capacity.root.default.maximum-capacity</name>
    <value>100</value>
    <description>
      The maximum capacity of the default queue.
    </description>
  </property>
  <property>
    <name>yarn.scheduler.capacity.root.a.maximum-capacity</name>
    <value>100</value>
    <description>
      The maximum capacity of queue a.
    </description>
  </property>
  <property>
    <name>yarn.scheduler.capacity.root.b.maximum-capacity</name>
    <value>100</value>
    <description>
      The maximum capacity of queue b.
    </description>
  </property>

  <!-- State of each queue (RUNNING or STOPPED) -->
  <property>
    <name>yarn.scheduler.capacity.root.default.state</name>
    <value>RUNNING</value>
    <description>
      The state of the default queue. State can be one of RUNNING or STOPPED.
    </description>
  </property>
  <property>
    <name>yarn.scheduler.capacity.root.a.state</name>
    <value>RUNNING</value>
    <description>
      The state of queue a. State can be one of RUNNING or STOPPED.
    </description>
  </property>
  <property>
    <name>yarn.scheduler.capacity.root.b.state</name>
    <value>RUNNING</value>
    <description>
      The state of queue b. State can be one of RUNNING or STOPPED.
    </description>
  </property>

  <!-- Who may submit applications to each queue (* means everyone) -->
  <property>
    <name>yarn.scheduler.capacity.root.default.acl_submit_applications</name>
    <value>*</value>
    <description>
      The ACL of who can submit jobs to the default queue.
    </description>
  </property>
  <property>
    <name>yarn.scheduler.capacity.root.a.acl_submit_applications</name>
    <value>*</value>
    <description>
      The ACL of who can submit jobs to queue a.
    </description>
  </property>
  <property>
    <name>yarn.scheduler.capacity.root.b.acl_submit_applications</name>
    <value>*</value>
    <description>
      The ACL of who can submit jobs to queue b.
    </description>
  </property>

  <!-- Who may administer each queue (* means everyone) -->
  <property>
    <name>yarn.scheduler.capacity.root.default.acl_administer_queue</name>
    <value>*</value>
    <description>
      The ACL of who can administer jobs on the default queue.
    </description>
  </property>
  <property>
    <name>yarn.scheduler.capacity.root.a.acl_administer_queue</name>
    <value>*</value>
    <description>
      The ACL of who can administer jobs on queue a.
    </description>
  </property>
  <property>
    <name>yarn.scheduler.capacity.root.b.acl_administer_queue</name>
    <value>*</value>
    <description>
      The ACL of who can administer jobs on queue b.
    </description>
  </property>

  <property>
    <name>yarn.scheduler.capacity.node-locality-delay</name>
    <value>40</value>
    <description>
      Number of missed scheduling opportunities after which the CapacityScheduler
      attempts to schedule rack-local containers.
      Typically this should be set to the number of nodes in the cluster. By default
      it is set to approximately the number of nodes in one rack, which is 40.
    </description>
  </property>

  <property>
    <name>yarn.scheduler.capacity.queue-mappings</name>
    <value></value>
    <description>
      A list of mappings that will be used to assign jobs to queues.
      The syntax for this list is [u|g]:[name]:[queue_name][,next mapping]*
      Typically this list will be used to map users to queues,
      for example, u:%user:%user maps all users to queues with the same name
      as the user.
    </description>
  </property>

  <property>
    <name>yarn.scheduler.capacity.queue-mappings-override.enable</name>
    <value>false</value>
    <description>
      If a queue mapping is present, will it override the value specified
      by the user? This can be used by administrators to place jobs in queues
      that are different than the one specified by the user.
      The default is false.
    </description>
  </property>

</configuration>
```

After saving the configuration, run the refresh command so the ResourceManager reloads the queues without a restart:

```shell
yarn rmadmin -refreshQueues
```

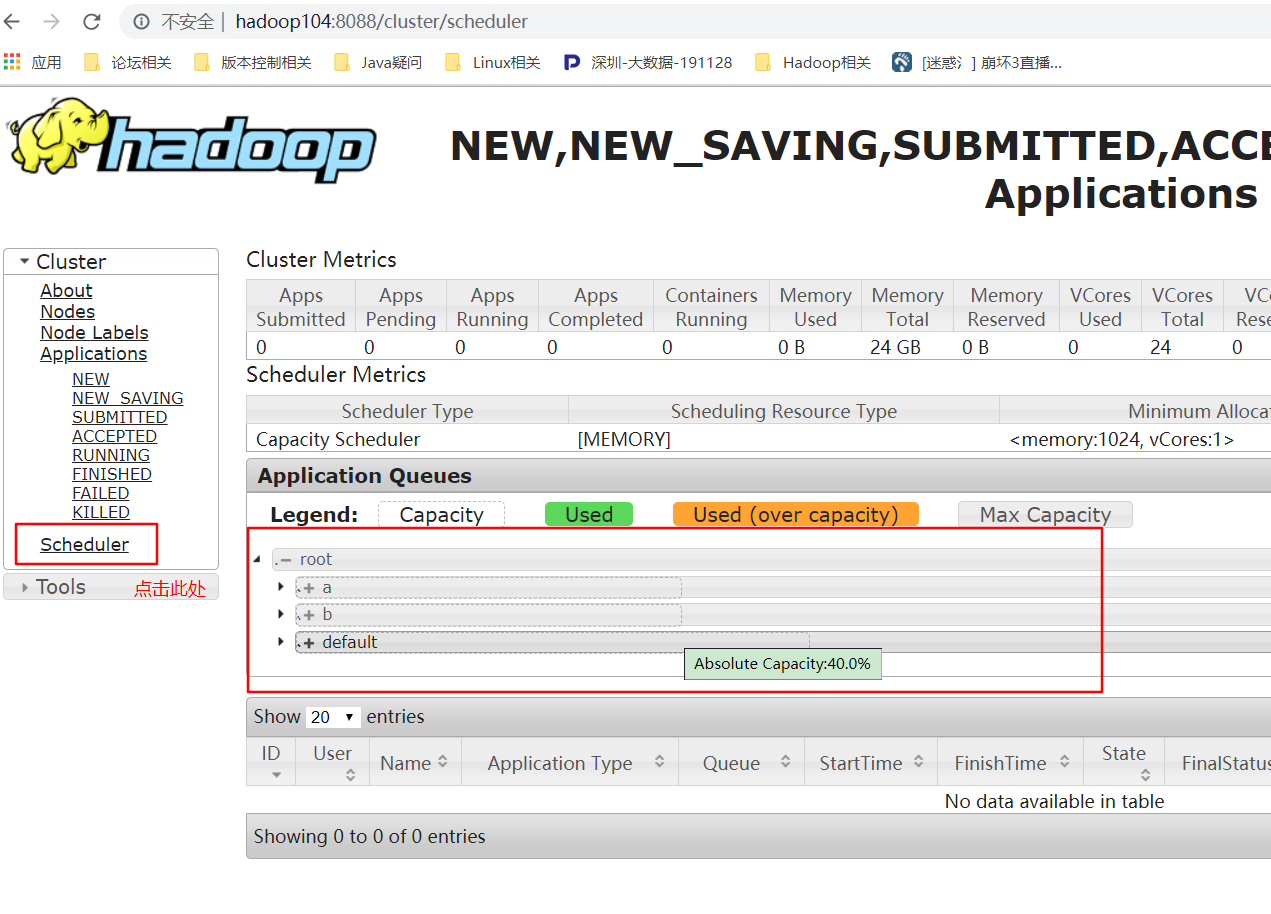
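If `-refreshQueues` reports an error, a common cause is that the capacities of sibling queues do not add up to 100, which the CapacityScheduler refuses to load. The following Java sketch is a quick pre-check under that assumption: it reads a `capacity-scheduler.xml` with the JDK's built-in XML parser, finds every `yarn.scheduler.capacity.<parent>.queues` property and sums the `capacity` values of the listed children. The class and method names are hypothetical; this is not part of Hadoop.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.Map;
import java.util.TreeMap;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;
import org.xml.sax.SAXException;

// Hypothetical helper: checks that child queue capacities add up to 100.
final class CapacityCheck {
    private static final String PREFIX = "yarn.scheduler.capacity.";
    private static final String SUFFIX = ".queues";

    // Collects every <property><name>..</name><value>..</value></property> pair.
    static Map<String, String> parse(String xml) {
        try {
            NodeList properties = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)))
                    .getElementsByTagName("property");
            Map<String, String> props = new TreeMap<>();
            for (int i = 0; i < properties.getLength(); i++) {
                Element property = (Element) properties.item(i);
                Node name = property.getElementsByTagName("name").item(0);
                Node value = property.getElementsByTagName("value").item(0);
                if (name != null && value != null) {
                    props.put(name.getTextContent().trim(), value.getTextContent().trim());
                }
            }
            return props;
        } catch (ParserConfigurationException | SAXException | IOException e) {
            throw new IllegalArgumentException("cannot parse scheduler XML", e);
        }
    }

    // For each parent queue path (e.g. "root"), sums the capacity of its children.
    static Map<String, Double> childCapacitySums(Map<String, String> props) {
        Map<String, Double> sums = new TreeMap<>();
        for (Map.Entry<String, String> e : props.entrySet()) {
            String key = e.getKey();
            if (!key.startsWith(PREFIX) || !key.endsWith(SUFFIX)
                    || key.length() <= PREFIX.length() + SUFFIX.length()) {
                continue; // not a "<parent>.queues" property
            }
            String parent = key.substring(PREFIX.length(), key.length() - SUFFIX.length());
            double sum = 0;
            for (String child : e.getValue().split(",")) {
                String capacity = props.get(PREFIX + parent + "." + child.trim() + ".capacity");
                sum += capacity == null ? 0 : Double.parseDouble(capacity);
            }
            sums.put(parent, sum);
        }
        return sums;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: java CapacityCheck capacity-scheduler.xml");
            return;
        }
        String xml = new String(Files.readAllBytes(Paths.get(args[0])), StandardCharsets.UTF_8);
        for (Map.Entry<String, Double> e : childCapacitySums(parse(xml)).entrySet()) {
            boolean ok = Math.abs(e.getValue() - 100.0) < 1e-6;
            System.out.printf("%s: children sum to %.1f%s%n",
                    e.getKey(), e.getValue(), ok ? "" : "  <-- should be 100");
        }
    }
}
```

With the configuration above, the only parent queue is `root`, and its children (40 + 30 + 30) should be reported as summing to 100.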

Then open the cluster's YARN web UI (the ResourceManager web page, on port 8088 by default). You should now see three queues: default, a and b.
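To see what the 40/30/30 split means in practice, here is a small worked example. It assumes a hypothetical cluster with 100 GB of memory in total (DefaultResourceCalculator only compares memory) and ignores rounding to the minimum container size. It also shows an easy-to-miss effect of `user-limit-factor = 1`: a single user can never take more than the queue's configured capacity, even though `maximum-capacity` is 100. The single-user ceiling below is a rough approximation, not the scheduler's exact formula.

```java
// Worked example (hypothetical cluster size): what the capacity settings
// mean in MB for the three child queues of root.
// Ignores rounding to the minimum allocation, preemption and AM limits.
final class QueueShares {
    // Memory in MB that corresponds to a percentage of the cluster
    // (valid for direct children of root).
    static long shareMb(long clusterMb, double percent) {
        return Math.round(clusterMb * percent / 100.0);
    }

    // Rough ceiling for a single user: min(capacity * user-limit-factor, maximum-capacity).
    static long singleUserCeilingMb(long clusterMb, double capacity,
                                    double userLimitFactor, double maxCapacity) {
        return shareMb(clusterMb, Math.min(capacity * userLimitFactor, maxCapacity));
    }

    public static void main(String[] args) {
        long clusterMb = 100L * 1024;              // assumption: 100 GB of NodeManager memory
        String[] queues = {"default", "a", "b"};
        double[] capacity = {40, 30, 30};          // root.<queue>.capacity
        double[] maxCapacity = {100, 100, 100};    // root.<queue>.maximum-capacity
        double userLimitFactor = 1;                // root.<queue>.user-limit-factor
        for (int i = 0; i < queues.length; i++) {
            System.out.printf("%-7s guaranteed %6d MB, queue max %6d MB, single user max %6d MB%n",
                    queues[i],
                    shareMb(clusterMb, capacity[i]),
                    shareMb(clusterMb, maxCapacity[i]),
                    singleUserCeilingMb(clusterMb, capacity[i], userLimitFactor, maxCapacity[i]));
        }
    }
}
```

For queue a this gives 30720 MB guaranteed and up to 102400 MB for the queue as a whole, but only about 30720 MB for any single user. Raising `user-limit-factor` (for example to 2) would let one user's jobs use more of an otherwise idle cluster.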

The next step is to make a job run in a queue other than default.

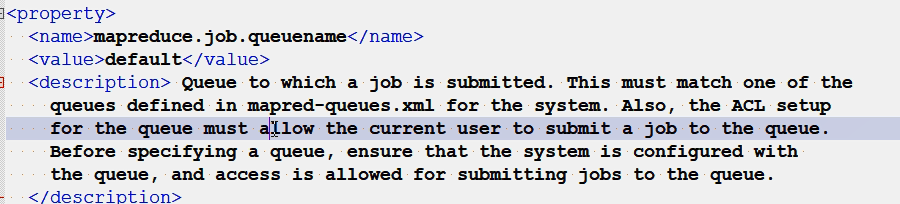

Which queue a job runs in is decided by the `mapreduce.job.queuename` property, whose default value in `mapred-default.xml` is `default`.

So this is the setting you need to change:

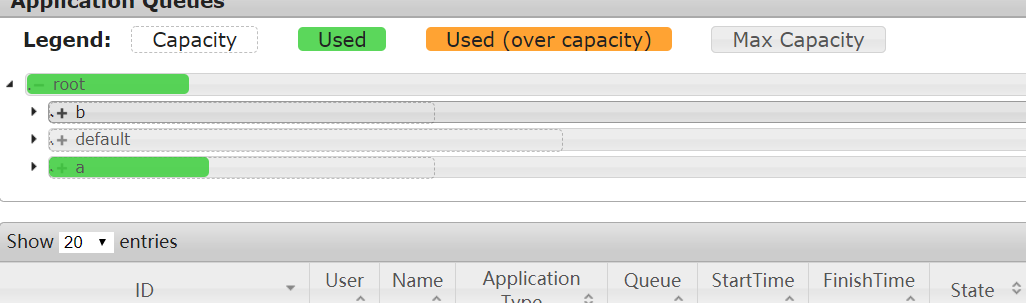

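1. If you submit the job from IntelliJ IDEA (that is, from your own driver code), set the property on the job's `Configuration`:

```java
conf.set("mapred.job.queue.name", "a");
```

This makes the job run in queue a. `mapred.job.queue.name` is the deprecated name of `mapreduce.job.queuename`, and Hadoop 2.x accepts either. Set it before calling `Job.getInstance(conf)`, because the job takes its own copy of the configuration.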

2. If you run the jar on Linux, pass the property as a `-D` option before the input and output paths:

```shell
hadoop jar hadoop-mapreduce-examples-2.7.2.jar wordcount -D mapreduce.job.queuename=a /mapjoin /output3
```
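`-D mapreduce.job.queuename=a` works because the wordcount example parses Hadoop's generic options before its own arguments, and parsing stops at the first non-option argument. The sketch below imitates that split in plain Java. It is a hypothetical, simplified model, not Hadoop's `GenericOptionsParser`. Leading `-D key=value` (or `-Dkey=value`) pairs go into a configuration map, everything from the first non-option argument on is passed through, and a missing queue name falls back to `default`, as in `mapred-default.xml`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: separates leading "-D key=value" options from job arguments.
final class DOptionSketch {
    // Consumes leading -D options into conf and returns the remaining arguments.
    static List<String> parse(String[] args, Map<String, String> conf) {
        int i = 0;
        while (i < args.length && args[i].startsWith("-D")) {
            String pair;
            if (args[i].equals("-D")) {            // "-D key=value" form
                if (i + 1 >= args.length) {
                    throw new IllegalArgumentException("-D needs key=value");
                }
                pair = args[i + 1];
                i += 2;
            } else {                               // "-Dkey=value" form
                pair = args[i].substring(2);
                i += 1;
            }
            String[] kv = pair.split("=", 2);
            conf.put(kv[0], kv.length == 2 ? kv[1] : "");
        }
        // Everything from the first non-option argument on belongs to the job.
        return new ArrayList<>(Arrays.asList(args).subList(i, args.length));
    }

    // Queue used for the job; "default" mirrors the value in mapred-default.xml.
    static String queueName(Map<String, String> conf) {
        return conf.getOrDefault("mapreduce.job.queuename", "default");
    }

    public static void main(String[] args) {
        Map<String, String> conf = new HashMap<>();
        List<String> rest = parse(
                new String[] {"-D", "mapreduce.job.queuename=a", "/mapjoin", "/output3"}, conf);
        System.out.println(queueName(conf) + " " + rest); // a [/mapjoin, /output3]
    }
}
```

Placing `-D ...` after the paths would leave it among the job's own arguments (for wordcount, extra input paths) instead of setting the queue.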

As shown in the figure, the job now runs in queue a.
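Instead of choosing the queue per job, an administrator can also route jobs with the `queue-mappings` and `queue-mappings-override.enable` properties from the configuration above. The sketch below is a simplified model of the placement rule in the Hadoop 2.x CapacityScheduler, not its actual code. The first matching mapping wins, and it only replaces the queue the user asked for when that queue is `default` or when override is enabled. Class and method names are hypothetical, and `%primary_group` is not modelled.

```java
import java.util.Collections;
import java.util.List;

// Hypothetical, simplified model of queue-mappings + queue-mappings-override.enable.
final class QueuePlacement {
    // Returns the queue from the first matching mapping, or null if none matches.
    static String mappedQueue(String mappings, String user, List<String> groups) {
        if (mappings == null || mappings.trim().isEmpty()) {
            return null;
        }
        for (String entry : mappings.split(",")) {
            String[] parts = entry.trim().split(":");
            if (parts.length != 3) {
                throw new IllegalArgumentException("malformed mapping: " + entry);
            }
            String type = parts[0], source = parts[1], queue = parts[2];
            if (type.equals("u") && source.equals("%user")) {
                return queue.equals("%user") ? user : queue; // matches every user
            }
            if (type.equals("u") && source.equals(user)) {
                return queue;
            }
            if (type.equals("g") && groups.contains(source)) {
                return queue;
            }
        }
        return null;
    }

    // A mapping wins only if the job asked for "default" or override is enabled.
    static String placeJob(String requestedQueue, String mapped, boolean overrideEnabled) {
        if (mapped != null && (requestedQueue.equals("default") || overrideEnabled)) {
            return mapped;
        }
        return requestedQueue;
    }

    public static void main(String[] args) {
        List<String> groups = Collections.emptyList();
        String mapped = mappedQueue("u:%user:%user", "alice", groups);
        System.out.println(placeJob("default", mapped, false)); // alice
        System.out.println(placeJob("a", mapped, false));       // a
        System.out.println(placeJob("a", mapped, true));        // alice
    }
}
```

With the empty `queue-mappings` value used in this article no mapping matches, so the queue chosen with `mapreduce.job.queuename` is always kept. With a rule such as `u:%user:%user`, a queue named after each user would need to exist.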
