Solution

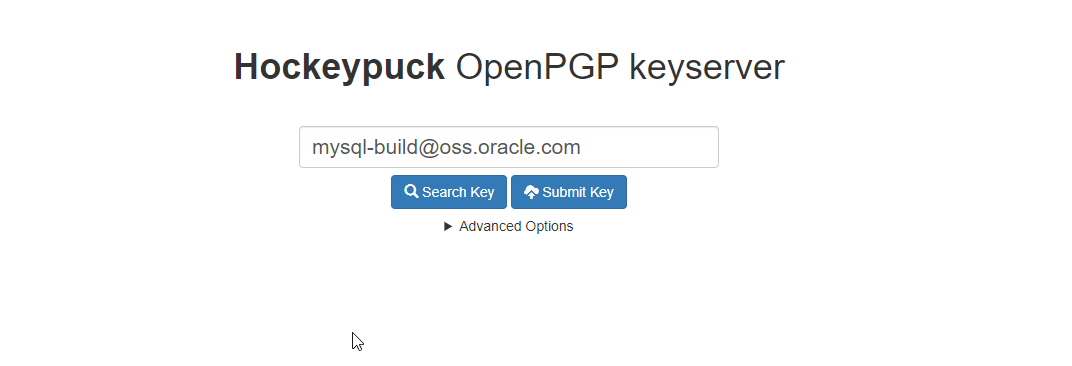

Go to the Ubuntu keyserver: http://keyserver.ubuntu.com/

Take the email address from the error message and enter it in the site's search box.

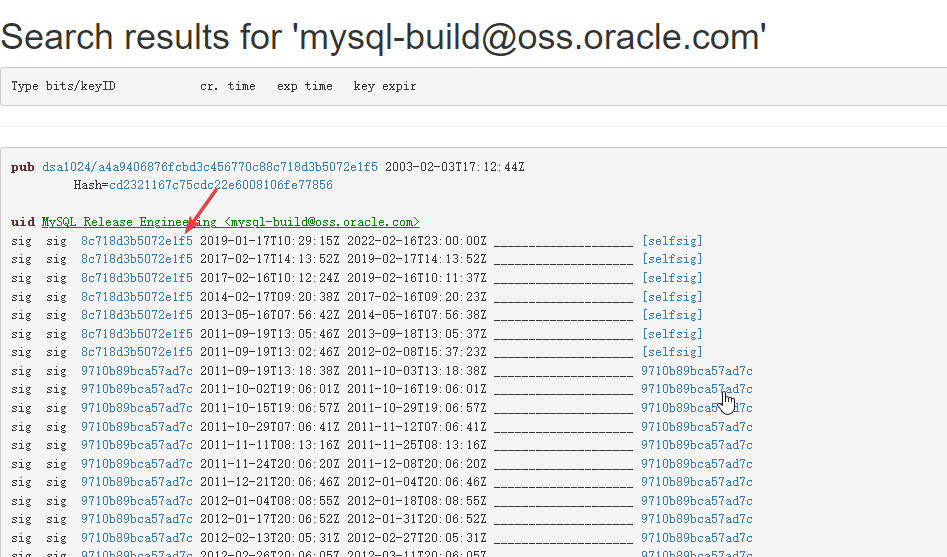

The search lists the matching keys; click the first one.

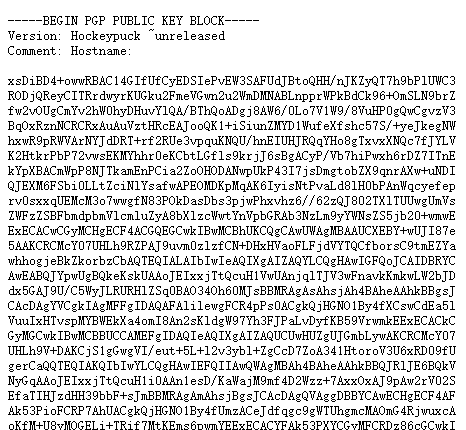

Clicking it opens the key itself: an ASCII-armored block that begins with `-----BEGIN PGP PUBLIC KEY BLOCK-----`.
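If you would rather not click through the web page, the same two lookups can be written as direct URLs. This is a sketch that assumes the keyserver's standard HKP lookup interface (`/pks/lookup` with `op=index` for a search and `op=get` for the key text) and HTTPS access; `search_url` and `get_url` are hypothetical helper names:

```shell
#!/bin/sh
# Assumption: keyserver.ubuntu.com serves the standard HKP /pks/lookup endpoint.
KEYSERVER="https://keyserver.ubuntu.com"

# URL of the search results page for an email address or key ID.
# '@' is percent-encoded so the address is safe inside the query string.
search_url() {
  q=$(printf '%s' "$1" | sed 's/@/%40/g')
  printf '%s/pks/lookup?op=index&search=%s\n' "$KEYSERVER" "$q"
}

# URL that returns the ASCII-armored key for a hex key ID
# (HKP expects the 0x prefix on key IDs).
get_url() {
  printf '%s/pks/lookup?op=get&search=0x%s\n' "$KEYSERVER" "$1"
}
```

For example, `curl -fsSL "$(get_url 8C718D3B5072E1F5)" > gpgKey` would save the key named in the MySQL error straight into the file used in the next step.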

Copy all of that text. On the Linux machine, create a text file with any name (`gpgKey` here), paste the content into it, and add it to apt's keyring:

```shell
touch gpgKey
vim gpgKey
sudo apt-key add gpgKey
```
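Before running `apt-key add`, it can help to check that the paste really produced a complete key block. A minimal sketch, assuming the file holds the ASCII-armored text copied from the keyserver page; `is_armored_pubkey` and `import_key_file` are hypothetical helper names:

```shell
#!/bin/sh
# Succeeds only if the file contains both the BEGIN and END armor lines
# of an OpenPGP public key block ('--' stops grep reading them as options).
is_armored_pubkey() {
  grep -q -- '-----BEGIN PGP PUBLIC KEY BLOCK-----' "$1" &&
    grep -q -- '-----END PGP PUBLIC KEY BLOCK-----' "$1"
}

# Imports the key file only if it passes the check above.
# Needs root via sudo; note apt-key is deprecated on newer Ubuntu releases.
import_key_file() {
  if is_armored_pubkey "$1"; then
    sudo apt-key add "$1"
  else
    echo "not an armored public key: $1" >&2
    return 1
  fi
}
```

Usage would be `import_key_file gpgKey` in place of the bare `sudo apt-key add gpgKey`.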

After that, `apt update` should run without the signature error.

How the error came up

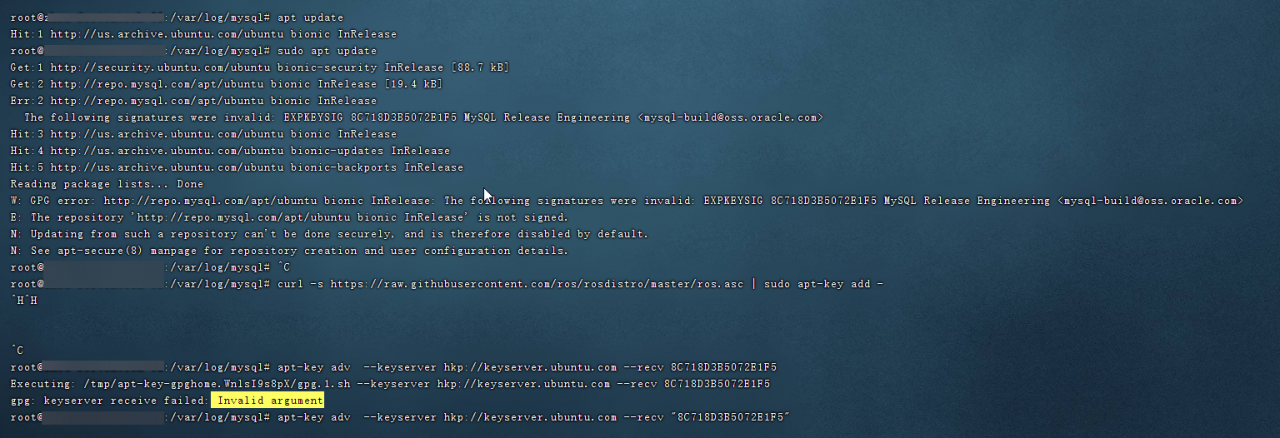

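Before installing MySQL, running `apt update` reported this error:

```
Err:2 http://repo.mysql.com/apt/ubuntu bionic InRelease
The following signatures were invalid: EXPKEYSIG 8C718D3B5072E1F5 MySQL Release Engineering <[email protected]>
```

`EXPKEYSIG` means the repository is signed with a key that has expired in the local keyring, so the fix is to import a refreshed copy of that key.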
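To pull the key ID out of that message instead of copying it by hand, a small filter is enough. This sketch assumes apt prints the `EXPKEYSIG <16-hex-digit ID>` form shown above; `expired_key_ids` is a hypothetical name:

```shell
#!/bin/sh
# Reads apt output on stdin and prints the 16-digit key ID from each
# line containing "EXPKEYSIG <ID>"; other lines are ignored.
expired_key_ids() {
  sed -n 's/.*EXPKEYSIG \([0-9A-Fa-f]\{16\}\).*/\1/p'
}
```

A typical call would be `sudo apt update 2>&1 | expired_key_ids | sort -u`, which lists each expired key once.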

A commonly suggested fix online is to re-fetch the key from the keyserver with `apt-key adv`:

```shell
apt-key adv --keyserver hkp://keyserver.ubuntu.com --recv yourKey
```

That still failed, with this error:

```
root@xx:/var/log/mysql# apt-key adv --keyserver hkp://keyserver.ubuntu.com --recv 8C718D3B5072E1F5
Executing: /tmp/apt-key-gpghome.WnlsI9s8pX/gpg.1.sh --keyserver hkp://keyserver.ubuntu.com --recv 8C718D3B5072E1F5
gpg: keyserver receive failed: Invalid argument
```
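One workaround often suggested for `keyserver receive failed` is to skip the hkp protocol (default port 11371, which some networks block) and download the key over HTTPS, piping it into `apt-key add -`. It is not certain that this cures this particular `Invalid argument` error, so treat it as a sketch; `is_long_keyid` and `fetch_and_import` are hypothetical names, and the lookup URL assumes the keyserver's standard HKP endpoint:

```shell
#!/bin/sh
# True only for a 16-character hexadecimal key ID such as 8C718D3B5072E1F5.
is_long_keyid() {
  case $1 in
    '' | *[!0-9A-Fa-f]*) return 1 ;;
  esac
  [ ${#1} -eq 16 ]
}

# Downloads the armored key over HTTPS and imports it from stdin.
# Requires curl, network access, and root via sudo.
fetch_and_import() {
  if ! is_long_keyid "$1"; then
    echo "expected a 16-digit hex key ID, got: $1" >&2
    return 1
  fi
  curl -fsSL "https://keyserver.ubuntu.com/pks/lookup?op=get&search=0x$1" |
    sudo apt-key add -
}
```

For this error the call would be `fetch_and_import 8C718D3B5072E1F5`, followed by `sudo apt update` to confirm the warning is gone.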