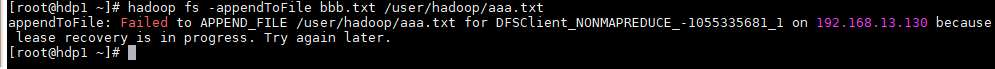

Error message: failed to replace a bad datanode on the existing pipeline due to no more good datanodes being available to try (Nodes: current=[DatanodeInfoWithStorage[192.168.13.130:50010,DS-d105d41c-49cc-48b9-8beb-28058c2a03f7,DISK]], original=[DatanodeInfoWithStorage[192.168.13.130:50010,DS-d105d41c-49cc-48b9-8beb-28058c2a03f7,DISK]]). The current failed datanode replacement policy is DEFAULT, and a client may configure this via ‘dfs. client. block. write. replace-datanode-on-failure. policy’ in its configuration

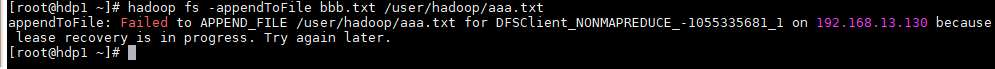

This error occurs because I append the local file to the txt file of HDFS. When I added the second time, the error message became

From the first error message, we can find dfs.client.block.write.replace-datanode-on-failure.policy.

So I went to check hadoop in etc/hdfs-site.xml. found that I did not define the number of copies, so in which to add. restart can be.

<property>

<name>dfs.client.block.write.replace-datanode-on-failure.policy</name>

<value>NEVER</value>

</property>

Analysis: By default, the number of copies is 3. When performing the write to HDFS operation, when one of my Datenodes fails to write, it has to keep the number of copies as 3, it will look for an available DateNode node to write, but there are only 3 on the pipeline, all resulting in the error Failed to replace a bad datanode on the existing pipeline due to no more good datanodes being available to try.

The following lines of code do not exist, then the number of copies default to 3. Refer to the official apache documentation that NEVER: never add a new datanode is equivalent to After setting to NEVER, no new DataNode will be added. Generally speaking, it is not recommended to turn on DataNode nodes less than or equal to 3 in a cluster.

<property>

<name>dfs.replication</name>

<value>3</value>

</property>