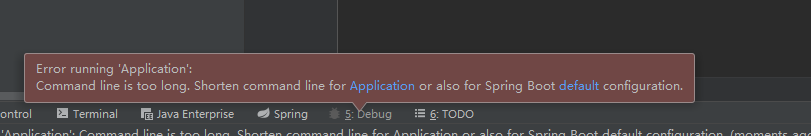

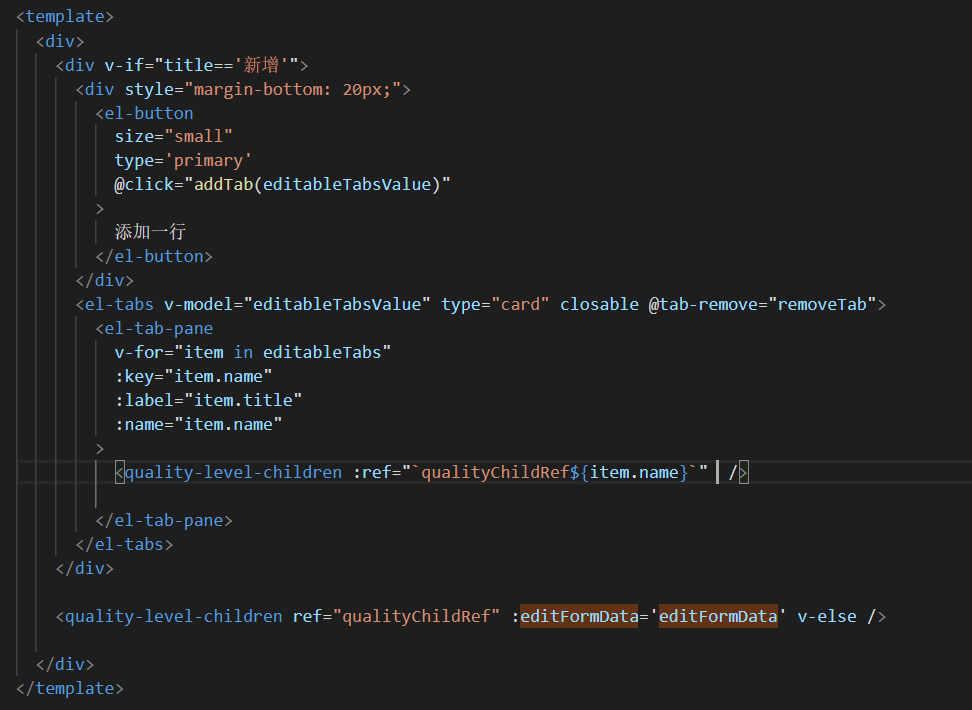

I wrapped some functionality in a component. At first it reported no errors, but after I added data it started to throw this error:

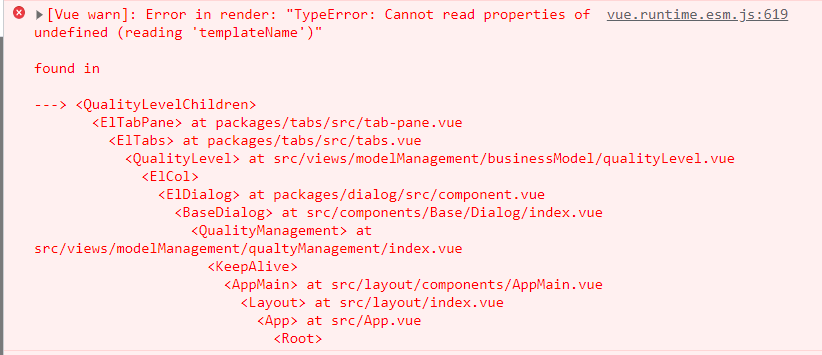

TypeError: Cannot read properties of undefined (reading 'templateName')

The error always appeared when I added a new item, but never when I edited an existing one. I assumed the cause was `el-tab-pane` and its dynamic component rendering, and I spent a long time debugging that. Later I found that this was not the problem at all.

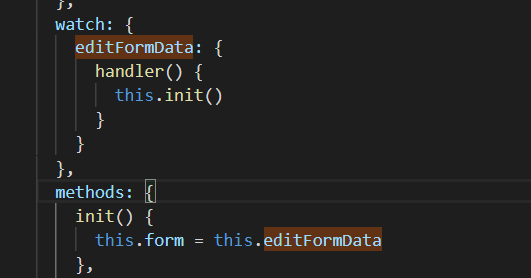

The real problem is that my child component uses data passed down from the parent component, but the parent does not pass this data when adding a new item. The child then reads `templateName` from `undefined`, which throws the error.

There are two ways to fix it: when adding, have the parent pass in an empty default value, or have the child check whether it is in add or edit mode before assigning the data.
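The original code only exists in the screenshots, so the following is a minimal TypeScript sketch of the same failure and the two fixes, not the actual component. The names `TemplateInfo`, `readNameUnsafe`, `readNameSafe`, and `buildChildProp` are hypothetical; only the `templateName` field comes from the error message.

```typescript
// Hypothetical shape of the data the child component receives from the parent.
interface TemplateInfo {
  templateName: string;
}

// Mirrors the failing child: it dereferences the prop without checking it.
// The non-null assertion (!) silences the compiler but not the runtime,
// so a missing prop still throws a TypeError.
function readNameUnsafe(info?: TemplateInfo): string {
  return info!.templateName;
}

// Fix in the child: guard against a missing prop and fall back to an empty string.
function readNameSafe(info?: TemplateInfo): string {
  return info?.templateName ?? "";
}

// Fix in the parent: always pass a value. In "add" mode there is no existing
// record, so an empty default is passed instead of nothing.
function buildChildProp(
  mode: "add" | "edit",
  existing?: TemplateInfo
): TemplateInfo {
  return mode === "edit" && existing ? existing : { templateName: "" };
}
```

Either fix is enough on its own. Guarding in the child is the more defensive choice, because the component then keeps working even if another parent forgets to pass the data.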