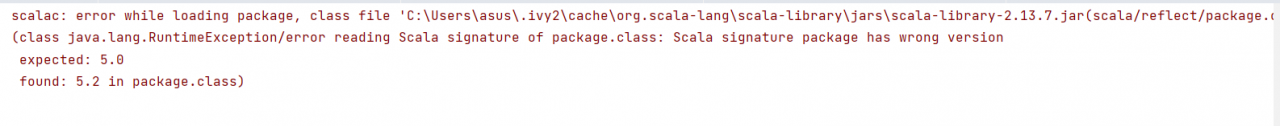

The following error occurred while setting up Spark with Scala:

scalac: error while loading package, class file 'C:\Users\asus\.ivy2\cache\org.scala-lang\scala-library\jars\scala-library-2.13.7.jar(scala/reflect/package.class)' is broken (class java.lang.RuntimeException/error reading Scala signature of package.class: Scala signature package has wrong version expected: 5.0 found: 5.2 in package.class)

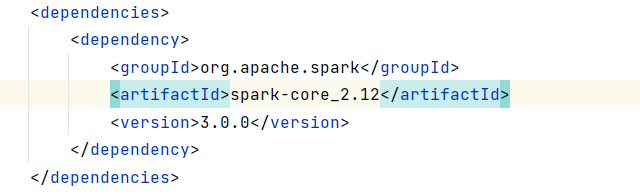

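The cause is a version conflict: the compiler expected Scala signature version 5.0, but scala-library-2.13.7.jar carries version 5.2. The Scala SDK version added in the IDE must be consistent with the Scala version declared in the POM file.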

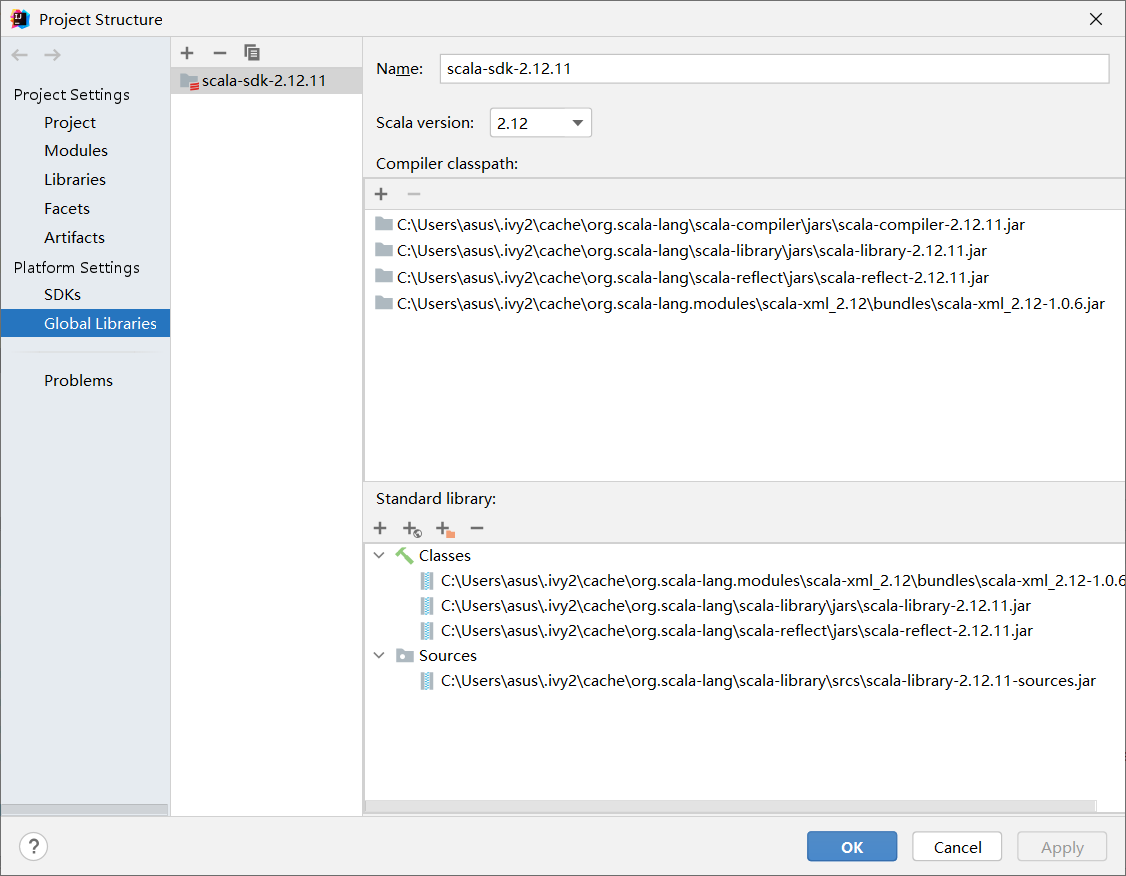

Open File >> Project Structure >> Global Libraries and add a Scala SDK whose version is consistent with the POM file.

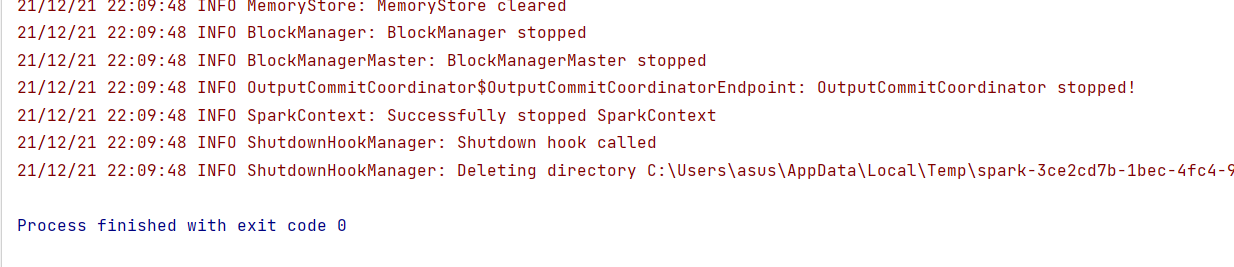

After this change, the program runs successfully.

For reference, the Spark version used is 3.0.
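
Once the project compiles again, a quick way to confirm that the SDK and the POM agree is to print the version of the scala-library actually on the classpath. The sketch below is only an illustration: the object name `ScalaVersionCheck` and the value of `expectedBinaryVersion` are assumptions, not taken from the original project, so replace the expected value with the Scala version declared in your own POM.

```scala
import scala.util.Properties

object ScalaVersionCheck {
  // Assumption: placeholder value, replace with the Scala binary version from your POM.
  val expectedBinaryVersion: String = "2.12"

  // Reduce a full version such as "2.12.15" to its binary version "2.12".
  def binaryVersion(full: String): String =
    full.split('.').take(2).mkString(".")

  def main(args: Array[String]): Unit = {
    // Version of the scala-library jar that is loaded at runtime.
    val actual = Properties.versionNumberString
    println(s"scala-library on the classpath: $actual")

    if (binaryVersion(actual) == expectedBinaryVersion)
      println("Scala version is consistent with the expected binary version")
    else
      println(s"Mismatch: expected Scala $expectedBinaryVersion.x, check the SDK and the POM")
  }
}
```

If the printed version differs from the one in the POM, the SDK selected under Global Libraries is probably still the wrong one.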
