Create an HBase external table in Hive by running the following create script:

hive> CREATE EXTERNAL TABLE hbase_userFace(id string, mobile string, name string)

> STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

> WITH SERDEPROPERTIES ("hbase.columns.mapping" = ":key,faces:mobile,faces:name")

> TBLPROPERTIES ("hbase.table.name" = "userFace");

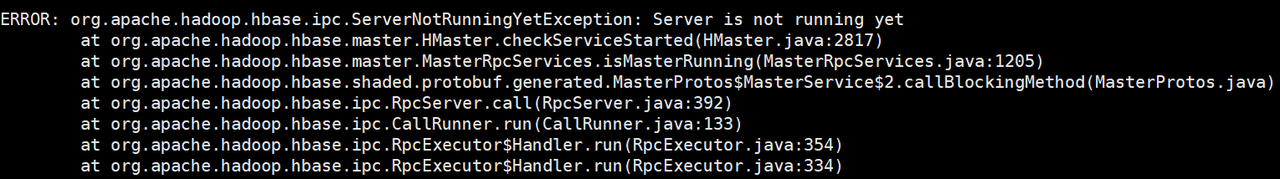

The error is as follows:

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaException(message:org.apache.hadoop.hbase.client.RetriesExhaustedException: Can't get the locations

at org.apache.hadoop.hbase.client.RpcRetryingCallerWithReadReplicas.getRegionLocations(RpcRetryingCallerWithReadReplicas.java:312)

at org.apache.hadoop.hbase.client.ScannerCallableWithReplicas.call(ScannerCallableWithReplicas.java:153)

at org.apache.hadoop.hbase.client.ScannerCallableWithReplicas.call(ScannerCallableWithReplicas.java:61)

at org.apache.hadoop.hbase.client.RpcRetryingCaller.callWithoutRetries(RpcRetryingCaller.java:200)

at org.apache.hadoop.hbase.client.ClientScanner.call(ClientScanner.java:320)

at org.apache.hadoop.hbase.client.ClientScanner.nextScanner(ClientScanner.java:295)

at org.apache.hadoop.hbase.client.ClientScanner.initializeScannerInConstruction(ClientScanner.java:160)

at org.apache.hadoop.hbase.client.ClientScanner.<init>(ClientScanner.java:155)

at org.apache.hadoop.hbase.client.HTable.getScanner(HTable.java:811)

at org.apache.hadoop.hbase.MetaTableAccessor.fullScan(MetaTableAccessor.java:602)

at org.apache.hadoop.hbase.MetaTableAccessor.tableExists(MetaTableAccessor.java:366)

at org.apache.hadoop.hbase.client.HBaseAdmin.tableExists(HBaseAdmin.java:303)

at org.apache.hadoop.hbase.client.HBaseAdmin.tableExists(HBaseAdmin.java:313)

at org.apache.hadoop.hive.hbase.HBaseStorageHandler.preCreateTable(HBaseStorageHandler.java:200)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:664)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:657)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:156)

at com.sun.proxy.$Proxy8.createTable(Unknown Source)

at org.apache.hadoop.hive.ql.metadata.Hive.createTable(Hive.java:714)

at org.apache.hadoop.hive.ql.exec.DDLTask.createTable(DDLTask.java:4135)

at org.apache.hadoop.hive.ql.exec.DDLTask.execute(DDLTask.java:306)

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:160)

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:88)

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:1653)

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1412)

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1195)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1059)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1049)

at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:213)

at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:165)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:376)

at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:736)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:681)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:621)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

)

The error most likely means that Hive cannot connect to HBase. HBase is coordinated through ZooKeeper, so test the connection as follows:

1. Test the connection to a single HBase node

$ hive -hiveconf hbase.master=master:60000

After entering the Hive CLI, run the script that creates the external table. The same error is still reported.

2. Test the connection to the HBase cluster

$ hive -hiveconf hbase.zookeeper.quorum=slave1,slave2,master,slave4,slave5,slave6,slave7

After entering the Hive CLI, run the script that creates the external table. This time the table is created successfully.
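Before changing any configuration, it can help to confirm that the ZooKeeper hosts in the quorum accept connections from the machine running Hive. The following is a minimal sketch, not part of the original troubleshooting: the helper names `parse_quorum`, `is_reachable`, and `check_quorum` are hypothetical, and port 2181 is assumed because it is ZooKeeper's default client port (your cluster may use a different one).

```python
import socket


def parse_quorum(quorum, default_port=2181):
    """Split a comma-separated quorum string into (host, port) pairs.

    Entries may be plain host names or host:port; 2181 is assumed
    when no port is given (ZooKeeper's default client port).
    """
    servers = []
    for entry in quorum.split(","):
        entry = entry.strip()
        if not entry:
            continue
        host, sep, port = entry.partition(":")
        servers.append((host, int(port) if sep else default_port))
    return servers


def is_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False


def check_quorum(quorum, timeout=3.0):
    """Map each 'host:port' in the quorum to True/False reachability."""
    return {
        f"{host}:{port}": is_reachable(host, port, timeout)
        for host, port in parse_quorum(quorum)
    }
```

Calling `check_quorum("slave1,slave2,master,slave4,slave5,slave6,slave7")` from the Hive host returns a dictionary of reachability flags. A `False` entry points to a network or ZooKeeper problem rather than a Hive configuration problem.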

This shows that the failure occurred when Hive tried to reach the ZooKeeper quorum used by HBase. The hive-site.xml file already contains a property named hive.zookeeper.quorum; copy that property and change the name of the copy to hbase.zookeeper.quorum, as follows:

<property>

<name>hbase.zookeeper.quorum</name>

<value>slave1,slave2,master,slave4,slave5,slave6,slave7</value>

<description>

</description>

</property>
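To confirm that the edit took effect, the property can be looked up programmatically. This is a small sketch under stated assumptions: the helper `get_property` and the `SAMPLE` document are hypothetical illustrations, and the sample only mimics the usual `<configuration>` layout of a hive-site.xml file with a shortened host list.

```python
import xml.etree.ElementTree as ET


def get_property(xml_text, name):
    """Return the value of the named <property>, or None if it is absent."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == name:
            return (prop.findtext("value") or "").strip()
    return None


# Hypothetical sample: a config that has only hive.zookeeper.quorum,
# which is the situation described before the fix.
SAMPLE = """<configuration>
  <property>
    <name>hive.zookeeper.quorum</name>
    <value>slave1,slave2,master</value>
  </property>
</configuration>"""
```

With this sample, `get_property(SAMPLE, "hbase.zookeeper.quorum")` returns `None`, which matches the failing setup. To check a real installation, read the contents of your own hive-site.xml into a string and pass it in; after the fix, the lookup should return the quorum host list.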

With this change, the problem is solved and the HBase external table is created successfully.