Elasticsearch startup errors: "maybe these locations are not writable or multiple nodes were started without increasing [node.max_local_storage_nodes]" and "Permission denied"

1. maybe these locations are not writable or multiple nodes were started without increasing [node.max_local_storage_nodes]

When using ELK, the server sometimes goes down unexpectedly, and afterwards es cannot be restarted. One common error is: maybe these locations are not writable or multiple nodes were started without increasing [node.max_local_storage_nodes] (was [1])

The specific error information is as follows:

[elk@master elasticsearch-7.2.1]$ ./bin/elasticsearch

OpenJDK 64-Bit Server VM warning: Option UseConcMarkSweepGC was deprecated in version 9.0 and will likely be removed in a future release.

[2021-08-13T11:02:10,661][WARN ][o.e.b.ElasticsearchUncaughtExceptionHandler] [master] uncaught exception in thread [main]

org.elasticsearch.bootstrap.StartupException: java.lang.IllegalStateException: failed to obtain node locks, tried [[/data/elasticsearch-7.2.1/data]] with lock id [0]; maybe these locations are not writable or multiple nodes were started without increasing [node.max_local_storage_nodes] (was [1])?

at org.elasticsearch.bootstrap.Elasticsearch.init(Elasticsearch.java:163) ~[elasticsearch-7.2.1.jar:7.2.1]

at org.elasticsearch.bootstrap.Elasticsearch.execute(Elasticsearch.java:150) ~[elasticsearch-7.2.1.jar:7.2.1]

at org.elasticsearch.cli.EnvironmentAwareCommand.execute(EnvironmentAwareCommand.java:86) ~[elasticsearch-7.2.1.jar:7.2.1]

at org.elasticsearch.cli.Command.mainWithoutErrorHandling(Command.java:124) ~[elasticsearch-cli-7.2.1.jar:7.2.1]

at org.elasticsearch.cli.Command.main(Command.java:90) ~[elasticsearch-cli-7.2.1.jar:7.2.1]

at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:115) ~[elasticsearch-7.2.1.jar:7.2.1]

at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:92) ~[elasticsearch-7.2.1.jar:7.2.1]

Caused by: java.lang.IllegalStateException: failed to obtain node locks, tried [[/data/elasticsearch-7.2.1/data]] with lock id [0]; maybe these locations are not writable or multiple nodes were started without increasing [node.max_local_storage_nodes] (was [1])?

at org.elasticsearch.env.NodeEnvironment.<init>(NodeEnvironment.java:298) ~[elasticsearch-7.2.1.jar:7.2.1]

at org.elasticsearch.node.Node.<init>(Node.java:271) ~[elasticsearch-7.2.1.jar:7.2.1]

at org.elasticsearch.node.Node.<init>(Node.java:251) ~[elasticsearch-7.2.1.jar:7.2.1]

at org.elasticsearch.bootstrap.Bootstrap$5.<init>(Bootstrap.java:221) ~[elasticsearch-7.2.1.jar:7.2.1]

This error appears when es was previously started as the root user and is now being started as a different user: the files that root left in the data directory cannot be locked by the new user. To fix it, delete the nodes directory inside the es data directory (here /data/elasticsearch-7.2.1/data), then start es as the non-root user.
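In es 7.x the nodes directory also holds the node's index data, so deleting it discards existing indices. Before deleting anything, it may be worth confirming which of the two causes named in the error message applies. The following is a minimal sketch, not an Elasticsearch tool: `diagnose_node_lock` is a hypothetical helper name, and it only checks writability and file ownership of the data directory for the current user.

```shell
#!/bin/sh
# Hypothetical helper (not part of Elasticsearch): print one line per
# reason why the current user may fail to lock DATA_DIR.
# Prints nothing when no problem is detected.
diagnose_node_lock() {
    data_dir=$1
    # Reason 1: the directory is missing or not writable by this user
    if [ ! -w "$data_dir" ]; then
        echo "not-writable: $data_dir"
    fi
    # Reason 2: files left behind by another user, for example root
    find "$data_dir" ! -user "$(id -u)" -print 2>/dev/null |
    while IFS= read -r path; do
        echo "foreign-owner: $path"
    done
}
```

Run as the elk user, `diagnose_node_lock /data/elasticsearch-7.2.1/data` would list any files still owned by root. In that case, running `chown -R elk:elk /data/elasticsearch-7.2.1/data` as root may be enough and keeps the existing data. If nothing is printed, a second es process may already hold the lock; `pgrep -f org.elasticsearch.bootstrap.Elasticsearch` is one way to look for it.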

2. Permission denied at startup

The specific error information is as follows:

[elk@testmachine root]$ 2021-09-27 10:51:02,939 main ERROR RollingFileManager (/home/elasticsearch/logs/my-application_server.json) java.io.FileNotFoundException: /home/elasticsearch/logs/my-application_server.json (Permission denied) java.io.FileNotFoundException: /home/elasticsearch/logs/my-application_server.json (Permission denied)

at java.base/java.io.FileOutputStream.open0(Native Method)

at java.base/java.io.FileOutputStream.open(FileOutputStream.java:291)

at java.base/java.io.FileOutputStream.<init>(FileOutputStream.java:234)

at java.base/java.io.FileOutputStream.<init>(FileOutputStream.java:155)

at org.apache.logging.log4j.core.appender.rolling.RollingFileManager$RollingFileManagerFactory.createManager(RollingFileManager.java:640)

at org.apache.logging.log4j.core.appender.rolling.RollingFileManager$RollingFileManagerFactory.createManager(RollingFileManager.java:608)

at org.apache.logging.log4j.core.appender.AbstractManager.getManager(AbstractManager.java:113)

at org.apache.logging.log4j.core.appender.OutputStreamManager.getManager(OutputStreamManager.java:114)

at org.apache.logging.log4j.core.appender.rolling.RollingFileManager.getFileManager(RollingFileManager.java:188)

at org.apache.logging.log4j.core.appender.RollingFileAppender$Builder.build(RollingFileAppender.java:145)

at org.apache.logging.log4j.core.appender.RollingFileAppender$Builder.build(RollingFileAppender.java:61)

at org.apache.logging.log4j.core.config.plugins.util.PluginBuilder.build(PluginBuilder.java:123)

at org.apache.logging.log4j.core.config.AbstractConfiguration.createPluginObject(AbstractConfiguration.java:959)

at org.apache.logging.log4j.core.config.AbstractConfiguration.createConfiguration(AbstractConfiguration.java:899)

at org.apache.logging.log4j.core.config.AbstractConfiguration.createConfiguration(AbstractConfiguration.java:891)

at org.apache.logging.log4j.core.config.AbstractConfiguration.doConfigure(AbstractConfiguration.java:514)

at org.apache.logging.log4j.core.config.AbstractConfiguration.initialize(AbstractConfiguration.java:238)

at org.apache.logging.log4j.core.config.AbstractConfiguration.start(AbstractConfiguration.java:250)

at org.apache.logging.log4j.core.LoggerContext.setConfiguration(LoggerContext.java:547)

at org.apache.logging.log4j.core.LoggerContext.start(LoggerContext.java:263)

at org.elasticsearch.common.logging.LogConfigurator.configure(LogConfigurator.java:225)

The error message shows a permission problem with the file logs/my-application_server.json. Enter the es logs directory and check the owner of every file with the ls -l command. You will find that some files belong to the root user, which is the cause of the error.

Solution: go back to the es root directory, which contains logs, and run the following commands:

chown -R elk:elk logs

chmod -R 777 logs

Enter the logs directory and check that all files now belong to elk:elk.

If so, restart es; it should now start successfully.
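Once the files belong to elk, `chmod -R 777` grants more access than es needs, since only the owner has to write to the logs. A narrower variant could look like the sketch below. `fix_log_permissions` is a hypothetical helper name, and the choice of `u+rwX,go-w` is an assumption about what is sufficient here, not something taken from the es documentation.

```shell
#!/bin/sh
# Hypothetical helper: hand the whole tree to OWNER (user:group), then let
# only the owner write, instead of opening everything up with 777.
fix_log_permissions() {
    dir=$1
    owner=$2
    chown -R "$owner" "$dir" || return 1
    # u+rwX: owner may read and write files and traverse directories
    # go-w:  group and others lose write access
    chmod -R u+rwX,go-w "$dir" || return 1
    # Succeed only if nothing in the tree still belongs to another user
    [ -z "$(find "$dir" ! -user "${owner%%:*}" -print)" ]
}
```

For the setup in this article the call would be `fix_log_permissions /home/elasticsearch/logs elk:elk`, run as root. A non-zero exit status means some file could not be handed over and still needs attention before es is restarted.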

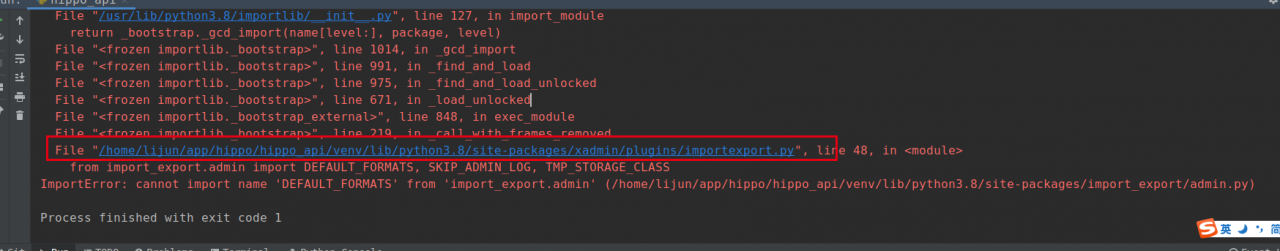

Note the error message line:
